A Local Contrast Fusion Based 3D Otsu Algorithm for Multilevel Image Segmentation

Ashish Kumar Bhandari, Arunangshu Ghosh, and Immadisetty Vinod Kumar

Abstract—The 3D Otsu method was developed to overcome the shortcomings of the 1D and 2D Otsu thresholding techniques. Among Otsu-based methods, the 3D Otsu technique provides the best threshold values for multilevel thresholding. In this paper, a simple and effective multilevel thresholding method is introduced to improve the quality of segmented images. The proposed approach focuses on preserving edge detail by combining 3D Otsu thresholding with a local contrast fusion technique. The advantages of the presented scheme include higher-quality outcomes, better preservation of fine details and boundaries, and reduced execution time as the number of threshold levels increases. The fusion approach relies on the differences between pixel intensity values within a small local neighborhood of an image, and it aims to improve localized information after the thresholding process. Fusing images based on local contrast can improve segmentation performance by minimizing the loss of local contrast, detail and gray-level distribution. Objective and subjective evaluations show that the proposed method yields more promising segmentation results than the conventional 1D Otsu, 2D Otsu and 3D Otsu methods.

I. INTRODUCTION

IMAGE multilevel thresholding is frequently used to partition images in many image processing pipelines. During segmentation, pixels are assigned to several classes on the basis of their gray levels. Many segmentation methods have been proposed for this purpose, among which thresholding-based segmentation is simple and efficient. The foremost task in thresholding is choosing the optimal threshold values. Among the existing thresholding methods, Otsu’s approach is considered one of the most popular and efficient. Image segmentation is used in numerous applications such as object detection, feature classification, the biomedical domain [1], and more [2]-[4]. If a single threshold is selected to produce two regions, the process is known as bi-level thresholding, which is used to distinguish a particular class from its background. Bi-level thresholding is simple to implement, whereas in multilevel thresholding the complexity rises and precision decreases because several threshold levels must be searched at once [5]. Image thresholding approaches fall into two categories: parametric and nonparametric [6], [7]. Parametric methods model each region with a probability density function (PDF), which entails a high computational cost. Nonparametric methods instead optimize criteria such as the between-class variance, entropy or error rate [8]-[10]. These nonparametric methods are generally preferred for threshold selection because of their accuracy and robustness [11].

In this context, Nobuyuki Otsu introduced the Otsu method [12], a discrete analogue of Fisher’s discriminant analysis [13]. It selects threshold values through an exhaustive search that maximizes the variance between classes. Numerous techniques [14]-[16] have been presented to improve the traditional Otsu approach, yet these schemes fail to obtain suitable results for color and weakly illuminated images. To overcome these issues, Liu et al. [17] proposed the 2D Otsu method, which finds a suitable threshold on the basis of a 2D histogram built by combining gray levels with spatial correlation information. This technique has shown robustness to distorted and noisy images. However, the probabilities of foreground and background pixels falling in the second and third classes of the 2D histogram, which lie close to the optimal threshold vector, are neglected, resulting in unsatisfactory segmentation results. Jing et al. [18] proposed the 3D Otsu method, which maximizes the variance between pixel clusters on the basis of a 3D histogram. This histogram uses the median of the neighborhood as a third feature, along with the pixel’s gray level and the neighborhood mean. This approach is reported to offer better thresholding performance than the earlier 1D and 2D methods for distorted images and for images with a low signal-to-noise ratio.

Although adding the third feature increases the computational cost from $O(L^2)$ in 2D Otsu to $O(L^3)$, numerous improved versions have been suggested. One set of recurrence formulas for the 3D Otsu function removes redundant computation by introducing a look-up table, which reduces the cost to $O(L^2)$; however, the time needed to search the look-up table remained high. Another, similar 3D Otsu function provides reasonable results for distorted images while reducing the cost from $O(L^3)$ to $O(L)$. To further address these problems, an efficient iterative procedure was suggested that yields higher accuracy and better image information in the thresholded image, while also decreasing execution time by 20%. Nevertheless, these improved methods still rely on exhaustive exploration requiring $L \times L \times L$ iterations, and 3D Otsu does not handle the aforementioned issues satisfactorily. Therefore, in this paper, the outputs of 3D Otsu’s method, i.e., the segmented images, are enhanced using a fusion method based on local contrast.

The major limitation of the proposed approach is its high processing time due to the use of a 3D model. Indeed, the governing equations show that the traditional 3D Otsu approach needs more execution time than 1D and 2D Otsu to search for optimal thresholds.

To address these issues, a fusion approach based on local image contrast [19] is combined with the 3D Otsu objective function to obtain accurate segmentation results. Local contrast is based on the gray-level differences within a specific local area of an image; other formulations also exist, such as those based on logarithmic image processing (LIP). In image contrast enhancement, fusing the input image with its enhanced output yields better results than the individual enhancement methods alone; comprehensive information on fusion-based image enhancement is provided in [19]. Inspired by this mechanism, we follow a similar formulation and propose a thresholding-based segmentation in which the input image and the thresholded images are fused to obtain better segmentation results. The local contrast fusion method has been applied to the 1D, 2D and 3D Otsu methods, and a parametric analysis has been carried out to evaluate the experimental results.

The remainder of the paper is structured as follows. Section II presents the motivation and discusses the 1D and 2D Otsu functions in detail. Section III describes the fundamental formulation of 3D Otsu and the proposed method. Section IV presents the simulation results and the comparative assessment, and Section V concludes the paper.

II. MOTIVATION AND PREVIOUS WORKS

Several multilevel image thresholding methods have been reported over the years. In general, thresholding-based color image segmentation is often inaccurate in ambiguous regions because of densely packed information and small, abrupt intensity variations. On the other hand, the 3D Otsu function provides a suitable solution for searching multiple thresholds, despite its higher running time. Yet multilevel segmentation with classical approaches remains complicated by the high computational cost of finding optimal results.

1D Otsu thresholding uses the 1D histogram of an image, which only depicts the gray-level distribution and does not account for spatial information between pixels. Therefore, thresholding performance may degrade as the signal-to-noise ratio (SNR) decreases and image complexity increases. To overcome these problems, 1D [6] thresholding has been extended to the 2D [17] and 3D [19] Otsu procedures, which also consider information from neighboring pixels. While increasing the dimensionality of the 1D Otsu method may improve thresholding performance, 2D and 3D Otsu use exhaustive searches to find optimal thresholds. This problem intensifies for 3D Otsu because it uses a 3D histogram. Despite this, 3D Otsu provides better thresholding results for low-SNR and distorted images, although at the cost of high execution time.

Adding auxiliary features such as the neighborhood mean and median raises the segmentation performance of the 3D Otsu scheme above that of 1D and 2D Otsu. By exploiting this information, the 3D Otsu method yields suitable thresholds by maximizing the between-class variance. Through the use of the 3D Otsu method, the work described in this paper decreases the execution cost from $O(L^3)$ to $O(L)$. In this work, 3D Otsu is used as an objective criterion together with fusion for image segmentation, which makes it usable in efficient multilevel segmentation over a wide range of regions. In addition, a thorough comparison with state-of-the-art methods is provided to validate the proposed approach. To better exploit structural regions and details, fusion has been incorporated into the multilevel thresholding operation. Thus, this paper presents a segmentation method that is not only accurate and efficient but also applicable to a wide variety of problems involving color images.

Furthermore, the fusion approach relies on differences between intensity values in a small local region of an image, and its aim is to improve localized information after thresholding. Local contrast image fusion can improve segmentation performance by minimizing the loss of local contrast, detail and gray-level distribution. Therefore, in the proposed approach, the original and thresholded images are fused to preserve the finer information of both in the best possible manner. The presented algorithm considerably raises the precision of thresholded images without disturbing the input features and finer details of the image. Thus, it can be applied in several imaging applications such as feature extraction, biomedical imaging and industrial inspection.

A. 1D Otsu

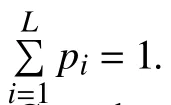

For a specified image $I$, the intensity levels lie in the range $[1, 2, \ldots, L]$. If the total number of pixels in $I$ is $N$ and the number of pixels with gray level $i$ is denoted by $n_i$, then the probability of occurrence of gray level $i$ is $p_i = n_i/N$, with $\sum_{i=1}^{L} p_i = 1$.

The total mean is defined as $\mu_T = \sum_{i=1}^{L} i\,p_i$.

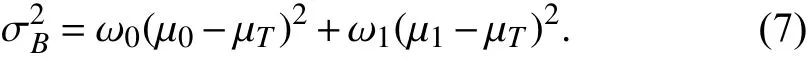

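A threshold $t$ splits the gray levels into classes $C_0 = \{1, \ldots, t\}$ and $C_1 = \{t+1, \ldots, L\}$, with class probabilities $\omega_0 = \sum_{i=1}^{t} p_i$ and $\omega_1 = 1 - \omega_0$ and class means $\mu_0 = \sum_{i=1}^{t} i\,p_i/\omega_0$ and $\mu_1 = \sum_{i=t+1}^{L} i\,p_i/\omega_1$. The between-class variance of $C_0$ and $C_1$ is $\sigma_B^2(t) = \omega_0(\mu_0 - \mu_T)^2 + \omega_1(\mu_1 - \mu_T)^2$, and the optimal threshold is $t^* = \arg\max_{1 \le t < L} \sigma_B^2(t)$.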
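A minimal NumPy sketch of the exhaustive 1D search follows, assuming an 8-bit gray image whose levels are indexed 0 to L-1 (rather than 1 to L as in the text). It uses the standard identity $\sigma_B^2 = (\mu_T\omega_0 - \mu(t))^2/(\omega_0\omega_1)$, where $\mu(t)$ is the cumulative first moment, so every candidate threshold is scored in one vectorized pass. The function name `otsu_1d` is illustrative and not taken from the paper.

```python
import numpy as np


def otsu_1d(img: np.ndarray, levels: int = 256) -> int:
    """Return the threshold t maximizing the between-class variance.

    Pixels with gray level <= t form C0 and the rest form C1 (0-based levels).
    """
    hist = np.bincount(img.ravel(), minlength=levels).astype(float)
    p = hist / hist.sum()                      # p_i = n_i / N
    i = np.arange(levels)
    omega0 = np.cumsum(p)                      # probability of C0 for every t
    mu_cum = np.cumsum(i * p)                  # cumulative first moment mu(t)
    mu_t = mu_cum[-1]                          # total mean mu_T
    omega1 = 1.0 - omega0
    # omega0*(mu0-muT)^2 + omega1*(mu1-muT)^2 == (muT*omega0 - mu(t))^2 / (omega0*omega1)
    num = (mu_t * omega0 - mu_cum) ** 2
    den = omega0 * omega1
    sigma_b = np.where(den > 0, num / np.where(den > 0, den, 1.0), 0.0)
    return int(np.argmax(sigma_b))             # first maximizer if there are ties
```

When several thresholds separate the classes equally well, `np.argmax` returns the smallest one.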

B. 2D Otsu

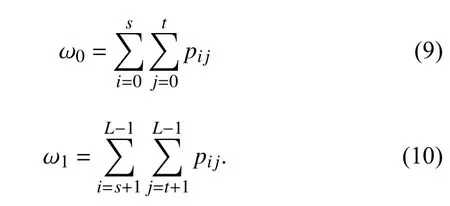

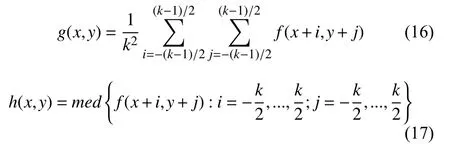

Consider that the pixels of a given image may be divided into two classes, background $C_0$ and foreground $C_1$, by a threshold vector $(s, t)$. In 2D Otsu’s method [20], a two-dimensional histogram is formed from the gray level $i$ of each pixel and the mean $j$ of its neighboring pixels; the neighborhood mean is computed through (16). Let $p_{ij} = n_{ij}/N$, where $n_{ij}$ is the number of pixels with gray level $i$ and neighborhood mean $j$. The probabilities of occurrence of the two classes are $\omega_0 = \sum_{i=1}^{s}\sum_{j=1}^{t} p_{ij}$ and $\omega_1 = \sum_{i=s+1}^{L}\sum_{j=t+1}^{L} p_{ij}$.

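The mean vectors of $C_0$ and $C_1$ are $\mu_0 = (\mu_{0i}, \mu_{0j})^T = \big(\sum_{i=1}^{s}\sum_{j=1}^{t} i\,p_{ij}/\omega_0,\ \sum_{i=1}^{s}\sum_{j=1}^{t} j\,p_{ij}/\omega_0\big)^T$ and $\mu_1 = (\mu_{1i}, \mu_{1j})^T = \big(\sum_{i=s+1}^{L}\sum_{j=t+1}^{L} i\,p_{ij}/\omega_1,\ \sum_{i=s+1}^{L}\sum_{j=t+1}^{L} j\,p_{ij}/\omega_1\big)^T$.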

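The total mean vector of the 2D histogram is $\mu_T = (\mu_{Ti}, \mu_{Tj})^T = \big(\sum_{i=1}^{L}\sum_{j=1}^{L} i\,p_{ij},\ \sum_{i=1}^{L}\sum_{j=1}^{L} j\,p_{ij}\big)^T$.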

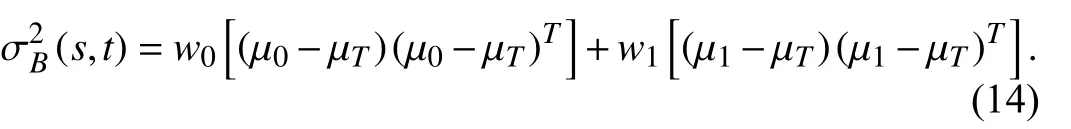

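The between-class variance is measured by the trace of the between-class scatter matrix, $\operatorname{tr}(S_B) = \omega_0\big[(\mu_{0i}-\mu_{Ti})^2 + (\mu_{0j}-\mu_{Tj})^2\big] + \omega_1\big[(\mu_{1i}-\mu_{Ti})^2 + (\mu_{1j}-\mu_{Tj})^2\big]$.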

The optimal threshold vector is $(s^*, t^*) = \arg\max_{1 \le s, t < L} \operatorname{tr}(S_B)$.
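The 2D search can be sketched with NumPy as below. It assumes a 3×3 neighborhood mean rounded to the nearest integer level, edge-replicated borders and 0-based gray levels; these choices and the function names are illustrative rather than taken from [20]. Cumulative (summed-area) tables give the weights and first moments of $C_0$ and $C_1$ for every $(s, t)$ at once, so the between-class criterion (the trace of the between-class scatter matrix) is evaluated over the whole $L \times L$ grid without re-summing each block.

```python
import numpy as np


def neighborhood_mean(img: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k neighborhood mean with edge-replicated borders, rounded to integer levels."""
    r = k // 2
    padded = np.pad(img.astype(float), r, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            acc += padded[dy:dy + h, dx:dx + w]
    return np.rint(acc / (k * k)).astype(int)


def otsu_2d(img: np.ndarray, levels: int = 256) -> tuple[int, int]:
    """Return (s, t) maximizing the trace of the between-class scatter matrix."""
    f = img.astype(int)
    g = neighborhood_mean(img)
    hist = np.zeros((levels, levels))
    np.add.at(hist, (f.ravel(), g.ravel()), 1)
    p = hist / hist.sum()                          # joint probability p_ij
    i = np.arange(levels, dtype=float)[:, None]    # gray-level index
    j = np.arange(levels, dtype=float)[None, :]    # neighborhood-mean index

    def prefix(a):                                 # inclusive 2D prefix sums: C0 = {i<=s, j<=t}
        return a.cumsum(0).cumsum(1)

    def upper(S):                                  # sums over C1 = {i>s, j>t}
        return S[-1, -1] - S[:, -1:] - S[-1:, :] + S

    w0, mi0, mj0 = prefix(p), prefix(i * p), prefix(j * p)
    w1, mi1, mj1 = upper(w0), upper(mi0), upper(mj0)
    mu_ti, mu_tj = mi0[-1, -1], mj0[-1, -1]        # total mean vector

    def term(w, mi, mj, eps=1e-12):
        # omega * ||mu_c - mu_T||^2 written as ||m_c - mu_T * omega||^2 / omega
        safe = np.where(w > eps, w, 1.0)
        return np.where(w > eps, ((mi - mu_ti * w) ** 2 + (mj - mu_tj * w) ** 2) / safe, 0.0)

    tr_sb = term(w0, mi0, mj0) + term(w1, mi1, mj1)
    s, t = np.unravel_index(np.argmax(tr_sb), tr_sb.shape)
    return int(s), int(t)
```

For a two-region image, the mean component `t` lands between the neighborhood means of the pixels on either side of the boundary, so those edge pixels are assigned to $C_0$ or $C_1$ rather than dropped.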

III. PROPOSED ALGORITHM

In this section, a proficient approach to produce the suitable multimode threshold point using 3D Otsu technique coupled with fusion concept has been presented. The detailed processes of the stated algorithm are described as follows.

A. 3D Otsu

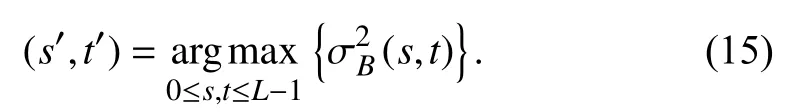

In 3D Otsu, the histogram is created by considering the gray level of each pixel together with its spatial information, namely the neighborhood mean and median. Consequently, it offers better noise resistance than the 1D and 2D Otsu schemes. Consider an image $I$ with $L$ gray levels and $N$ pixels. The gray level of pixel $(x, y)$ is denoted by $f(x, y)$, and the mean and median gray levels of its $k \times k$ neighborhood are denoted by $g(x, y)$ and $h(x, y)$, respectively:
$$g(x,y) = \frac{1}{k^2}\sum_{m=-\lfloor k/2\rfloor}^{\lfloor k/2\rfloor}\sum_{n=-\lfloor k/2\rfloor}^{\lfloor k/2\rfloor} f(x+m, y+n), \qquad h(x,y) = \operatorname{med}\{f(x+m, y+n) : |m|, |n| \le \lfloor k/2\rfloor\}$$

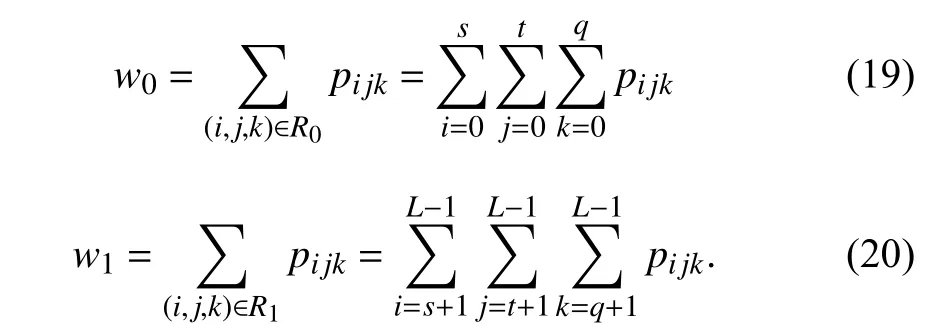

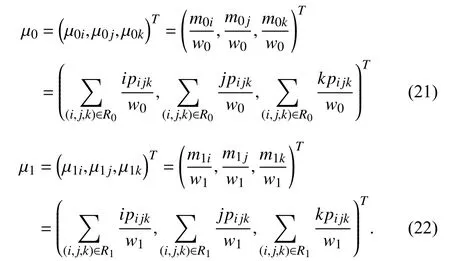

where $k = 3$ in this work. For each pixel of $I$, the mean and median are computed over its $k \times k$ neighborhood. These values are then combined into a triple $(i, j, k)$, where $i$ is the gray level of the pixel in $f(x, y)$, $j$ is the gray level of the neighborhood mean in $g(x, y)$ and $k$ is the gray level of the neighborhood median in $h(x, y)$. The 3D histogram in Fig. 1 is built by placing $f$, $g$ and $h$ along the $x$-, $y$- and $z$-axes, respectively. Because the mean and median images take values in the same $L$ gray levels, every triple lies within an $L \times L \times L$ cube. The probability of the triple $(i, j, k)$ is $p_{ijk} = n_{ijk}/N$, where $n_{ijk}$ is the number of pixels with that triple, so that $\sum_{i,j,k} p_{ijk} = 1$.
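The histogram construction just described can be sketched as follows, assuming edge-replicated borders, the neighborhood mean rounded to the nearest gray level, and 0-based levels. The median index is written `m` in the comments to avoid a clash with the window size `k`. The full cube has $L^3$ bins (about 16.8 million for $L = 256$, roughly 134 MB in float64), so the sketch takes the number of levels as a parameter, which allows a reduced, quantized range; the function names are hypothetical.

```python
import numpy as np


def neighborhood_stack(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Stack the k*k shifted views of an edge-padded image along a new first axis."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(k) for dx in range(k)])


def histogram_3d(img: np.ndarray, levels: int = 256, k: int = 3) -> np.ndarray:
    """Normalized 3D histogram p[i, j, m] of (gray level, neighborhood mean, neighborhood median)."""
    f = img.astype(int)                               # f(x, y)
    win = neighborhood_stack(f, k)
    g = np.rint(win.mean(axis=0)).astype(int)         # g(x, y): rounded k x k mean
    h = np.median(win, axis=0).astype(int)            # h(x, y): k x k median (k*k odd, so exact)
    hist = np.zeros((levels, levels, levels))
    np.add.at(hist, (f.ravel(), g.ravel(), h.ravel()), 1)   # count each (i, j, m) triple
    return hist / f.size                              # p_ijm = n_ijm / N
```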

Let $(s, t, q)$ be an arbitrary threshold vector that divides the 3D histogram into eight cuboids, as illustrated in Figs. 1(a)-(d). In Fig. 1(b), class 0 corresponds to the background, class 1 to the object region, and classes 2-7 to intensities near the edges. The probabilities of classes 2-7 are approximated as 0, because the number of pixels near boundaries is much smaller than the total number of pixels in the image.

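Let $C_0$ and $C_1$ denote classes 0 and 1, corresponding to the background and object, respectively. Partitioning the image with the threshold vector $(s, t, q)$, the probabilities of $C_0$ and $C_1$ are $\omega_0 = \sum_{i=1}^{s}\sum_{j=1}^{t}\sum_{k=1}^{q} p_{ijk}$ and $\omega_1 = \sum_{i=s+1}^{L}\sum_{j=t+1}^{L}\sum_{k=q+1}^{L} p_{ijk}$.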

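The mean vectors of $C_0$ and $C_1$ are $\mu_0 = (\mu_{0i}, \mu_{0j}, \mu_{0k})^T = \frac{1}{\omega_0}\big(\sum_{C_0} i\,p_{ijk},\ \sum_{C_0} j\,p_{ijk},\ \sum_{C_0} k\,p_{ijk}\big)^T$ and $\mu_1 = (\mu_{1i}, \mu_{1j}, \mu_{1k})^T = \frac{1}{\omega_1}\big(\sum_{C_1} i\,p_{ijk},\ \sum_{C_1} j\,p_{ijk},\ \sum_{C_1} k\,p_{ijk}\big)^T$, where $\sum_{C_0}$ and $\sum_{C_1}$ denote the triple sums over the index ranges used for $\omega_0$ and $\omega_1$.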

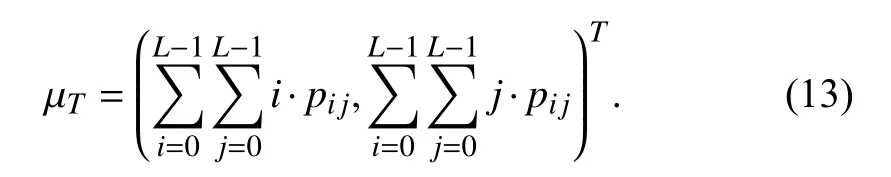

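The total mean vector of the 3D histogram is $\mu_T = (\mu_{Ti}, \mu_{Tj}, \mu_{Tk})^T = \big(\sum_{i,j,k} i\,p_{ijk},\ \sum_{i,j,k} j\,p_{ijk},\ \sum_{i,j,k} k\,p_{ijk}\big)^T$, with all sums running from 1 to $L$.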

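The between-class variance is measured by $\operatorname{tr}(S_B) = \omega_0 (\mu_0 - \mu_T)^T(\mu_0 - \mu_T) + \omega_1 (\mu_1 - \mu_T)^T(\mu_1 - \mu_T)$.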

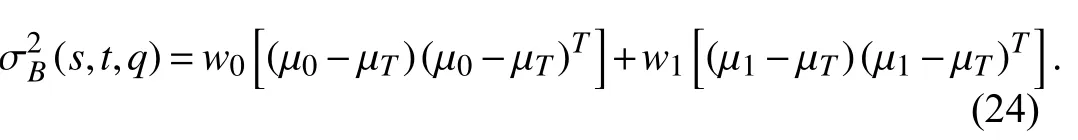

The optimal threshold vector is $(s^*, t^*, q^*) = \arg\max_{1 \le s, t, q < L} \operatorname{tr}(S_B)$.

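Lastly, for $M$ thresholds, the formulation is extended to $M + 1$ classes, and the threshold vectors are chosen to maximize the total between-class variance summed over all classes.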
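The bi-level 3D search can be sketched with NumPy as follows, assuming `p` is a normalized $L \times L \times L$ histogram with 0-based indices and that classes 2-7 are ignored, as described above. Inclusive prefix sums along all three axes give the weight and first moments of $C_0$ for every $(s, t, q)$, and inclusion-exclusion gives those of $C_1$, so each candidate is scored in constant time. The search still visits all $L^3$ candidates and keeps several $L^3$ arrays in memory, so the sketch is practical mainly for reduced level counts. The multilevel extension is not shown, and the names are hypothetical.

```python
import numpy as np


def _prefix(a: np.ndarray) -> np.ndarray:
    """Inclusive prefix sums: entry (s, t, q) sums a over {i<=s, j<=t, m<=q}."""
    return a.cumsum(0).cumsum(1).cumsum(2)


def _upper(S: np.ndarray) -> np.ndarray:
    """Sum over {i>s, j>t, m>q} from an inclusive prefix table, by inclusion-exclusion."""
    return (S[-1, -1, -1]
            - S[:, -1:, -1:] - S[-1:, :, -1:] - S[-1:, -1:, :]
            + S[:, :, -1:] + S[:, -1:, :] + S[-1:, :, :]
            - S)


def otsu_3d(p: np.ndarray) -> tuple[int, int, int]:
    """Return (s, t, q) maximizing tr(S_B) over the background C0 and object C1 only."""
    L = p.shape[0]
    idx = np.arange(L, dtype=float)
    axes = (idx[:, None, None], idx[None, :, None], idx[None, None, :])
    w0 = _prefix(p)                                    # omega_0 for every (s, t, q)
    mom0 = [_prefix(c * p) for c in axes]              # first moments of C0
    mu_t = [m[-1, -1, -1] for m in mom0]               # total mean vector
    w1 = _upper(w0)                                    # omega_1
    mom1 = [_upper(m) for m in mom0]                   # first moments of C1

    def term(w, moments, eps=1e-12):
        # omega * ||mu_c - mu_T||^2 written as ||m_c - mu_T * omega||^2 / omega
        safe = np.where(w > eps, w, 1.0)
        dev = sum((m - mu * w) ** 2 for m, mu in zip(moments, mu_t))
        return np.where(w > eps, dev / safe, 0.0)

    tr_sb = term(w0, mom0) + term(w1, mom1)
    s, t, q = np.unravel_index(np.argmax(tr_sb), tr_sb.shape)
    return int(s), int(t), int(q)
```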

B. Fusion Based on Local Contrast

The foremost aim of image enhancement is to improve human viewers’ perception of the details in images, or to provide better features than the raw data [21]-[23]. However, these approaches still fail to handle issues such as the loss of original color, fine details and local contrast. Such distortions can be mitigated through fusion approaches based on local image contrast. Hence, a new image is synthesized by merging the thresholded image and the input image to produce better segmentation results.

In this work, the aforementioned image fusion concept [19] has been combined with the 3D Otsu objective criterion. Fundamentally, local contrast is based on the differences among pixel values within a small local region of the image. Let $P_i$ $(i = 1, 2)$ be two normalized gray-level images. The local contrast $M_i(p, q)$ of $P_i$ at location $(p, q)$ is then computed from the minimum and maximum intensities of $J_i(p, q)$, the 3×3 local window of $P_i$ centered at $(p, q)$, where $\min(\cdot)$ and $\max(\cdot)$ return the minimum and maximum intensity values of that window. Thus, the local contrast is obtained by transforming the intensity values within each 3×3 local window. Other formulations of local contrast also exist, such as those used in logarithmic image processing (LIP) [24], [25].
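The exact expression for $M_i$ is given in [19] and is not reproduced here, so the sketch below assumes the simplest form consistent with the description: the range $\max(J_i(p, q)) - \min(J_i(p, q))$ over each 3×3 window, with edge-replicated borders. It illustrates the windowed min/max computation and should not be read as the reference definition; `local_contrast` is a hypothetical name.

```python
import numpy as np


def local_contrast(P: np.ndarray) -> np.ndarray:
    """Assumed local contrast: max minus min over each 3x3 window (edge-replicated)."""
    padded = np.pad(np.asarray(P, dtype=float), 1, mode="edge")
    h, w = P.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])   # the 9 values of J_i(p, q)
    return windows.max(axis=0) - windows.min(axis=0)
```

On a vertical step edge, only the two columns adjacent to the step receive a nonzero contrast.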

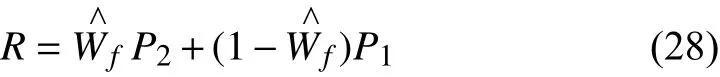

The image fusion method built on the above local contrast formulation operates on digital images. At a given location $(p, q)$, let the difference between $M_1$ and $M_2$ be $N = M_2 - M_1$. The fusion weight $W_f$ is then computed from $N$ as a smoothly increasing sigmoidal function governed by two fixed constants $p$ and $q$: $p$ controls the steepness of $W_f$, and $q$ sets the location of its midpoint, where $W_f = 0.5$. In this work, the constants are fixed at $p = 0.5$ and $q = -\min(N)/(\max(N) - \min(N))$; that is, $q$ is the value that $N = 0$ (equal local contrast in both images) takes under the min-max normalization $(N - \min(N))/(\max(N) - \min(N))$. Using a sigmoidal rather than a linear weight function preserves the beneficial qualities of both images in a well-defined manner. Hence, the fused image is

$F = (1 - \bar{W}_f)\,P_1 + \bar{W}_f\,P_2$,

where $\bar{W}_f$ denotes the normalized fusion weight function. The rationale for this weighting is that where $M_1 > M_2$ (i.e., $N < 0$), the weight is small and image $P_1$ dominates $P_2$ in the fused result because its local contrast is higher; the reverse holds where $M_2 > M_1$.
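The weighting can be sketched as below. The exact sigmoid is not reproduced in this text, so the code assumes $W_f = 1/(1 + \exp(-(\tilde{N} - q)/p))$, where $\tilde{N}$ is the min-max normalized difference $N = M_2 - M_1$, and further assumes that the normalized weight $\bar{W}_f$ is a min-max rescaling of $W_f$ to $[0, 1]$. The fused image is formed as $(1 - \bar{W}_f)P_1 + \bar{W}_f P_2$, so $P_1$ dominates wherever its local contrast is higher. Local contrast is again assumed to be the 3×3 max-minus-min range, and all function names are hypothetical.

```python
import numpy as np


def local_contrast(P):
    """Assumed local contrast: 3x3 max minus min, edge-replicated borders."""
    padded = np.pad(np.asarray(P, dtype=float), 1, mode="edge")
    h, w = P.shape
    win = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    return win.max(axis=0) - win.min(axis=0)


def fusion_weight(M1, M2, p=0.5):
    """Hypothetical normalized sigmoid weight applied to P2 (1 - weight goes to P1)."""
    N = M2 - M1
    span = N.max() - N.min()
    if span == 0:                                   # N is constant: fall back to equal weights
        return np.full(N.shape, 0.5)
    N_norm = (N - N.min()) / span                   # min-max normalized difference
    q = -N.min() / span                             # position of N = 0 after normalization
    W = 1.0 / (1.0 + np.exp(-(N_norm - q) / p))     # assumed sigmoid, W = 0.5 where N = 0
    return (W - W.min()) / (W.max() - W.min())      # assumed min-max normalization


def fuse(P1, P2, p=0.5):
    """Fuse two normalized gray images so the locally higher-contrast one dominates."""
    Wn = fusion_weight(local_contrast(P1), local_contrast(P2), p)
    return (1.0 - Wn) * P1 + Wn * P2
```

In the check below, the step image `P1` has the higher local contrast next to its edge, so the fused output copies `P1` in those columns. Because of the min-max step, the weights span the full $[0, 1]$ range for every image pair.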

C. Fusion for Color Image Segmentation

The presented fusion scheme suits gray-scale images and can also be applied to color images. To obtain a fused color image, the red-green-blue (RGB) image is converted into the hue-saturation-value (HSV) color space, and only the value component is included in the fusion procedure. The resulting fused image combines the fused value component with the hue and saturation components of the input image. Note that, for multilevel thresholding of color images, the input image is fused with the segmented image rather than with an enhanced image.
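Every component of the HSV-to-RGB conversion is the value $V$ multiplied by a factor that depends only on hue and saturation, so replacing $V$ while keeping $H$ and $S$ is equivalent to rescaling each RGB pixel by $V_{\text{new}}/V_{\text{old}}$. The sketch below uses that shortcut instead of an explicit round trip through HSV. It assumes float RGB in $[0, 1]$ and a fused value channel `v_fused` that has already been computed (for example, by local contrast fusion of the input and segmented $V$ channels). Black pixels have no defined hue, so they are mapped to gray at the new value. The function name is hypothetical.

```python
import numpy as np


def recombine_value(rgb: np.ndarray, v_fused: np.ndarray) -> np.ndarray:
    """Return an RGB image with the hue/saturation of `rgb` and HSV value `v_fused`."""
    rgb = np.asarray(rgb, dtype=float)
    v_old = rgb.max(axis=-1, keepdims=True)            # HSV value V = max(R, G, B)
    scale = np.divide(v_fused[..., None], v_old,
                      out=np.zeros_like(v_old), where=v_old > 0)
    out = rgb * scale                                  # same H and S, new V
    black = v_old[..., 0] == 0                         # hue undefined: use gray
    out[black] = v_fused[black][:, None]
    return out
```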

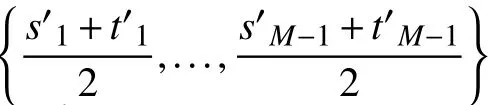

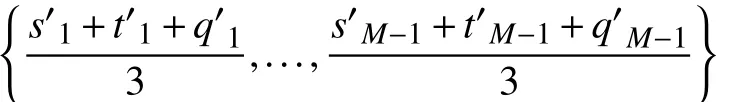

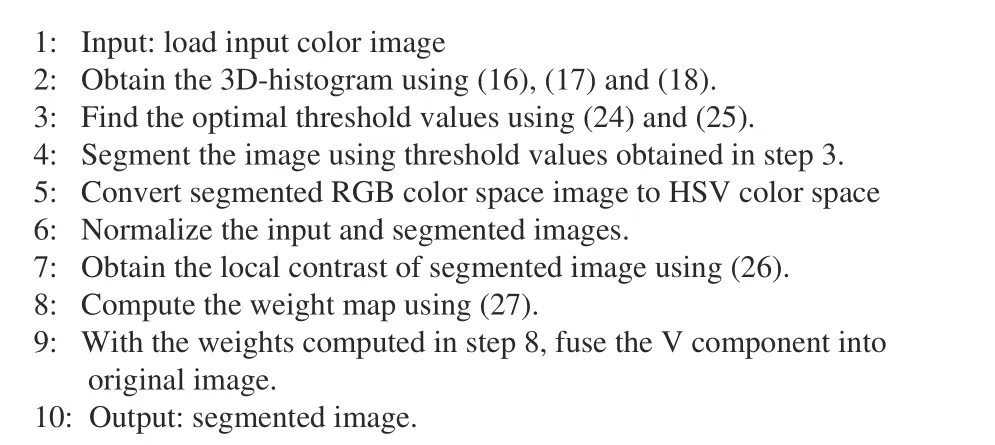

D. Fusion Based 3D Otsu Algorithm

In this subsection, the combination of fusion with 3D Otsu is discussed. In the thresholding process, as the number of levels increases, the number of thresholds to be searched also grows, leading to a higher execution cost that should be minimized. At each iteration, a new set of threshold values is evaluated against the objective criterion, which improves the quality of the segmented images.

In 3D Otsu’s method [26], images are divided into a predetermined number of regions using threshold values. The computational cost rises with the number of threshold levels because the search space grows accordingly. The 3D Otsu function selects the thresholds that maximize the between-class variance in (24). Adding a third feature, i.e., the neighborhood median, improves class separation compared with 1D and 2D Otsu. Using a greater number of thresholds improves the color image segmentation performance values. First, the image is segmented using conventional 3D Otsu at different threshold levels. The segmented image is then processed with the local contrast based fusion technique to improve the segmentation results. The resulting hybrid segmented image combines the merits of both the input image and the segmented data.
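The first stage of this pipeline, turning per-channel thresholds into a segmented color image, can be sketched as follows. The sketch assumes that each RGB channel has its own list of scalar thresholds (for 3D Otsu, for example, the gray-level components of the threshold vectors) and that each pixel is replaced by the mean intensity of its class. The class representative used in the paper is not stated in this section, and the function names are hypothetical. The resulting image would then be passed to the local contrast fusion step.

```python
import numpy as np


def apply_thresholds(channel: np.ndarray, thresholds) -> np.ndarray:
    """Replace each pixel by the mean of its class; class c holds values in (th[c-1], th[c]]."""
    th = np.sort(np.asarray(thresholds))
    labels = np.digitize(channel, th, right=True)      # 0 for values <= th[0], and so on
    out = np.empty(channel.shape, dtype=float)
    for c in range(len(th) + 1):
        mask = labels == c
        if mask.any():
            out[mask] = channel[mask].mean()           # assumed class representative
    return out


def segment_rgb(img: np.ndarray, thresholds_per_channel) -> np.ndarray:
    """Threshold each RGB channel independently with its own threshold list."""
    return np.stack([apply_thresholds(img[..., c], thresholds_per_channel[c])
                     for c in range(img.shape[-1])], axis=-1)
```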

The complete pseudo-code of the proposed fusion-3D Otsu multilevel color image thresholding is provided in Fig. 2.

IV. EXPERIMENTAL RESULTS

In this section, detailed simulation results are presented together with performance evaluation tables and visual comparisons. The proposed method is compared with the related 1D Otsu, 2D Otsu and 3D Otsu thresholding approaches.

Fig. 2. Pseudo-code of the proposed algorithm.

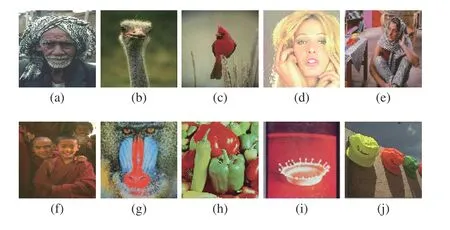

A. Image Data Set

In this work, the presented fusion-3D Otsu method is validated on standard images from the Berkeley data set [27] and the Kodim data set [28]. This set includes ten diverse color images, each in JPEG format and 256×256 in size, as shown in Figs. 3(a)-(j). RGB images have three color channels (red, green and blue), so the thresholds are searched separately on each channel of the original image. To check the competence of the 3D Otsu approach, extensive simulations at different threshold levels have been performed for each method, and the quality of the output images is examined with the following metrics: mean error (ME) [29], mean squared error (MSE) [30], peak signal-to-noise ratio (PSNR) [31], structural similarity index measure (SSIM) [32], feature similarity index measure (FSIM) [32], entropy [29], Jaccard/Tanimoto error (JTE) [33], and normalized absolute error (NAE) [33].

Fig. 3. Original test images [27], [28].

B. Simulation Setup

Simulations are performed at 2, 3, 5 and 8 threshold levels, and the overall procedure is implemented in MATLAB R2017a on Windows 10 using an Intel® Core i7 CPU @ 3.6 GHz with 8 GB of RAM. The introduced fusion-based 3D Otsu approach is mainly compared with the closely related traditional 1D and 2D Otsu methods, as well as with their fusion-based versions.

C. Performance Assessment and Comparison

In this subsection, comparative studies are carried out with the existing 1D and 2D Otsu methods. The objective is to establish the efficiency of the proposed fusion-based 3D Otsu algorithm, especially when applied to color images. For evaluation purposes, the proposed method is also compared with fusion-based 1D and 2D Otsu. In 1D Otsu, only pixel values are considered for the histogram, whereas 2D Otsu also includes the neighborhood mean.

In 3D Otsu, an extra component, the median, is added for histogram construction. Accordingly, 3D Otsu incorporates more detail from the raw image and uses this information during thresholding. Therefore, the proposed local contrast fusion technique applied with 3D Otsu produces more accurate segmented images. The proposed method improves the contrast of the segmented images and reduces the loss of detail at lower threshold levels.

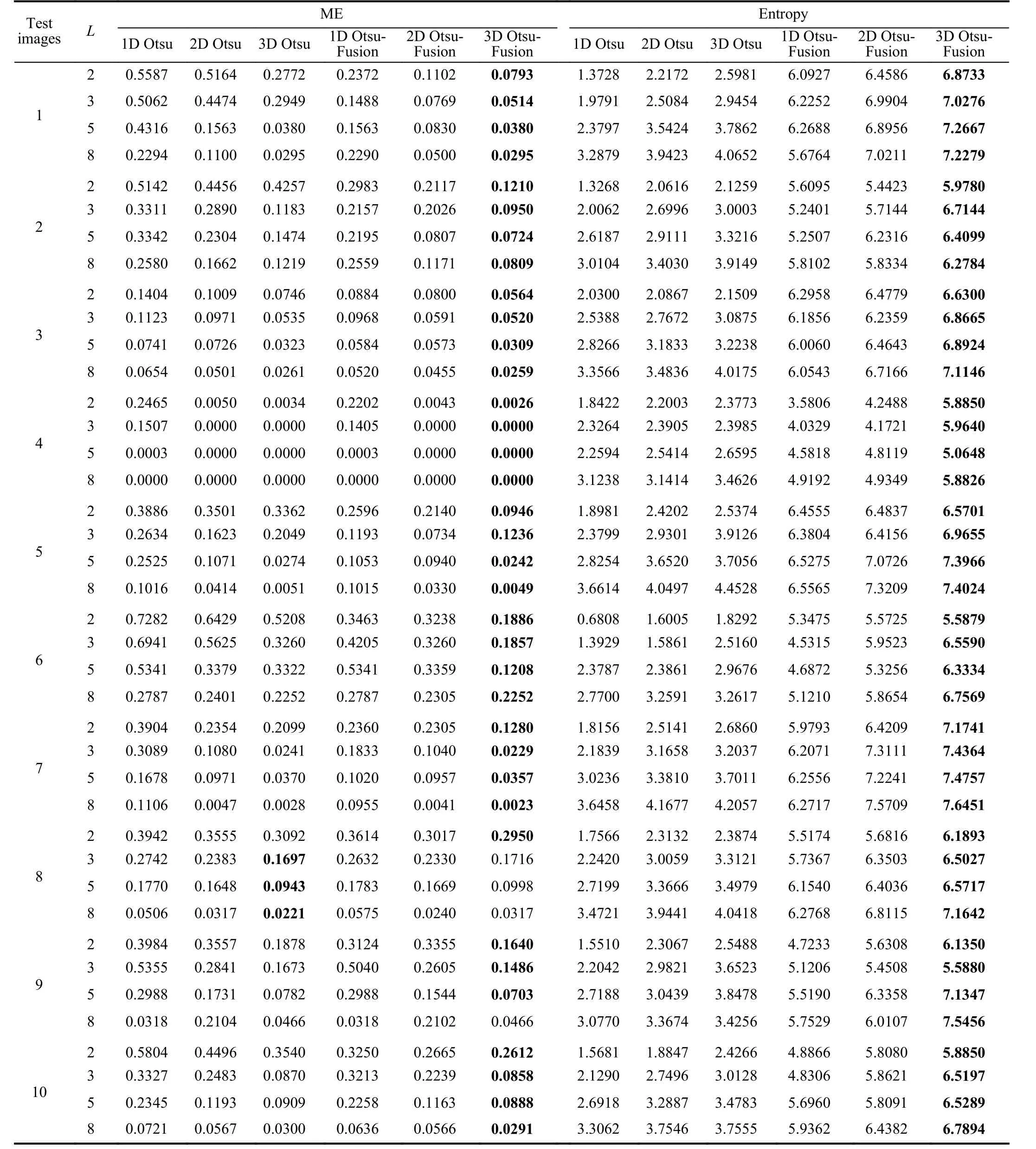

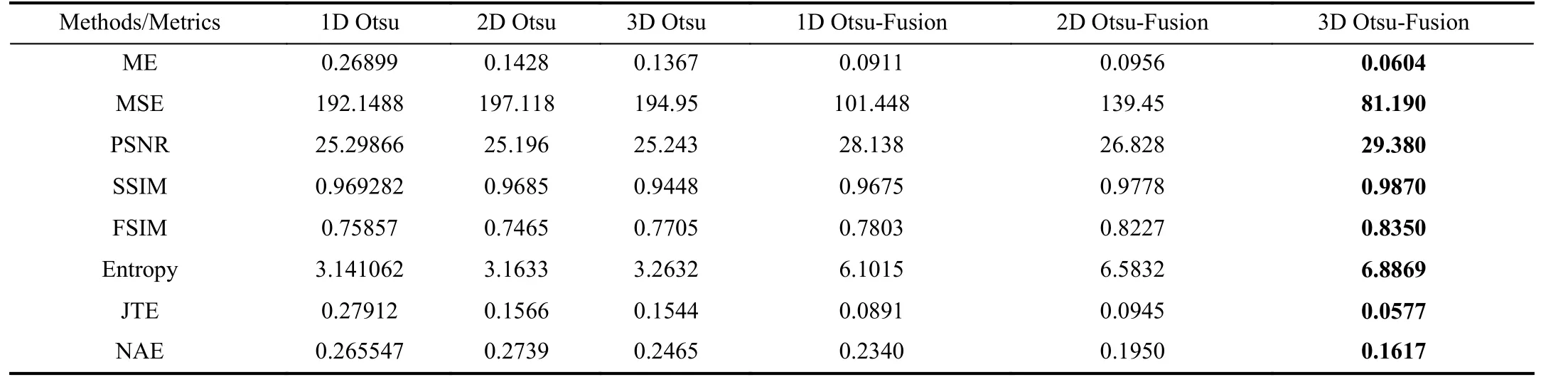

TABLE I COMPARISON OF ENTROPY AND ME COMPUTED BY DIFFERENT ALGORITHMS

Table I presents the ME and entropy values of the 1D, 2D and 3D Otsu methods realized with the proposed fusion method. Fusion-based 3D Otsu improves upon the results of fusion-based 1D and 2D Otsu. The proposed method produces higher entropy values for all the tested color images. Higher entropy indicates more information content in the segmented images, which reflects the primary objective of the proposed method.
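The entropy reported here is presumably the Shannon entropy of the gray-level histogram, $H = -\sum_i p_i \log_2 p_i$; a minimal sketch for one 8-bit channel follows. How per-channel values of a color image are combined (for example, averaged) is an assumption that this section does not specify, and `image_entropy` is a hypothetical name.

```python
import numpy as np


def image_entropy(img: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of the gray-level histogram of one 8-bit channel."""
    hist = np.bincount(img.ravel(), minlength=levels).astype(float)
    p = hist[hist > 0] / hist.sum()                    # empty bins contribute 0
    return float(-(p * np.log2(p)).sum())
```

An image with four equally frequent gray levels has an entropy of exactly 2 bits, and a constant image has an entropy of 0.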

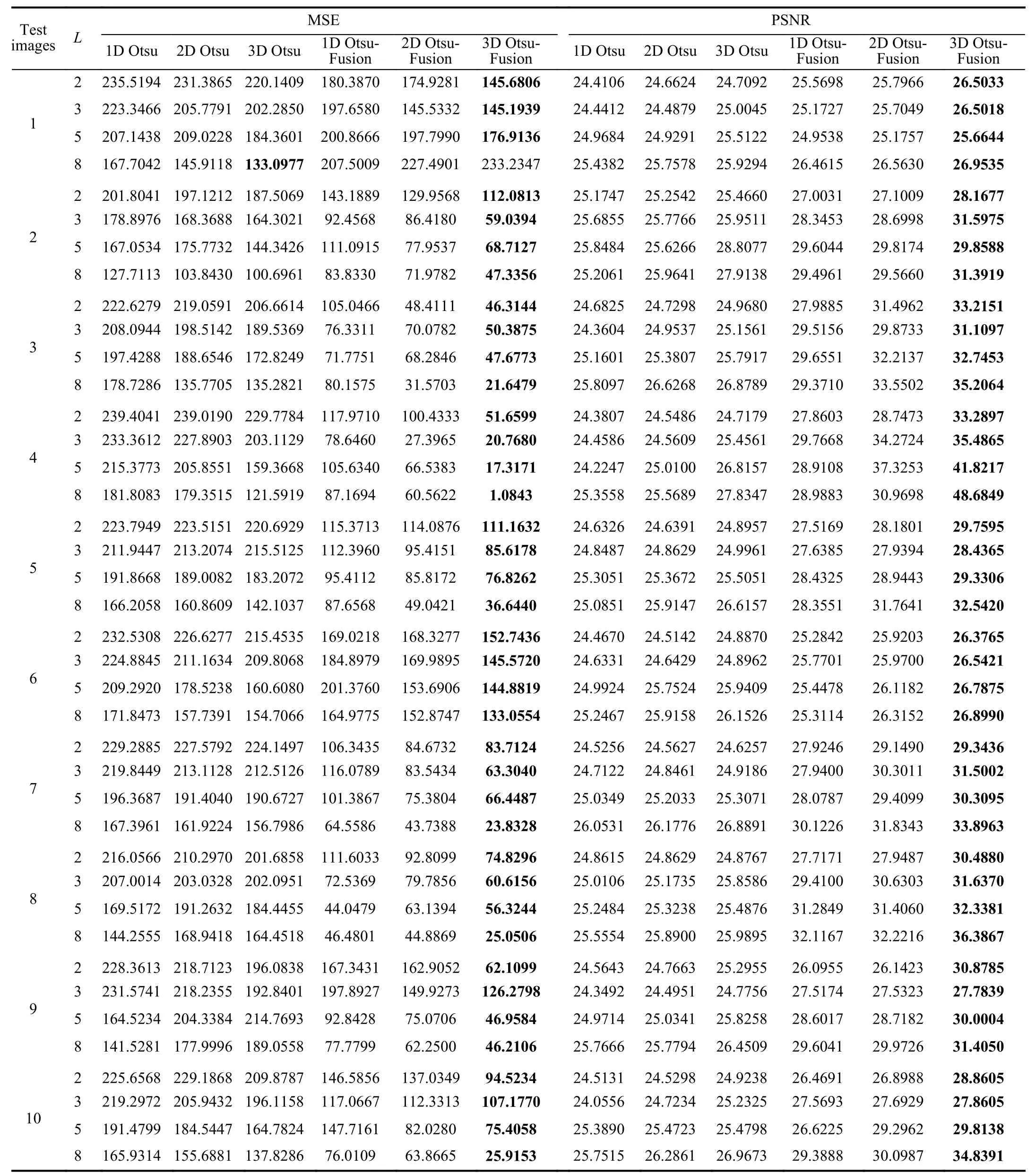

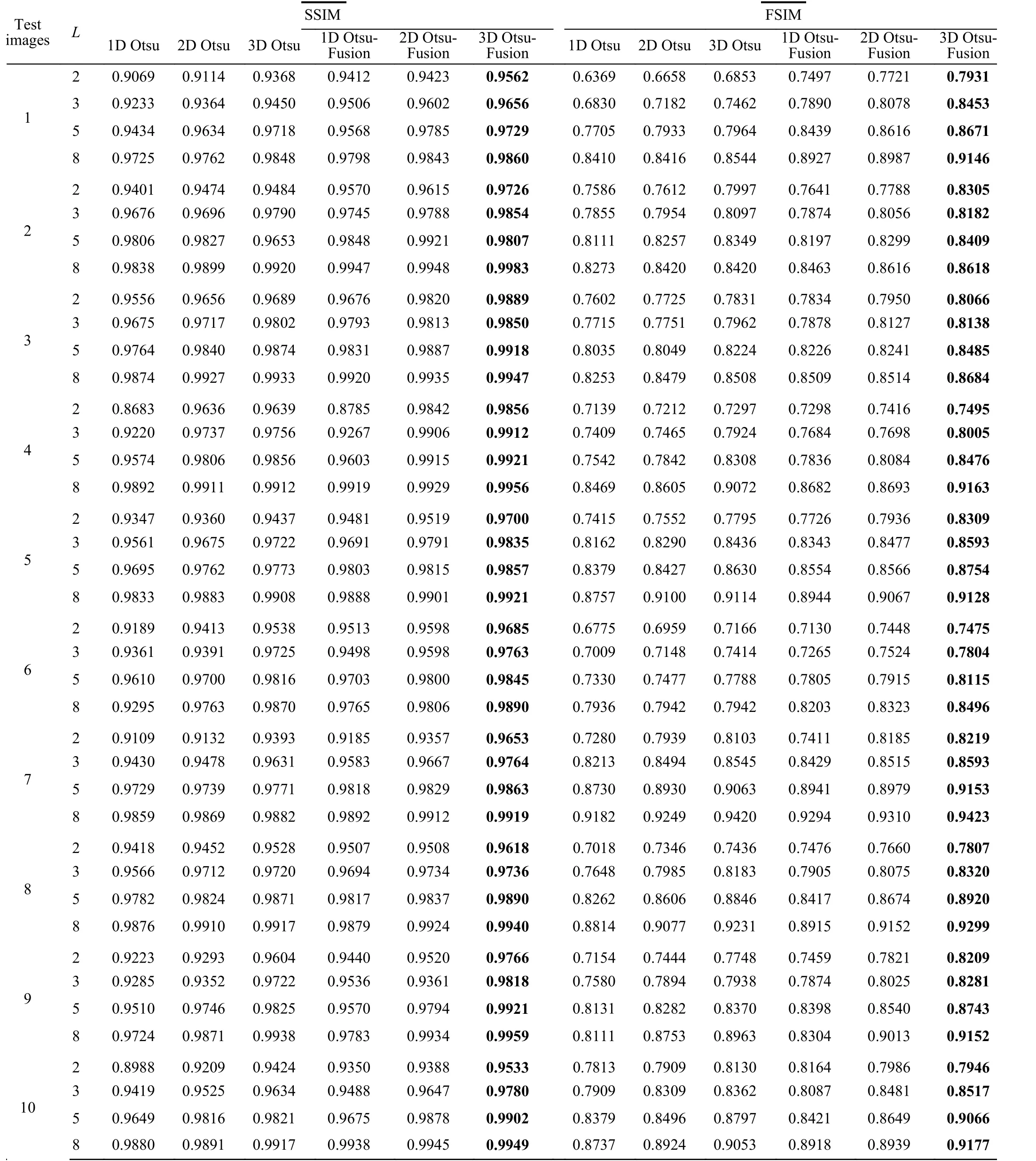

TABLE II COMPARISON OF MSE AND PSNR COMPUTED BY DIFFERENT ALGORITHMS

Table II reports the MSE and PSNR values, and Table III presents the image quality metrics SSIM and FSIM for each Otsu method, both alone and with the proposed fusion scheme. Higher values of SSIM, FSIM and PSNR indicate that the thresholded image represents the original image more faithfully, whereas MSE values should be as low as possible. The experimental values of these metrics reflect the merits of the presented 3D Otsu multilevel thresholding method.
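For reference, the standard definitions of MSE and PSNR, together with the NAE used in the larger experiment, can be sketched as below, assuming 8-bit images (peak value 255) compared pixel by pixel. Since $\mathrm{PSNR} = 10\log_{10}(255^2/\mathrm{MSE})$, a lower MSE directly yields a higher PSNR. The function names are illustrative.

```python
import numpy as np


def mse(original, segmented) -> float:
    """Mean squared error between two images of the same shape."""
    diff = np.asarray(original, dtype=float) - np.asarray(segmented, dtype=float)
    return float(np.mean(diff ** 2))


def psnr(original, segmented, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    e = mse(original, segmented)
    return float("inf") if e == 0 else float(10.0 * np.log10(peak ** 2 / e))


def nae(original, segmented) -> float:
    """Normalized absolute error: sum |original - segmented| / sum |original|."""
    o = np.asarray(original, dtype=float)
    s = np.asarray(segmented, dtype=float)
    return float(np.abs(o - s).sum() / np.abs(o).sum())
```

For example, a uniform error of 10 gray levels gives an MSE of 100 and a PSNR of $10\log_{10}(650.25) \approx 28.13$ dB.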

TABLE III COMPARISON OF SSIM AND FSIM COMPUTED BY DIFFERENT ALGORITHMS

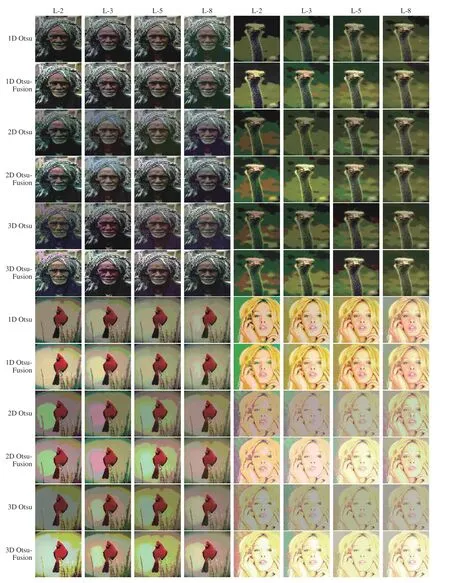

Fig. 4. Comparison of segmented images for the farmer, ostrich, bird and lady images at various levels using different segmentation methods and the proposed algorithm.

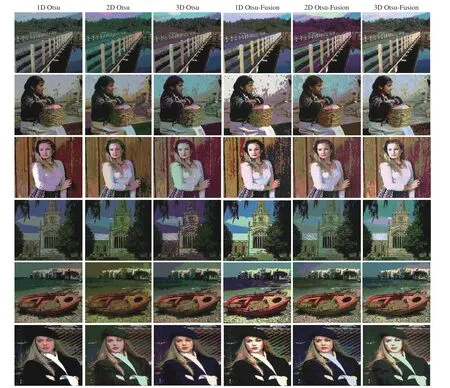

An effective 3D Otsu multilevel approach based on fusion is proposed. Subjective evaluation reveals that the segmented outputs illustrated in Figs. 4-6 yield rich gray shades and a pleasing visual appearance. The gray-level distribution within each class is well preserved as the number of thresholding levels (K-1) increases. An objective assessment of the segmented results obtained by the proposed 3D Otsu-Fusion and the other approaches (1D Otsu, 2D Otsu, 3D Otsu, 1D Otsu-Fusion, and 2D Otsu-Fusion) is presented in Tables I-III, where boldface indicates the best result.

Fig. 5. Comparison of segmented images for the Barbara, monk, baboon and pepper images at various levels using different segmentation methods and the proposed algorithm.

The overall assessment of the 1D, 2D and 3D Otsu-based methods shows that 3D Otsu gives better results than the alternative methods for almost every data point. In addition, the simulation results show that the fusion-based segmentation method retains fine details remarkably well while improving contrast. With the aid of fusion, precision along the edges of the thresholded images also increases.

Fig. 6. Comparison of segmented images for the paint and caps images at various levels using different segmentation methods and the proposed algorithm.

Experimentally, edge enhancement is also observed in some of the fused results, which helps reveal details hidden in the raw data. Thus, the performance indices calculated for the proposed method confirm that the fusion-based 3D Otsu algorithm is superior to the other considered methods in terms of solution quality and robustness.

The proposed method achieves the best scores for ME, entropy, MSE, PSNR, SSIM and FSIM. A segmented image whose entropy is much lower than that of the original indicates a loss of detail. In image segmentation, preserving fine details is important, which means that the entropy of the thresholded image should ideally be close to that of the input image. Table I compares the loss of detail incurred by each method, including the 3D Otsu-Fusion approach applied to fused images.

The results in Figs. 4-6 illustrate the qualitative superiority of the proposed scheme over existing approaches. Segmentation results depend strongly on the objective criterion used; hence, the outputs of the individual approaches differ because they use different objective functions. Figs. 4-6 show the final outputs for all sample images at 2, 3, 5 and 8 threshold levels, from which it can be seen that the presented scheme outperforms the previous methods owing to its preservation of local contrast.

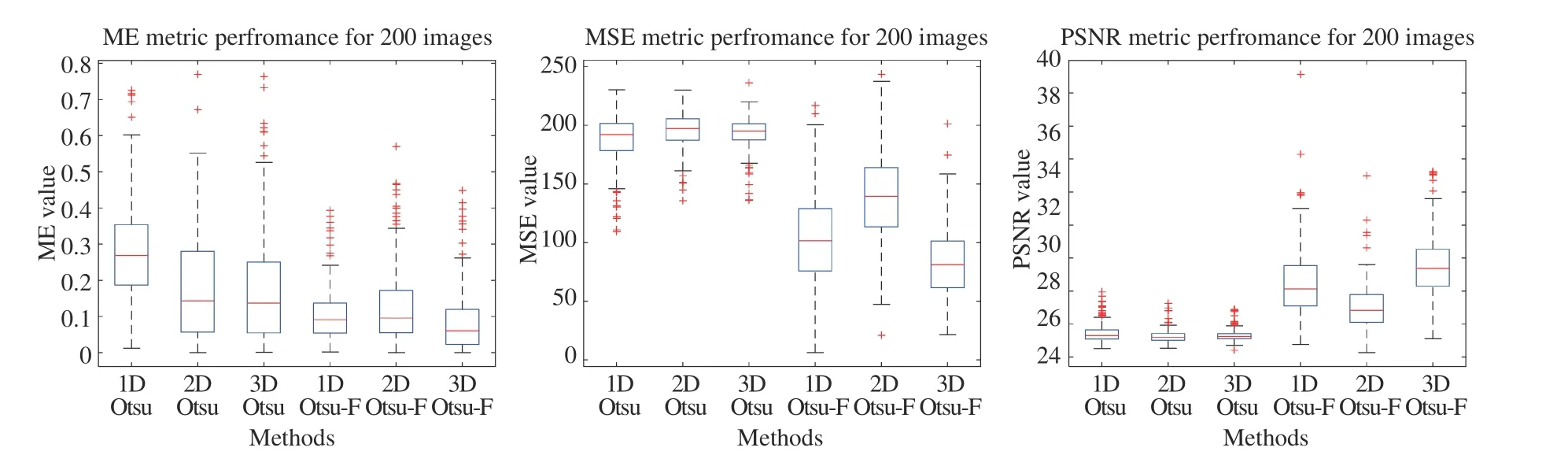

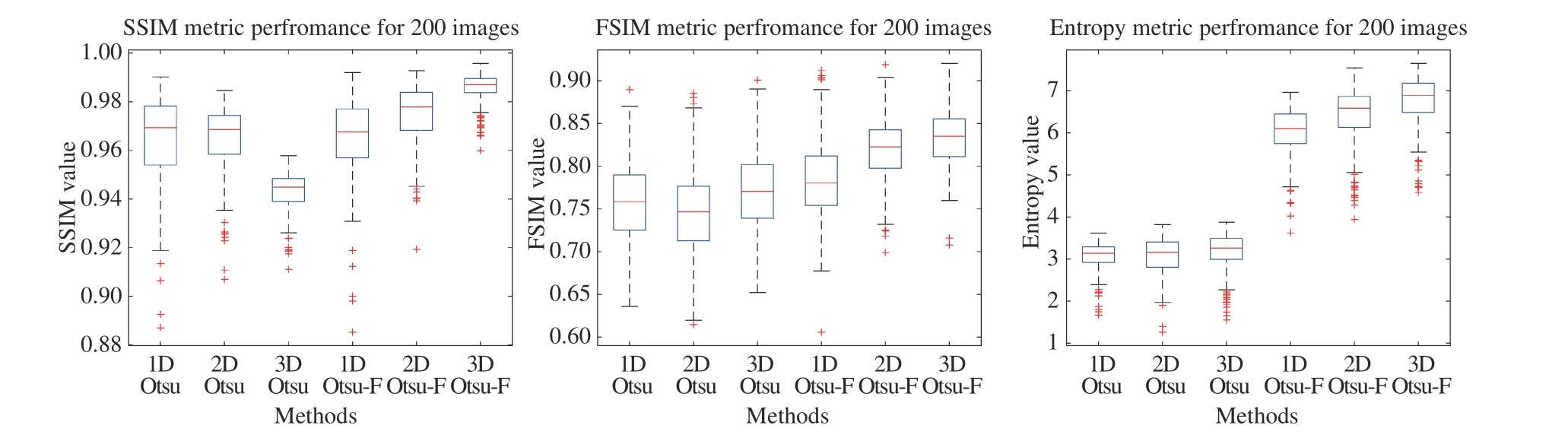

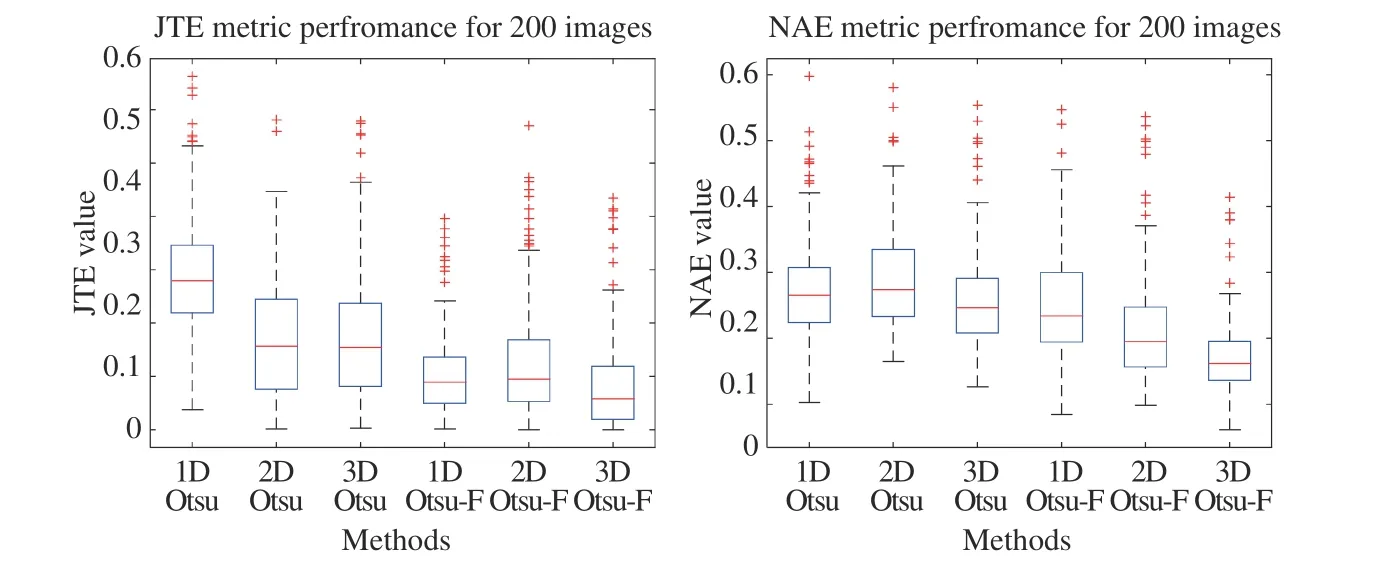

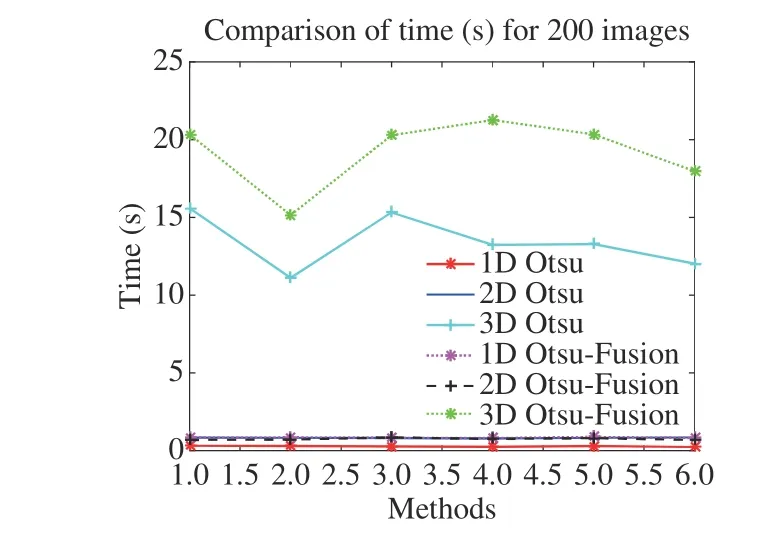

Beyond the limited number of sample images, more extensive experiments have been performed on 200 diverse images from the Berkeley benchmark. The performance metric values computed for these 200 images are presented as box plots. Results for six additional Berkeley images are illustrated in Fig. 7 for 1D Otsu, 2D Otsu, 3D Otsu, 1D Otsu-Fusion, 2D Otsu-Fusion and 3D Otsu-Fusion. Figs. 8-10 show that the presented approach (3D Otsu-Fusion) yields better ME, MSE, PSNR, SSIM, FSIM, entropy, JTE and NAE scores than 1D Otsu, 2D Otsu, 3D Otsu, 1D Otsu-Fusion and 2D Otsu-Fusion. Fig. 11 depicts the computational time of each method.

However, the proposed method takes more processing time than the other methods because of the nature of 3D Otsu. The average performance values for the ME, MSE, PSNR, SSIM, FSIM, entropy, JTE and NAE metrics on the Berkeley segmentation data set are reported in Table IV. This analysis shows that the proposed 3D Otsu-Fusion method produces the best metric values in every case on this larger data set.

These promising segmentation results encourage future work. The proposed method significantly increases segmentation accuracy without disturbing the actual color and information of the input data. Therefore, it can be applied in several imaging fields such as pattern recognition, pedestrian detection, surveillance, medical diagnosis and industrial applications. The proposed 3D Otsu implementation is not yet optimized, and there is room for further improvement to obtain better and more accurate results.

V. CONCLUSION

Fig. 7. Visual comparison of 5-level segmentation results from BSDS200 dataset using 1D Otsu, 2D Otsu, 3D Otsu, 1D Otsu-Fusion, 2D Otsu-Fusion and 3D Otsu-Fusion respectively.

Fig. 8. Statistics-box plot for ME, MSE and PSNR metric score for 200 images for different methods.

In this paper, a novel hybrid scheme combining fusion and 3D Otsu has been proposed for efficient multilevel color image segmentation. The approach is inspired by the image fusion mechanism previously exploited for image enhancement. The fusion step combines the initial segmented output with the input data to retain additional detail in the final thresholded image. The technique has been applied to several segmentation procedures, and their outputs have been enriched with the help of fusion. The simulation results show that fusion-based multilevel thresholding is a more straightforward process than the existing segmentation approaches. The proposed scheme has been evaluated through ME, entropy, MSE, PSNR, SSIM and FSIM scores, which measure the agreement between the original and the segmented images. The quantitative results reveal that the 3D Otsu-Fusion scheme yields better-quality color segmented images.

Fig. 9. Statistics-box plot for SSIM, FSIM and Entropy metric score for 200 images for different methods.

Fig. 10. Statistics-box plot for JTE and NAE metric score for 200 images for different methods.

Fig. 11. Comparison of time for each method.

TABLE IV COMPARISON OF MEDIAN VALUE FOR EACH METHOD (CALCULATED OVER 200 IMAGES FROM BSDS 200 DATASET)

IEEE/CAA Journal of Automatica Sinica, 2020, No. 1
