What Does ChatGPT Say: The DAO from Algorithmic Intelligence to Linguistic Intelligence

By Fei-Yue Wang, Qinghai Miao, Xuan Li, Xingxia Wang, Yilun Lin

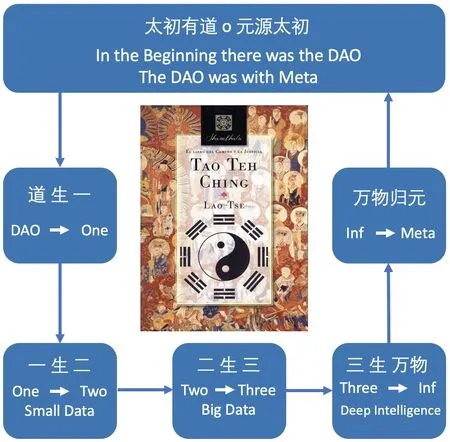

The well-known ancient Chinese philosopher Lao Tzu (老子) or Laozi (6th~4th century BC, during the Spring and Autumn period) started his classic Tao Teh Ching 《道德經》 or Dao De Jing (see Fig. 1) with six Chinese characters: “道 (Dao) 可 (Ke) 道 (Dao) 非 (Fei) 常 (Chang) 道 (Dao)”, which has been traditionally interpreted as “道可道, 非常道” or “The Dao that can be spoken is not the eternal Dao”. However, modern archaeological discoveries in 1973 and 1993 at Changsha, Hunan, and Jingmen, Hubei, China, have respectively indicated a new, yet more natural and simple interpretation: “道, 可道, 非常道”, or “The Dao, The Speakable Dao, The Eternal Dao”.

Note that in Chinese, the word Dao has the same meaning as “Meta” in Metaverse and is identical to the Chinese words for road and journey.

In terms of Artificial Intelligence research and development, the new interpretation can be considered as a “Generalized Gödel Theorem” [1–3], i.e., there are three levels of intelligence: 1) the Dao, or Algorithmic Intelligence (AI); 2) the Speakable Dao, or Linguistic Intelligence (LI); 3) the Eternal Dao, or Imaginative Intelligence (II), and they are bounded by the following relationship,

Fig. 1. The DAO to Intelligence = AI + LI + II.

As illustrated in “Where Does AlphaGo Go: From Church-Turing Thesis to AlphaGo Thesis and beyond” [4], AlphaGo was a milestone in Algorithmic Intelligence with algorithms for deep, generative, and reinforcement learning. Now, ChatGPT has emerged as the new milestone for Linguistic Intelligence in the form of questions and answers. What does ChatGPT really say? What should we expect for the next milestone in intelligent science and technology? What are their impacts on our life and society?

Based on our previous reports [5, 6] and recent developments in Blockchain and Smart Contracts based DeSci and DAO for decentralized autonomous organizations and operations [7, 8], several workshops have been organized to address those important issues, and the main results are summarized briefly in this perspective.

Historic Perspective

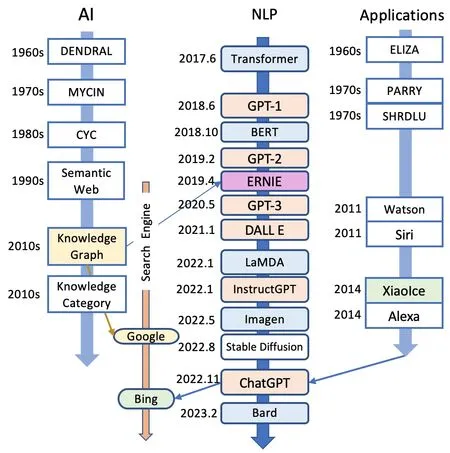

ChatGPT is the result of decades of hard work by generations in AI research and development. Early research can be traced back to Expert Systems, and in recent years it has been driven by breakthroughs in natural language processing (NLP). Fig. 2 illustrates its brief history and related techniques.

Since the 1960s, people have tried to build intelligent systems that act like human experts and help us solve problems in a specific domain. The first attempt, DENDRAL [9], led by Feigenbaum, was a rule-based expert system for chemical analysis. However, it finally faded out together with other expert systems such as MYCIN and XCON. People then tried to find better knowledge representations than rules. In the 1980s, Lenat proposed the idea of encoding human common sense and building it into a knowledge base. This is the well-known CYC [10] project; however, it has not been applied successfully in real-world applications.

Fig. 2. A brief history of ChatGPT and related areas.

In the 1990s, Berners-Lee proposed the Semantic Web [11], trying to make machines on the World Wide Web understand information and connect it through the network. But the Semantic Web didn’t meet expectations either. In 2012, Google released the Knowledge Graph and brought it into practical application through its search engine, which greatly improved the user experience.

Another route is the chatbot, which can be traced back to ELIZA [12] in the 1960s and PARRY in the 1970s. They imitated a psychiatrist and a psychiatric patient, respectively, serving the purposes of psychotherapy and doctor training. SHRDLU [13] was a different framework that integrated methods such as natural language processing, knowledge representation, and planning. It was based on a virtual world of building blocks and communicated with humans through a display to help human players build with blocks. The next 30 years were uneventful, but the accumulation of knowledge bases (graphs) laid the foundation for subsequent leaps. As the scale of knowledge graphs increased, the capabilities of question answering systems began to attract attention. In 2011, IBM Watson [14] defeated the top human players in Jeopardy! In the same year, Siri, as a widely used conversational assistant, entered the lives of ordinary people through the iPhone.

Although Microsoft started a little late, it launched XiaoIce [15] in 2014, demonstrating its capabilities in multiple AI fields. Today, Microsoft has integrated ChatGPT into Bing, replacing the knowledge graph with a pre-trained large model. Through the binding of ChatGPT with Bing, Microsoft is redefining the search engine.

From the semantic network to the knowledge graph, the symbol-based knowledge system has not brought revolutionary applications. In recent years, another technical route, namely the pre-trained large model based on deep networks (especially Transformers), has been making great progress. In 2018, OpenAI released GPT-1. By 2020, the number of GPT-3 parameters had reached 175 billion, and the subsequent GPT-3.5 became the basis of ChatGPT. Almost at the same time, Google’s BERT series continued to expand. Multi-modal pre-trained large models are also developing rapidly. A series of AIGC (AI Generated Content) projects such as DALL·E, Imagen (Video), and Stable Diffusion are vigorously promoting AI development into a new era from a new perspective. This wave of technology will promote and speed up the development and application of digital human techniques.

The Impact

Right now, ChatGPT is one of the biggest stories in tech. This new tool unlocks the creativity lying dormant in an untapped wave of makers who find unexpected ways to showcase their imaginative abilities. For instance, ChatGPT can respond in different styles, and even in different languages. If you didn’t know an AI was behind it, it could easily be mistaken for a chat with a real human. Beyond basic conversations, people have been showcasing how it does their jobs or tasks for them: using it to help with writing articles and academic papers, writing entire job applications, and even helping to write code. What is more, Microsoft successfully integrated OpenAI’s GPT software into the Bing search engine.

While ChatGPT is a great help, it’s worth taking everything with a grain of salt. ChatGPT is “not particularly innovative,” and “nothing revolutionary”, says LeCun, Meta’s chief AI scientist. Coincidentally, ChatGPT has gotten under the skin of many commentators. Among them, apparently, is Elon Musk, the CEO of Twitter and one of the founders of OpenAI, which created ChatGPT. Musk has recently criticized several of the chatbot’s answers. In addition, Stack Overflow has temporarily banned users from sharing answers to coding queries generated by ChatGPT, because the average rate of getting correct answers from ChatGPT is too low. This is all because ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.

The ChatGPT community indicates that supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows. The main reason for this phenomenon is that RLHF, reinforcement learning from human feedback [16], does not work well. ChatGPT is trained using reinforcement learning with humans in the loop, 40 human labelers to be exact. Traditional chatbot research views this only as a CPS (cyber-physical systems) problem, that is, a system in which computing (or networking) resources are tightly integrated and coordinated with physical resources. However, the theory of CPS is no longer adequate for dealing with human-in-the-loop systems such as ChatGPT. It is important to integrate human performance and model performance organically and subtly in order to enable humans to provide more effective feedback. In order to make chatbot systems operate efficiently and effectively, we consider it necessary to introduce the concept of CPSS [17] (Cyber-Physical-Social Systems), which incorporates both human and social factors. In the future, any research on AI-based chatbot systems must be conducted with a multidisciplinary approach involving the physical, social, and cognitive sciences, and CPSS will be key to the successful construction and deployment of such systems [18].

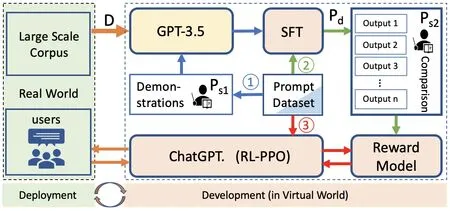

Fig. 3. Principle of ChatGPT from the perspective of Parallel Learning.

ChatGPT and Parallel Intelligence

Since the end of 2022, ChatGPT has shocked almost every corner of the world. So, what exactly is ChatGPT? Why is it so powerful? How was it born?

First of all, ChatGPT is a Transformer-based language model that is pre-trained on a large text corpus and then fine-tuned on specialized data [16]. This enables it to generate coherent and contextually appropriate text and to handle multiple tasks such as text completion, text generation, and conversational AI.

The resources to train ChatGPT include a pre-trained language model (GPT-3.5), a distribution of prompts, and a team of labelers. As shown in Fig. 3, the methodology comprises three steps. Step 1 (blue arrows) is to fine-tune the GPT-3.5 model with demonstration data from human labelers based on sampled prompts. The result is the Supervised Fine-Tuned (SFT) policy. Step 2 (green arrows) starts by feeding a prompt to the SFT policy and getting multiple outputs from it. The outputs are then ranked by human labelers to construct a comparison dataset, which is used to train a Reward Model (RM). Step 3 (red arrows) starts from the supervised policy with a new prompt, followed by a reinforcement learning process with rewards from the RM trained in Step 2. Steps 2 and 3 are iterated continuously to get better policies.
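To make Step 2 concrete, the following is a minimal sketch of how one human ranking can be turned into comparison pairs and scored with a pairwise ranking loss of the kind described in [16]. It is an illustration under stated assumptions, not the actual training code: the function names `comparison_pairs`, `pairwise_ranking_loss`, and `length_reward` are hypothetical, and the toy reward simply scores an output by its length instead of using a neural network.

```python
import math
from itertools import combinations


def comparison_pairs(ranked_outputs):
    """Turn one human ranking (best first) into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first element of each pair
    # is always the output that the labeler ranked higher.
    return list(combinations(ranked_outputs, 2))


def pairwise_ranking_loss(reward_fn, prompt, ranked_outputs):
    """Average of -log(sigmoid(r(preferred) - r(rejected))) over all pairs."""
    pairs = comparison_pairs(ranked_outputs)
    total = 0.0
    for preferred, rejected in pairs:
        margin = reward_fn(prompt, preferred) - reward_fn(prompt, rejected)
        # log(1 + exp(-margin)) is the same quantity as -log(sigmoid(margin)).
        total += math.log1p(math.exp(-margin))
    return total / len(pairs)


def length_reward(prompt, output):
    """Hypothetical stand-in for a learned Reward Model: longer scores higher."""
    return 0.1 * len(output)


# One prompt with three sampled outputs, ranked best-first by a labeler.
ranked = ["a detailed, correct answer", "a short answer", "off-topic"]
loss = pairwise_ranking_loss(length_reward, "example prompt", ranked)
```

A reward function that agrees with the labeler's ranking yields a loss below log 2, while one that cannot distinguish the outputs yields exactly log 2; training the RM amounts to adjusting its parameters to push this loss down over the whole comparison dataset.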

From the perspective of Parallel Learning and Parallel Intelligence [19–26], the ChatGPT methodology is a double-loop pipeline with one descriptive learning process, one predictive learning process, and two prescriptive learning processes. As shown in Fig. 3, the real world, where a huge text corpus is collected, and the artificial world, where demonstration data are generated to train a policy with RLHF, run in parallel. The descriptive learning (D in Fig. 3) is the mapping from the real world to the artificial world, and the result is the pre-trained language model GPT-3.5, which can be regarded as an implicit knowledge base about the world. Although GPT-3.5 is powerful, it needs guidelines to perform in the way human users want, which is why the three steps introduced above are taken. Step 1 can be seen as the first prescriptive learning (Ps1) process, in which the policy is aligned with human demonstrations. Step 2 starts with predictive learning (Pd) by producing multiple outputs, and ends with prescriptive learning (Ps2) to rank the outputs by incorporating human preference. Step 3 is actually an automated version of Step 2 using reinforcement learning with the Reward Model. Steps 2 and 3 form the inner loop, which iteratively optimizes the policy with increasing comparison data. In addition, the interaction between development and deployment is the outer loop, with feedback from social users (instead of labelers).
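The automated part of the inner loop (Step 3) can be pictured with a toy example: a policy chooses among a fixed set of candidate outputs, a Reward Model assigns each a score, and the policy is nudged toward higher expected reward. The sketch below is only an assumption-laden illustration. It uses exact gradient ascent on the expected reward of a softmax policy, which is a much simpler stand-in for the reinforcement learning algorithm used to train ChatGPT, and the names `softmax`, `expected_reward`, and `optimize_policy` as well as the reward values are hypothetical.

```python
import math


def softmax(logits):
    """Convert policy logits into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_reward(probs, rewards):
    """Expected Reward Model score under the current policy."""
    return sum(p * r for p, r in zip(probs, rewards))


def optimize_policy(rewards, steps=200, lr=0.5):
    """Gradient ascent on expected reward for a softmax policy.

    For a softmax policy, d E[r] / d logit_i = p_i * (r_i - E[r]).
    """
    logits = [0.0] * len(rewards)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        baseline = expected_reward(probs, rewards)
        logits = [
            logit + lr * p * (r - baseline)
            for logit, p, r in zip(logits, probs, rewards)
        ]
    return softmax(logits)


# Hypothetical Reward Model scores for three candidate outputs to one prompt.
rm_scores = [0.1, 1.0, 0.3]
final_policy = optimize_policy(rm_scores)
```

Starting from a uniform policy, the probability mass shifts toward the candidate the Reward Model scores highest. In the double-loop picture, new human comparisons would periodically refresh the Reward Model scores before this optimization is run again.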

The DAO to the Future of Linguistic Intelligence

We can travel back to the future of linguistic intelligence by studying the history of language. Language cannot exist without human collaboration. A plethora of new words and popular memes have emerged in response to the ever-increasing complexity of communication needs. Machine linguistic intelligence, on the other hand, is more of a symbiotic relationship: it relies on the development of the Internet to achieve a highly human-like conversational experience based on the massive absorption of human linguistic information, while lacking the ability to generate new concepts.

To overcome this limitation and empower AI with the same concept creation capabilities as humans, machines must have the same communication requirements as people. The formation of organizations is the primary driver of communication needs. In production environments, IoT (Internet of Things) devices, web crawlers, distributed storage, machine learning tools, and other components designed by architects have long been organized centrally. As the complexity of intelligent systems continues to increase, their design pattern is gradually shifting from single program multiple data (SPMD) to multiple program multiple data (MPMD) [27].

This centralized, predetermined organization is not, however, the most prevalent type of organization in human society. In contrast, the vast majority of human society’s systems, such as political and economic systems, are decentralized and federated. AI systems have not been structured in this manner because their task processes are relatively fixed and arbitrated by third parties. Nonetheless, as tasks become more complex, particularly as they evolve from linguistic to imaginative intelligence, task processes must frequently be customized and allow for human involvement throughout, and it is difficult to find enough arbitrators. In the film industry, for instance, directors must consider integrating the script, shots, and soundtrack, and communicate with the producer in a timely manner throughout the process. With current end-to-end solutions, it is difficult to accomplish such tasks, and it is also challenging to train models using human feedback, as appreciation of such artwork varies widely.

Decentralized autonomous organizations (DAOs) [18, 28, 29] are a promising solution to this issue. Decentralization could facilitate an evolution in the organizational structure of intelligence. Research has discovered that, unlike the current intelligent systems designed by human architects, self-organized intelligent systems can be freely combined in a competitive manner, resulting in a more flexible and efficient architecture [27, 30]. In addition, DAOs contribute to a shift in the interaction between AI and humans. AI can currently only interact with humans as a final product, and participation in the training process requires specialized knowledge. DAOs can provide a simple and diverse incentive structure for this. As we have observed in the financial markets, AI and AI, as well as AI and its human creators, can collaborate for rewards in DAOs in response to demands that vary constantly and at high frequency. In fact, traditional AI research topics such as federated learning [31], model aggregation [32], and multi-agent systems [33] have explored this area, and the DAO community has already adopted numerous concepts. We believe the next step in the development of linguistic intelligence will involve combining DAOs to achieve self-organization and human compatibility.

Experts familiar with large-scale models are aware that ChatGPT lacks revolutionary technical advantages, and there are still many obstacles to commercial deployment. Nonetheless, we are pleased with ChatGPT’s growing popularity, as it will benefit large-scale model research and, hopefully, revolutionize linguistic intelligence research and the coming Intelligent Industries or the Age of Industries 5.0.

ACKNOWLEDGMENT

This work was partially supported by the National Key R&D Program of China (2020YFB2104001) and the National Natural Science Foundation of China (62271485, 61903363, 62203250, U1811463). We thank Drs. Yonglin Tian, Bai Li, Wenbo Zheng, and Wenwen Ding for their participation and discussion in our Distributed/Decentralized Hybrid Workshops (DHW).

IEEE/CAA Journal of Automatica Sinica, 2023, Issue 3
