Target recognition algorithm via kernel sparse representation based on supervised dictionary learning

SUN Dawei, WANG Shicheng, YANG Dongfang, LIU Yuan, LI Yongfei

(Rocket Force University of Engineering, Xi’an 710025, China)

Abstract: To address target classification for highly nonlinear image data, a novel target recognition algorithm via kernel sparse representation based on supervised dictionary learning is proposed under the sparse representation classification framework, in which a covariance descriptor carrying multi-feature information is used as the image descriptor. By introducing the kernel trick, the nonlinear image data become linearly separable in a high-dimensional space. The classification error and the reconstruction error are introduced into the objective function simultaneously, so that the dictionary learned under the supervised dictionary learning framework is more discriminative. Experiments on the UC Merced remote sensing dataset (provided by the University of California, Merced) and a self-captured infrared vehicle dataset show that the average recognition rates of the algorithm on the two datasets reach 89.46% and 93.98%, respectively, which verifies that the proposed algorithm achieves high-precision classification of nonlinearly separable objects.

Key words: object recognition; sparse representation; covariance descriptor; dictionary learning; kernel trick; image classification

Object recognition in computer vision is generally cast as an image classification task. In the past few years, sparse representation has attracted considerable attention for image classification tasks such as face recognition[1], object detection[2], scene classification[3], traffic sign recognition[4], and automatic target recognition[5]. The sparse representation classification (SRC) framework directly uses the training samples as a dictionary and assumes that a query image can be linearly represented by the atoms from its own class. SRC has two phases: sparse coding and classification. Sparse coding is posed as an ℓ1-norm-constrained optimization problem, and the minimal residual error is used for classification. However, SRC uses all training samples as the dictionary, so sparse coding over such a dictionary is time consuming when the training set is large.

To derive a compact dictionary, many dictionary learning (DL) algorithms have been proposed[6-9]. The K-SVD algorithm[7] learns an overcomplete dictionary for sparse representation. A variation of the method added a discriminative reconstruction constraint term and optimized both class discrimination and sparse reconstruction components based on K-SVD[8]. These methods do not directly use the label information in their optimization models, so they are called unsupervised dictionary learning methods. They emphasize signal representation and were not originally intended for classification tasks. Later, supervised dictionary learning methods were proposed. Discriminative K-SVD (D-KSVD)[9] is one well-known supervised dictionary learning method: it extends K-SVD by incorporating a classification error term into the objective function, and a linear classifier is used to predict the label of the query image.

Classification based on supervised dictionary learning performs well for linearly separable data. Nevertheless, real datasets are usually highly nonlinear. Gao et al.[10] proposed kernel sparse representation classification (KSRC) for nonlinear classification. The kernel trick is used to map the nonlinear features into a high-dimensional space in which the features are linearly separable. In this paper, a kernel sparse representation classifier is proposed for object recognition. Unlike [10], which classifies by reconstruction error, it emphasizes signal classification rather than signal representation.
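As a minimal sketch of how sparse coding can be carried out in a kernel-induced feature space, the example below expresses the reconstruction error with kernel evaluations only, using the identity ||φ(y) − Φa||² = k(y, y) − 2aᵀk_y + aᵀKa. The Gaussian kernel, the iterative soft-thresholding (ISTA) solver, the parameter values and all function names are assumptions chosen for illustration; they are not taken from [10] or from the algorithm proposed in this paper.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """Gaussian kernel between the columns of A and the columns of B (assumed kernel)."""
    sq = (A**2).sum(0)[:, None] + (B**2).sum(0)[None, :] - 2 * A.T @ B
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kernel_sparse_code(K, k_y, lam=0.01, n_iter=500):
    """ISTA on 0.5*a^T K a - a^T k_y + lam*||a||_1 (hypothetical solver choice)."""
    L = np.linalg.eigvalsh(K)[-1]          # Lipschitz constant of the smooth part
    a = np.zeros(K.shape[0])
    for _ in range(n_iter):
        z = a - (K @ a - k_y) / L          # gradient step
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft threshold
    return a

def feature_space_residual(K, k_y, k_yy, a):
    """||phi(y) - Phi a||^2 written with kernel evaluations only."""
    return k_yy - 2 * a @ k_y + a @ K @ a

rng = np.random.default_rng(0)
X = rng.standard_normal((2, 30))           # 30 training samples as columns
y = rng.standard_normal(2)                 # one query sample
K = rbf_kernel(X, X)                       # Gram matrix of the training samples
k_y = rbf_kernel(X, y[:, None])[:, 0]      # kernel vector between samples and query
a = kernel_sparse_code(K, k_y)
print(feature_space_residual(K, k_y, 1.0, a))  # k(y, y) = 1 for the Gaussian kernel
```

Restricting `a`, `k_y` and `K` to the entries of one class gives a class-wise residual, which is how a reconstruction-error classifier would pick the label in the feature space.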

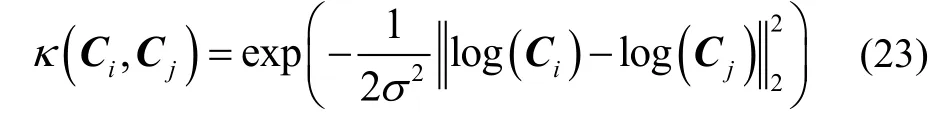

Furthermore, a covariance descriptor is used for image description. The covariance descriptor was proposed[11] for texture classification and matching. It has several advantages: it fuses multiple features; it is low dimensional compared with other region descriptors; and it is scale and illumination invariant. The covariance descriptor lies on a Riemannian manifold, so it cannot be used directly with machine learning methods designed for Euclidean spaces. Because covariance matrices are symmetric positive definite (SPD), the log-Euclidean metric is used to approximate the distance between covariance descriptors[12-13].

To overcome the drawbacks of the SRC framework and of unsupervised dictionary learning methods, a supervised dictionary learning based kernel sparse representation classification algorithm is proposed for object recognition. The covariance descriptor is used for image description, and a linear classification error term is incorporated into the objective function. This paper is organized as follows. Section 1 reviews related work on covariance descriptors and on image classification based on kernel sparse representation. Section 2 describes the methodology of the proposed algorithm. Section 3 reports experiments on different databases that validate the proposed method. Section 4 gives the conclusions.

1 Related works

1.1 Covariance descriptors for images

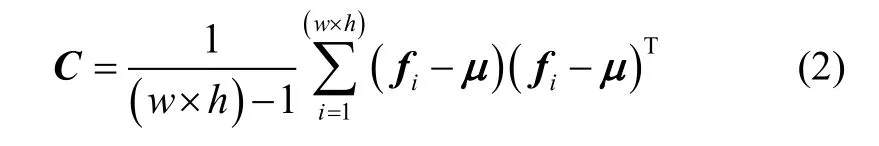

To illustrate the concept of covariance region descriptors, consider a simple example with a single image. To extract the covariance descriptor of the image, a d-dimensional feature vector is first designed using Gabor features with 5 scales in 4 directions. Each pixel of the image is thus characterized by a column vector as follows:

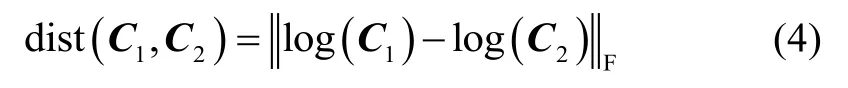
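The construction of a covariance descriptor from per-pixel feature vectors can be sketched as follows. This is only an illustration under stated assumptions: instead of the Gabor features used in the paper, it uses a hypothetical 5-dimensional feature (pixel coordinates, intensity and absolute gradients), and the small ridge term `eps` is added here only to keep the matrix strictly positive definite. The function name is likewise hypothetical.

```python
import numpy as np

def covariance_descriptor(image, eps=1e-6):
    """Return the d x d covariance matrix of the per-pixel feature vectors."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    ys, xs = np.mgrid[0:h, 0:w]            # pixel coordinates
    gy, gx = np.gradient(img)              # derivatives along rows and columns
    # Hypothetical feature vector per pixel: [x, y, I, |Ix|, |Iy|]
    feats = np.stack([xs, ys, img, np.abs(gx), np.abs(gy)], axis=-1)
    feats = feats.reshape(-1, 5)           # one row per pixel
    cov = np.cov(feats, rowvar=False)      # unbiased sample covariance, 5 x 5
    return cov + eps * np.eye(cov.shape[0])

rng = np.random.default_rng(0)
C = covariance_descriptor(rng.random((32, 32)))
print(C.shape)
```

Whatever the image size, the descriptor stays d × d, which is the low-dimensionality advantage mentioned in the introduction.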

The covariance descriptor is an SPD matrix that lies on a Riemannian manifold. The distance between covariance descriptors can be calculated with the log-Euclidean metric, as in [12].
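For reference, the log-Euclidean distance between two SPD matrices is commonly written as the Frobenius norm of the difference of their matrix logarithms, d(C1, C2) = ||log(C1) − log(C2)||_F. The sketch below assumes this standard form, since the equation itself is not reproduced in this text, and computes the matrix logarithm through an eigendecomposition. The function names are hypothetical.

```python
import numpy as np

def spd_log(C):
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    w, U = np.linalg.eigh(C)               # C = U diag(w) U^T with w > 0
    return (U * np.log(w)) @ U.T           # U diag(log w) U^T

def log_euclidean_distance(C1, C2):
    """Frobenius norm of log(C1) - log(C2)."""
    return np.linalg.norm(spd_log(C1) - spd_log(C2), ord="fro")

A = np.array([[2.0, 0.5], [0.5, 1.0]])
B = np.array([[1.0, 0.2], [0.2, 3.0]])
print(log_euclidean_distance(A, B))
```

Because the logarithm maps SPD matrices into a vector space, ordinary Euclidean tools (and kernels built on Euclidean distances) can be applied to the mapped descriptors.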

1.2 Sparse representation classification

SRC[1] assumes that a query image lies in the space spanned by the training samples and can be written as a linear combination of the training samples from the same object class. The original d-dimensional training samples from all object classes are collected into a single matrix, in which the i-th block holds the training samples from class i. SRC uses this matrix directly as the dictionary. For a query (test) sample from the i-th class, the SRC framework can be described as follows:

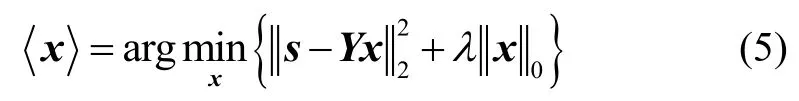

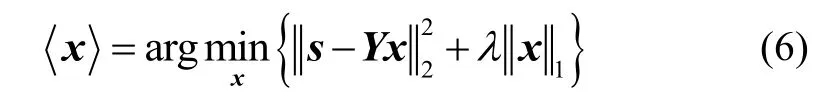

First, carry out sparse coding by ℓ1-norm minimization:

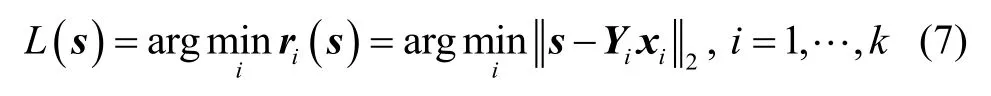

Second, classify by minimizing the reconstruction error:

SRC utilizes all training samples as a dictionary. To represent the test sample correctly, a large training set is needed. Therefore, DL methods are proposed to learn a compact dictionary.
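The two SRC phases described above can be sketched in a few lines. This is a toy illustration, not the implementation used in the experiments: the ℓ1 problem is solved in its penalized (lasso) form with a plain iterative soft-thresholding loop, and the regularization weight `lam`, the function names and the synthetic data (two classes living in orthogonal subspaces) are all assumptions made for the example.

```python
import numpy as np

def ista_l1(D, y, lam=0.01, n_iter=500):
    """Minimise 0.5*||y - D a||_2^2 + lam*||a||_1 by iterative soft thresholding."""
    L = np.linalg.norm(D, 2) ** 2          # Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = a - D.T @ (D @ a - y) / L      # gradient step on the quadratic term
        a = np.sign(z) * np.maximum(np.abs(z) - lam / L, 0.0)  # soft threshold
    return a

def src_predict(D, labels, y, lam=0.01):
    """Sparse-code y over all training samples, then pick the class whose
    atoms alone give the smallest reconstruction residual."""
    a = ista_l1(D, y, lam)
    classes = np.unique(labels)
    residuals = [np.linalg.norm(y - D[:, labels == c] @ a[labels == c])
                 for c in classes]
    return classes[int(np.argmin(residuals))]

rng = np.random.default_rng(0)
# Toy data: class 0 lives in the first three coordinates, class 1 in the last three.
A0 = np.vstack([rng.standard_normal((3, 8)), np.zeros((3, 8))])
A1 = np.vstack([np.zeros((3, 8)), rng.standard_normal((3, 8))])
D = np.hstack([A0, A1])
D /= np.linalg.norm(D, axis=0)             # unit-norm atoms
labels = np.array([0] * 8 + [1] * 8)
y = np.concatenate([np.zeros(3), rng.standard_normal(3)])  # query from class 1
print(src_predict(D, labels, y))
```

Every sparse coding call works on the full training matrix, so the cost grows with the number of training samples, which is the motivation for learning a compact dictionary.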

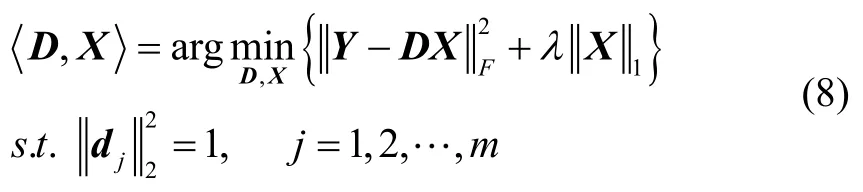

1.3 Dictionary learning model

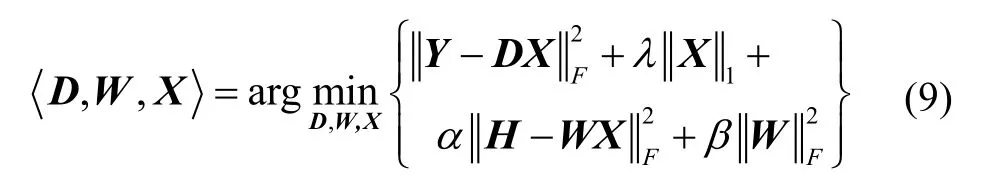

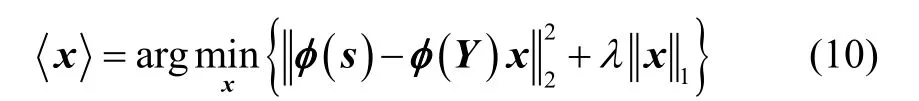

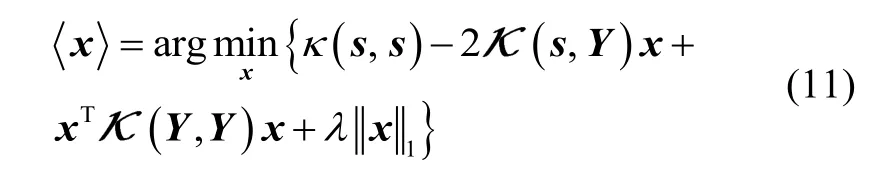

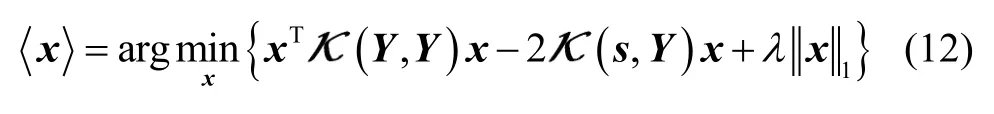

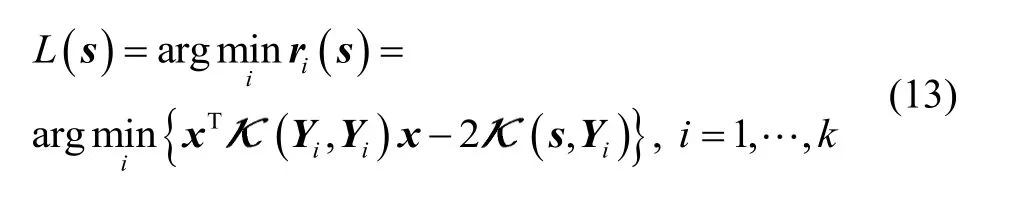

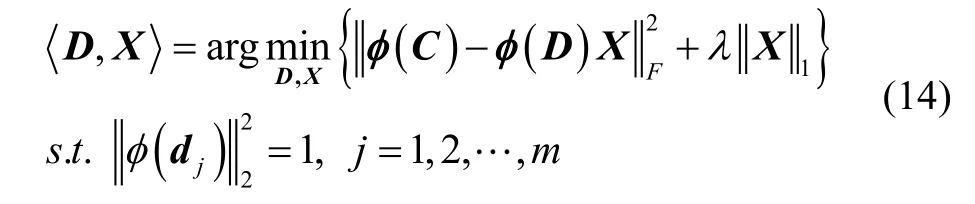

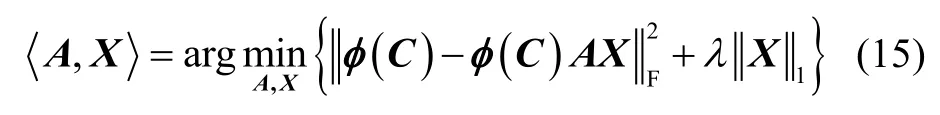

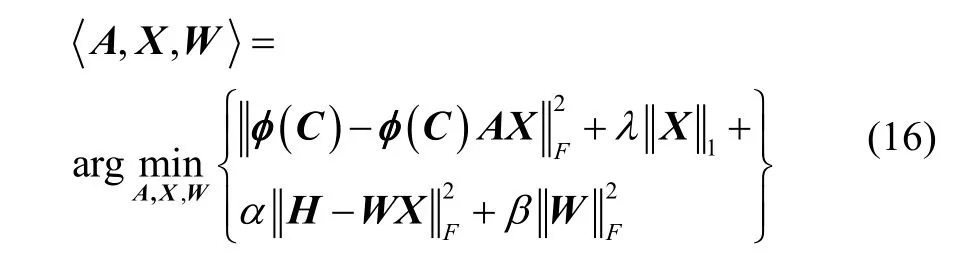

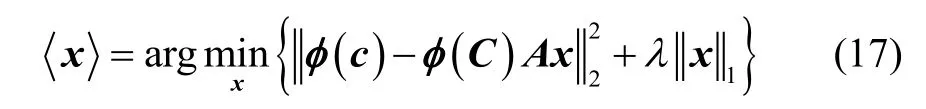

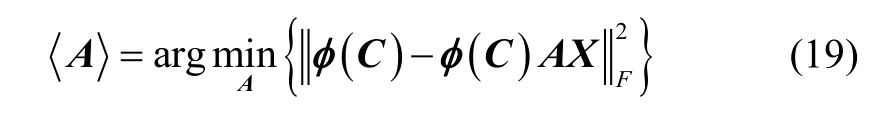

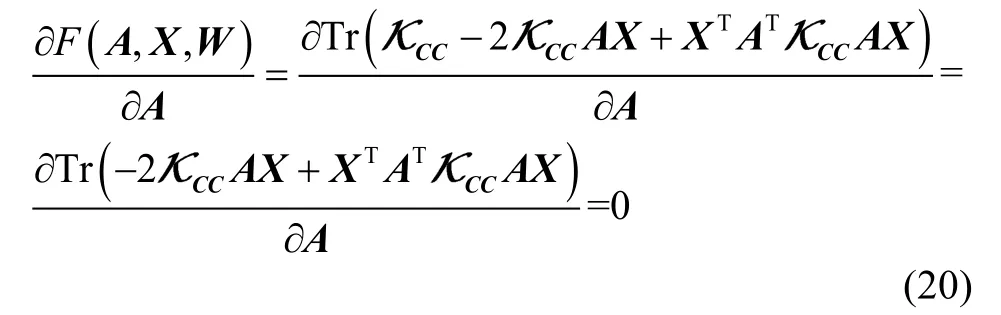

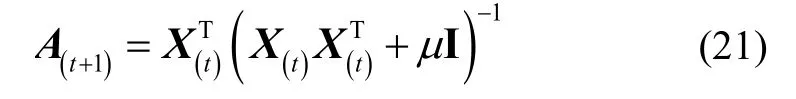

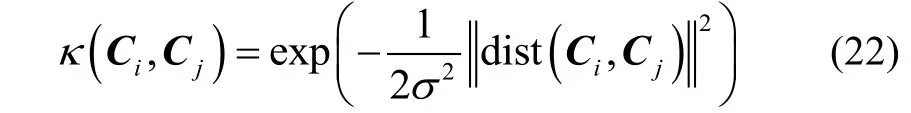

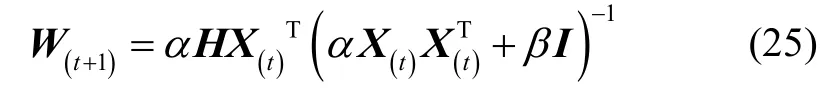

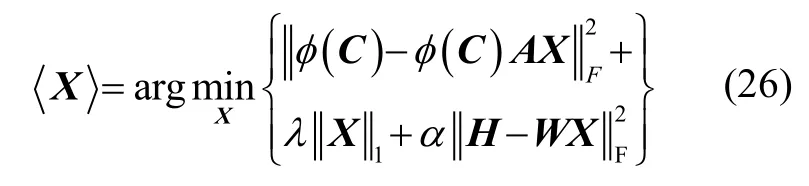

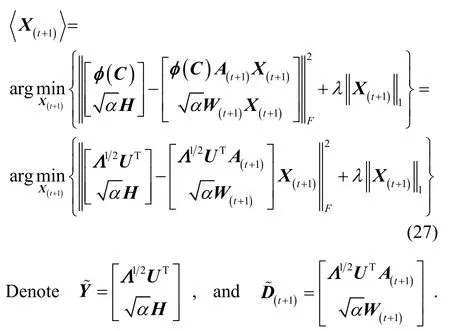

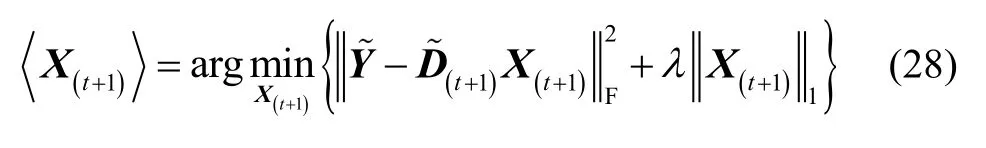

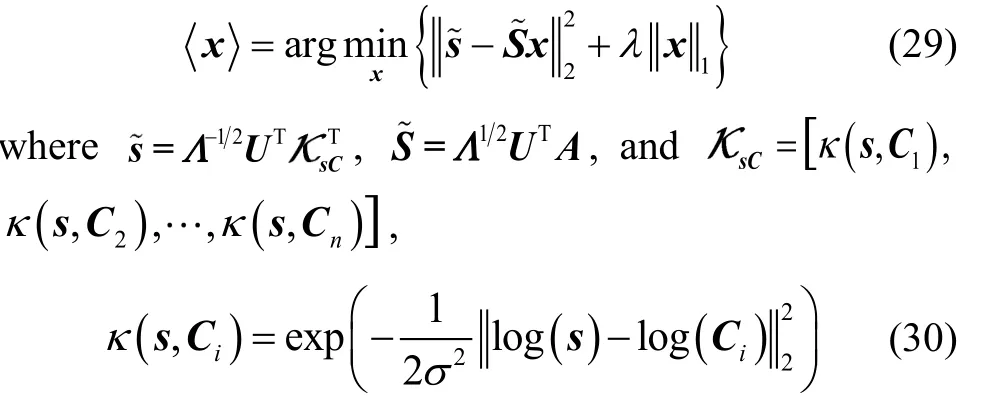

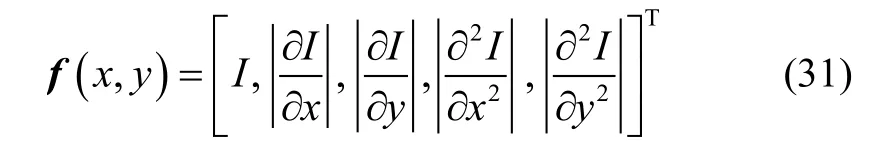

Unlike the dictionary mentioned above, DL learns a corresponding compact dictionary whose m columns are referred to as atoms.

1.4 Kernel sparse representation classification

The SRC framework is suitable for linear classification. However, data are usually nonlinear in practice. The kernel trick maps the data from a low-dimensional space into a high-dimensional space where they can be classified linearly. The high-dimensional transformation of SRC is then formulated as:

2 Methodology of the proposed algorithm

In this section, a supervised dictionary learning based kernel sparse representation classification algorithm for object recognition using the covariance descriptor is proposed. The innovations of the proposed method include three aspects: the covariance descriptor is used for feature representation to fuse multiple features; the kernel trick is incorporated to handle data that are not linearly separable; and supervised dictionary learning is used to learn a compact dictionary while a classifier is trained simultaneously.

2.1 Supervised dictionary learning based kernel sparse representation classification modeling

To make the dictionary more discriminative, the classification error term is incorporated into the objective function. The supervised dictionary learning based kernel sparse representation classification model can be written as follows:

The procedure above covers dictionary and classifier learning. To classify a test sample, sparse coding can be represented as:

Then, the label vector of the test sample can be obtained as follows:

The index of the largest entry of the label vector is the label of the test sample. Ultimately, the object recognition problem is transformed into optimizing the objective functions Eq. (16) and Eq. (17).

2.2 Optimization procedure

There are three variable matrices in the objective function Eq. (16). The objective function is convex in any one of them when the other two are fixed. Hence, an alternating iterative optimization algorithm is used to compute the variables. Here, the t-th and (t+1)-th iterations are denoted by the subscripts (t) and (t+1), respectively. Then Eq. (26) can be rewritten as:

This is a standard sparse representation problem, and a sparse solver such as SPAMS[18] can be employed to solve Eq. (28).

The optimization procedure above is the dictionary learning and classifier learning process. The classification process also consists of two steps: kernel sparse coding and classification. Given the covariance descriptor of a test sample, the kernel sparse coding process is as follows:

Then, the label of the test sample can be obtained by Eq. (18). The procedure of the proposed algorithm is summarized in Table 1.

Tab.1 The flow of the proposed algorithm

3 Experimental results and analysis

In this section, the proposed method is evaluated in object recognition experiments on the UC Merced remote-sensing image dataset[19] and the Infrared Vehicles dataset[20]. All experiments were run in Matlab R2014a on a PC with an Intel Core i5 2.30 GHz CPU and 4 GB RAM.

3.1 UC Merced dataset and preprocessing

The color images of the UC Merced database[19] were extracted from the USGS National Map Urban Area Imagery. Each image is 256×256 pixels. The geographic image database contains 21 scene categories with 100 images per class. The 21 classes shown in Fig.1 are, in sequence, agricultural, airplane, baseball diamond, beach, buildings, chaparral, dense residential, forest, freeway, golf course, harbor, intersection, medium residential, mobile home park, overpass, parking lot, river, runway, sparse residential, storage tanks and tennis court.

Fig.1 Samples from the UC Merced database with 21 classes

The dataset contains 2100 samples in total for the 21 classes, which is not enough for machine learning. The images are taken from a vertical view and are rotation invariant, so every image is rotated by 90° and 180° to expand the dataset, giving 300 samples per class after the expansion. To reduce the computing cost, the color images are first converted to gray images and then resized to 64×64 pixels. At each pixel of an image, a 5-dimensional feature vector is computed:

3.2 Parameters selection

The proposed algorithm is affected by six parameters. Each parameter is investigated by fixing the others. For the experiments with the UC Merced dataset, the parameters are chosen as follows.

Fig.2 Classification accuracy rate versus dictionary size

To determine the dictionary size, it is set to different values. The results are shown in Fig.2. When the dictionary size is 105, the recognition accuracy reaches 90.32%. Next, one of the parameters is fixed and the other parameters are investigated. The results are shown in Fig.3. The final parameter values are selected from these results.

Fig.3 Evaluation of the effect on the recognition rate for parameters

3.3 Experiments with UC Merced dataset

To validate the proposed method, it is compared with SRC[1] and KSRC[10]. With a similar experimental setup, the experiments were repeated for ten groups. The classification accuracy rates of the three methods are shown in Table 2. SRC and KSRC are both unsupervised classification algorithms; the difference between them is that SRC uses the dataset in the original space while KSRC transforms the dataset to a high-dimensional space. The dictionary size used for SRC and KSRC is 5040, while a compact dictionary with 105 atoms is used for the proposed method.

Tab.2 The classification accuracy rates (%) of different methods with UC Merced dataset

Table 2 shows that KSRC outperforms SRC, because the kernel transformation makes the dataset more linearly separable. The mean recognition rate of the proposed method reaches 89.46%, the highest of the three methods. In the proposed method, kernel transformation and supervised dictionary learning are used simultaneously. To further validate the method, the recognition rates of the three methods for every category are shown in Fig.4. The proposed method outperforms the compared methods.

Fig.4 Comparison of recognition rate for every class using SRC, KSRC and the proposed method

Fig.5 shows the confusion matrix of the proposed method in one experiment. The vertical labels are the true labels, while the horizontal labels are the predicted labels. The diagonal elements of the matrix are the recognition rates for every class, while the other elements are the confusion probabilities. The recognition rate of 17 classes is more than 80%, and two categories are recognized perfectly. However, the recognition rate of overpass is less than 70%. The confusion matrix reveals some frequently confused pairs, for example dense residential/intersection, baseball diamond/golf course, beach/river, and runway/overpass. These pairs are difficult even for human eyes to distinguish.

3.4 Experiments with Infrared Vehicles dataset

In this subsection, another experiment is designed to further validate the proposed method for object recognition with the Infrared Vehicles dataset[21]. The real infrared image targets are vehicles of seven categories. Samples from this dataset are shown in Fig.6. From left to right, they are electric tricycle, electric bicycle, bus, truck, van, sedan, and three-wheeled car. There are 100 samples per category, and each sample is 160×128 pixels. To reduce the computing cost, the images were resized to 40×32 pixels, and every image was rotated by 90° and 180° to expand the dataset. At each pixel of an image, a 5-dimensional feature vector was computed as shown in Eq. (30).

Fig.6 Samples from the Infrared Vehicles database with 7 classes

The parameter selection method is the same as in the previous experiment. For each category, 80% of the samples were randomly chosen as the training set and the remaining 20% as the testing set. The experiments were repeated for ten groups, and the results are shown in Table 3.

Tab.3 The classification accuracy rates (%) of different methods with Infrared Vehicles dataset

The mean recognition rate of the proposed method reaches 93.98%. Table 3 demonstrates that the proposed method outperforms the compared methods for object recognition.

4 Conclusions

In this paper, a supervised dictionary learning based kernel sparse representation classification algorithm for object recognition using the covariance descriptor was proposed. In this method, the covariance descriptor with multi-feature information is used as the image descriptor; by introducing the kernel trick, nonlinear image data become linearly separable in a high-dimensional space; and the classification error and the reconstruction error are introduced into the objective function simultaneously. Under the framework of supervised dictionary learning, the learned dictionary is more discriminative. The superior performance of the proposed method was shown on the UC Merced remote-sensing dataset and the Infrared Vehicles dataset. The results show that the proposed method is more effective for object recognition. Supervised dictionary learning can learn a compact dictionary and a classifier simultaneously, and the kernel trick makes nonlinear data more linearly separable in a high-dimensional space.