DEEPNOISE: Learning Sensor and Process Noise to Detect Data Integrity Attacks in CPS

Yuan Luo, Long Cheng, Yu Liang, Jianming Fu, Guojun Peng*

1 School of Cyber Science and Engineering, Wuhan University, Wuhan 430072, China

2 Key Laboratory of Aerospace Information Security and Trusted Computing, Ministry of Education, Wuhan 430072, China

3 School of Computing, Clemson University, USA

4 Tencent Technology Shenzhen Company, Shenzhen, China

Abstract: Cyber-physical systems (CPS) have been widely deployed in critical infrastructures and are vulnerable to various attacks. Data integrity attacks, which manipulate sensor measurements and cause control systems to fail, are one of the most prominent threats to CPS. Anomaly detection methods have been proposed to secure CPS. However, existing anomaly detection studies usually either require expert knowledge (e.g., system model-based methods) or lack interpretability (e.g., deep learning-based methods). In this paper, we present DEEPNOISE, an interpretable deep learning-based anomaly detection method for CPS. Specifically, we use sensor and process noise to detect data integrity attacks. Such noise represents the intrinsic characteristics of physical devices and of the production process in CPS. One key enabler is that we use a robust deep autoencoder to automatically extract the noise from measurement data. Further, an LSTM-based detector is designed to inspect the extracted noise and detect anomalies. Because data integrity attacks change noise patterns, DEEPNOISE can identify them as the root cause of anomalies. Evaluated on the SWaT testbed, DEEPNOISE achieves higher accuracy and recall than state-of-the-art model-based and deep learning-based methods. On average, when detecting direct attacks, the precision is 95.47%, the recall is 96.58%, and the F1 score is 95.98%. When detecting stealthy attacks, precision, recall, and F1 scores are between 96% and 99.5%.

Keywords: cyber-physical systems; anomaly detection; data integrity attacks

I. INTRODUCTION

Cyber-physical systems (CPS) have been widely deployed in various applications, such as industrial control systems (ICS), smart grids, and intelligent transportation systems. The CPS market is expected to grow by 9.3% every year from 2019 to 2027 [1]. Critical infrastructures rely on CPS to provide automated and intelligent services to users. With the growing connectivity between cyberspace (e.g., the Internet) and physical space, CPS expose an expanding attack surface. Attacks on CPS can cause not only service outages in critical infrastructures but also the loss of physical equipment and even human lives. For example, the Ukrainian power grid attack caused around 225,000 users to lose electrical power [2]. Malfunctioning robots and safety incidents in ICS [3,4] have endangered the lives of workers and residents.

Meanwhile, one typical yet devastating attack targeting sensor measurement data in CPS, the data integrity attack, has been studied recently [5-9]. Data Integrity Attacks (DIA) aim to manipulate sensor measurements in CPS and can disable controllers; they can be conducted through communication-channel tampering or physical-channel spoofing (see Section III for a detailed definition). For example, false data injection attacks [10] and spoofing attacks [11] can tamper with sensor measurements and cause control systems to send wrong commands. To detect such attacks, an increasing number of anomaly detection methods have been proposed [12,8,13,7,14].

Since interaction with the physical world is what distinguishes CPS from conventional IT systems, the physical properties of CPS have been used to detect attacks [12]. A system model is usually built from physical observations (e.g., sensor measurements and control commands) to determine whether the predicted system state is normal [12], i.e., whether the difference between real sensor values and expected values is within a normal range. For example, Mujeeb et al. [8] used a Kalman filter to extract sensor and process noise to detect data integrity attacks. However, physics-based methods have two major limitations: (1) they require expert knowledge of the CPS (e.g., its architecture) and of its system models, and (2) the models they apply (e.g., the basic Kalman filter) come with restrictive assumptions (e.g., the CPS must be a linear system).

Deep learning-based methods have been proposed to detect anomalies in CPS [13]. Their unsupervised, data-driven strategy reduces the human effort needed to understand the underlying CPS dynamics. Also, the temporal dependence of time-series measurements and the inter-correlations among components, which are essential in CPS, can be captured by different neural models [15] (e.g., Long Short-Term Memory (LSTM) and Convolutional Neural Networks (CNN)). Despite their ability to detect anomalies, the cause of an anomaly (e.g., the attack source and type) remains unknown [13,16]. Users may still not know where anomalies come from, which makes it difficult to adopt appropriate mitigation measures that address the root problem.

Mujeeb et al. [8,17] and Aoudi et al. [7] proposed using sensor and process noise to detect data integrity attacks. Sensor and process noise is defined as the residual between the system states of a CPS and the measurement data from physical sensors. Sensor noise arises from manufacturing imperfections and varies from sensor to sensor. Process noise is produced by the physical production process, e.g., engine vibration. Such noise can serve as a fingerprint of sensors and processes. Data integrity attacks that change sensor measurements, whether through cyber attacks or physical damage, are likely to change the sensor and process noise contained in the measurement data. However, existing noise-based anomaly detection methods mainly rely on system models to extract the noise [12]. Thus, they share the limitations of the physics-based methods discussed above.

In this paper, we propose a novel anomaly detection method, called DEEPNOISE, that detects data integrity attacks (e.g., sensor spoofing attacks) in CPS by extracting sensor and process noise. The key observation is that data integrity attacks eliminate or change the distribution of the sensor and process noise contained in sensor measurements. By extracting and learning the sensor noise during a normal period, DEEPNOISE can detect data integrity attacks when the noise deviates from the learned pattern. To this end, DEEPNOISE automatically extracts noise during the normal operation stage of the CPS through a neural network named the Robust Deep Autoencoder (RDA) [18]. Then, the extracted noise is fed to an LSTM-based [19,20] anomaly detector that learns the temporal dependence of the noise. We also use such a detector to monitor the sensor measurements after noise removal. Finally, if the noise patterns have changed, the anomaly detectors generate large prediction errors and data integrity attacks are identified. Once the root cause (i.e., a data integrity attack) is identified, appropriate countermeasures (e.g., having PLCs check the received sensor measurement data) can be adopted.

In particular, we highlight important differences between DEEPNOISE and other anomaly detection techniques in CPS.

·DEEPNOISE differs from physics-based system-model methods [12] in two ways: (1) it is data-driven, which means users do not need an in-depth understanding of the CPS architecture, system dynamics, or system models; and (2) it is general, which means we make no prior assumptions about the characteristics of the system (e.g., linearity) or the distribution of noise (e.g., Gaussian).

·DEEPNOISE differs from existing deep learning-based methods [13] in two ways: (1) it offers better interpretability in CPS, because sensor and process noise is an essential characteristic of CPS; and (2) it identifies the root cause of an anomaly. We pinpoint that an anomaly is caused by a data integrity attack on a certain sensor within a certain period, so users can take appropriate mitigation measures.

Our contributions are summarized as follows:

·New methodology. We explore extracting sensor and process noise in cyber-physical systems with Robust Deep Autoencoders. Our study shows that this is feasible, which provides a more broadly applicable and less labor-intensive noise extraction method for CPS.

·New techniques. We developed DEEPNOISE to automatically extract the intrinsic sensor and process noise and use it to detect data integrity attacks in CPS. We demonstrate the effectiveness of DEEPNOISE in detecting direct and stealthy attacks on a realistic CPS testbed. The results suggest that DEEPNOISE achieves higher accuracy and recall than state-of-the-art methods.

II. BACKGROUND

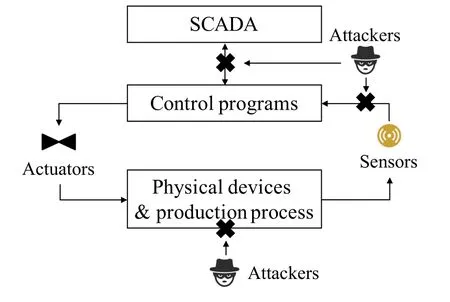

2.1 The CPS Model

As illustrated in Figure 1, we introduce a generic CPS architecture. Control programs, which users monitor through Supervisory Control And Data Acquisition (SCADA) systems, obtain sensor measurement data and send corresponding commands to actuators. Once actuators receive control signals, they carry out certain operations (e.g., turning on a pump). Physical devices take the corresponding actions, and the production process runs to provide services. Sensors are deployed to collect measurement data. Finally, sensor measurements are transmitted to the control programs to help decide the next command. Data integrity attacks aim to manipulate the measurement data to mislead control programs into sending false control signals (details in Section III).

Figure 1. A generic CPS model.

2.2 Autoencoders and LSTMs

Autoencoders consist of encoders and decoders [21]. Typically, an encoder compresses the input layer into a low-dimensional hidden (bottleneck) layer, and a decoder reconstructs the output layer from the bottleneck layer. Deep autoencoders have multiple hidden layers. Since there are few labeled data in CPS, an autoencoder-based anomaly detector is usually trained to reproduce its own input, so its input and output layers have the same dimension (as illustrated in Figure 4); this enables unsupervised learning. Compressing and reconstructing the data also lets the model learn the essential characteristics and fundamental structure of sensor measurement data. If the reconstruction error (i.e., the difference between the autoencoder's reconstruction and the input data) is above a threshold, an anomaly is detected. Also, autoencoders are robust to noise in the training data [22].
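As a rough illustration of the reconstruction-error rule, the sketch below substitutes a linear autoencoder for a deep one: with squared error and a k-dimensional bottleneck, the optimal linear encoder/decoder pair spans the top-k principal components, so it can be fitted with an SVD instead of back-propagation. The function names, the bottleneck size, and the 99th-percentile threshold are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np

def fit_linear_autoencoder(X_train, k):
    """Fit a linear autoencoder (equivalent to PCA) with a k-dimensional bottleneck.

    Returns the training mean and the k x d encoder matrix; its transpose is the decoder.
    """
    mu = X_train.mean(axis=0)
    # Rows of Vt are principal directions, sorted by decreasing singular value.
    _, _, Vt = np.linalg.svd(X_train - mu, full_matrices=False)
    return mu, Vt[:k]

def reconstruction_error(X, mu, W):
    """Per-sample squared error between X and its encode-decode reconstruction."""
    Z = (X - mu) @ W.T          # encode: project onto the bottleneck
    X_hat = Z @ W + mu          # decode: map back to the input space
    return np.sum((X - X_hat) ** 2, axis=1)

rng = np.random.default_rng(0)
# Normal data: 5-dimensional samples lying close to a 2-dimensional subspace.
basis = rng.normal(size=(2, 5))
X_train = rng.normal(size=(500, 2)) @ basis + 0.01 * rng.normal(size=(500, 5))
mu, W = fit_linear_autoencoder(X_train, k=2)

# Assumed threshold: a high percentile of the errors observed on normal data.
threshold = np.percentile(reconstruction_error(X_train, mu, W), 99)

x_normal = rng.normal(size=(1, 2)) @ basis
x_anomalous = x_normal + np.array([[0.0, 0.0, 3.0, 0.0, 0.0]])
print(reconstruction_error(x_normal, mu, W)[0] > threshold)
print(reconstruction_error(x_anomalous, mu, W)[0] > threshold)
```

The normal sample lies in the learned subspace and reconstructs almost perfectly, whereas the perturbed sample leaves the subspace and its error exceeds the threshold.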

Long Short-Term Memory (LSTM) is a type of Recurrent Neural Network (RNN) [19] that has been broadly used in anomaly detection. In CPS time series, an extended period of past measurements affects future data. LSTMs can learn temporal dependencies in time-series data, which makes them especially useful in CPS scenarios. This capability is achieved through a forget gate [23], which selects which past information is carried forward to influence future outputs. Typically, an LSTM-based anomaly detector takes historical data as input and predicts future data values. If the prediction error (i.e., the difference between the value predicted by the LSTM model and the observed value) is above a pre-defined threshold, an anomaly is detected. A time window (a fixed-size data sequence) is usually used to inspect the input data and trigger a detection decision. LSTMs can also process multivariate time-series data and capture high-dimensional features and nonlinear interactions among features [24]. A deep LSTM model stacks several LSTM layers to increase its ability to learn the characteristics of the data.
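The forget-gate mechanism can be made concrete with a single step of a standard LSTM cell written in NumPy. This is a sketch of the textbook recurrence, not DEEPNOISE's trained detector: the weights are random, and the gate ordering and sizes (one input feature, four hidden units) are arbitrary choices. In practice a framework layer, such as the Keras LSTM used in this work, would be trained instead.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the order [input gate, forget gate, cell candidate, output gate].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate cell content
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell state to expose
    c_t = f * c_prev + i * g     # cell state carries long-range memory
    h_t = o * np.tanh(c_t)
    return h_t, c_t

rng = np.random.default_rng(1)
D, H = 1, 4                      # one sensor, four hidden units (illustrative)
W = rng.normal(scale=0.5, size=(4 * H, D))
U = rng.normal(scale=0.5, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for value in [0.2, 0.4, 0.6, 0.8]:   # a short window of past measurements
    h, c = lstm_step(np.array([value]), h, c, W, U, b)
print(h.shape)  # (4,)
```

In an LSTM-based detector, a dense layer would map the final hidden state h to a predicted next value, and the prediction error would then be compared with a threshold.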

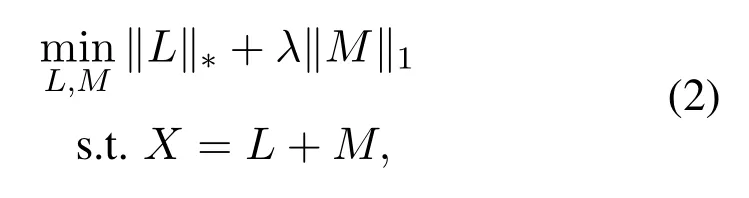

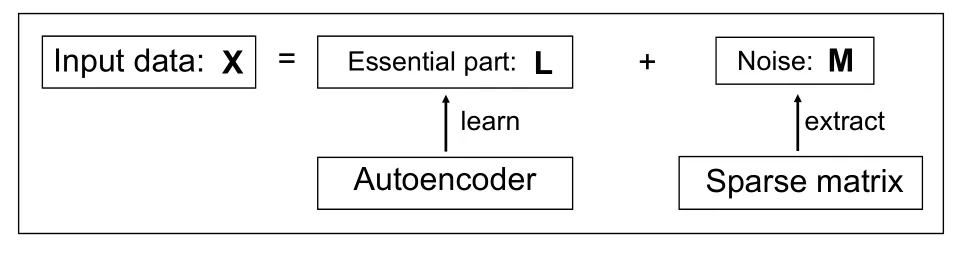

2.3 Robust Deep Autoencoders

The Robust Deep Autoencoder (RDA) [18] is inspired by Robust Principal Component Analysis (RPCA) [25], which is a modification of Principal Component Analysis (PCA) [26]. RPCA divides an input matrix X into a low-rank matrix L and a sparse matrix M, which can be represented as

$$X = L + M \tag{1}$$

M is used to isolate outliers and noise that cannot be represented well by L. Further, this matrix decomposition problem can be solved through a relaxed optimization problem [27]:

$$\min_{L,M} \; \|L\|_* + \lambda \|M\|_1 \quad \text{s.t.} \quad X - L - M = 0 \tag{2}$$

where ‖·‖_* is the nuclear norm, ‖·‖_1 is the ℓ1 norm, and λ controls the trade-off between the two terms. In RPCA, the nuclear norm in Eq. (2) can be viewed as a linear projection tool that maps the input to a low-dimensional representation. RDA replaces the nuclear norm with a deep autoencoder and thus creates a non-linear projection to a low-dimensional representation.
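To make Eq. (2) concrete, the sketch below evaluates the RPCA objective for two candidate splits of a small matrix X that is a rank-1 matrix plus one large corrupted entry. The helper names are hypothetical, and λ = 1/√max(n, w) is the common default from the RPCA literature rather than a value used in this paper.

```python
import numpy as np

def nuclear_norm(A):
    """Sum of singular values: the convex surrogate for rank used in RPCA."""
    return np.linalg.svd(A, compute_uv=False).sum()

def l1_norm(A):
    """Sum of absolute entries: the convex surrogate for sparsity."""
    return np.abs(A).sum()

def rpca_objective(L, M, lam):
    """Objective of Eq. (2) for a candidate split X = L + M."""
    return nuclear_norm(L) + lam * l1_norm(M)

# X is a rank-1 matrix plus a single large sparse corruption.
L_true = np.outer([1.0, 2.0, 3.0], [1.0, 1.0, 1.0])
M_true = np.zeros((3, 3))
M_true[0, 2] = 5.0
X = L_true + M_true

lam = 1.0 / np.sqrt(3)                            # 1 / sqrt(max(n, w)) for a 3 x 3 matrix
good = rpca_objective(L_true, M_true, lam)        # low-rank part + sparse part
naive = rpca_objective(X, np.zeros_like(X), lam)  # force everything into L
print(good < naive)  # True
```

Assigning the corruption to M yields a lower objective than forcing everything into L, which is the behavior RPCA relies on to separate outliers and noise from the low-rank structure.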

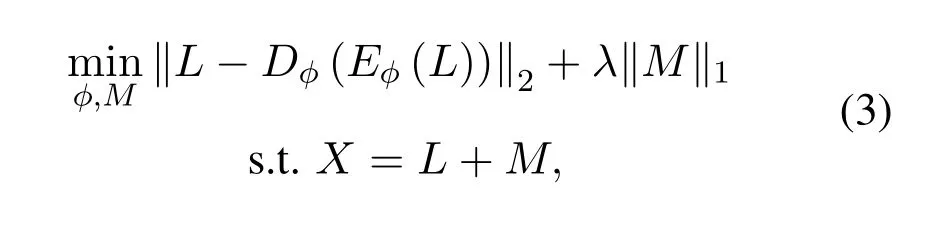

As illustrated in Figure 2, the key idea of RDA is that noise in the input X cannot be represented well by the low-dimensional layers of an autoencoder (since noise is incompressible). Thus, when noise is extracted and isolated into M, the remaining L can represent the essential part (i.e., the system states) of the input X. Similar to Eq. (2), the optimization problem of RDA becomes:

$$\min_{E,\,D,\,M} \; \|L - D(E(L))\|_2 + \lambda \|M\|_1 \quad \text{s.t.} \quad X - L - M = 0$$

Figure 2. The workflow of a Robust Deep Autoencoder.It utilizes an autoencoder to learn the essential part of input data and a sparse matrix to extract noise.

where E(·) is an encoder, D(·) is a decoder, and λ controls how sparse M is.

RDA was first introduced for image-processing tasks, where it was used to train autoencoders on data containing noise and outliers. In this paper, we use RDA to extract the sensor and process noise contained in CPS sensor measurement data.

III. THREAT MODEL

In this work, we consider attackers who aim to cause financial losses (e.g., power outages) or physical damage (e.g., damaging devices such as centrifuges) to a cyber-physical system (e.g., an industrial control system) through data integrity attacks. We assume that anomaly detectors are protected (e.g., running in trusted execution environments) and not compromised. In this section, we first describe the capabilities of attackers. Then, we give a detailed definition of data integrity attacks. Finally, we discuss possible scenarios in which data integrity attacks can be launched.

The ability of attackers. We assume attackers have capabilities similar to those described in existing work [8,17,28,29]. Namely, an attacker can access and manipulate sensor measurement data in the CPS. Even worse, the attacker may also know the system dynamics, and we further assume that attackers know the system architecture. We also assume that attackers can learn sensor and process noise patterns, so they can manipulate data and inject false data that follow these noise patterns, which is stealthier than changing sensor values directly. Note that we do not consider sensor measurement replay attacks, since replayed measurements carry genuine noise and the noise pattern is not changed.

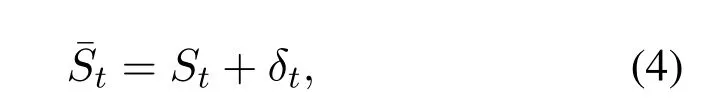

The definition of attacks. In this paper, we aim to detect Data Integrity Attacks (DIA). Unlike control-oriented attacks, which often manipulate or inject false control commands to actuators, DIAs usually change sensor measurement values. Specifically, there are two types of DIAs: direct attacks and stealthy attacks. Typically, a DIA can be written as:

$$\bar{S}_t = S_t + \delta_t$$

where $S_t \in \mathbb{R}^m$ denotes the m-dimensional sensor measurements at time t, $\delta_t$ is the attack value applied to the original measurements, and $\bar{S}_t$ is the manipulated sensor data received by the control programs. Depending on how $\delta_t$ is added, we distinguish direct attacks (DA) and stealthy attacks (SA). We discuss the details of DA and SA in the following.

DA1: Each sensor measurement of $S_t$ is modified to a constant value C, which can be defined as $\bar{S}_t = C$. Attackers can set C according to one of the following scenarios.

·DA1-1. Let $S_a$ be the sensor value when the attack is launched; C can be set as $C \gg S_a$ or $C \ll S_a$. The attack causes sensor measurements to deviate from their original values.

·DA1-2. Let $S_H$ and $S_L$ be the upper and lower bounds of the measurement values; C can be set as $C > S_H$ or $C < S_L$. The attack causes sensor measurements to overrun the operating boundaries of physical components.

·DA1-3. C can be set such that $S_L < C < S_H$ and $C \approx S_a$. The sensor values remain within the measurement boundaries and close to $S_a$. However, since the sensor values are fixed at a constant, the gradually changing pattern of $S_t$ (e.g., gradually increasing sensor values) is eliminated by the attack.

DA2: Instead of directly setting sensor measurements to a constant value, this direct attack gradually changes the sensor value. Let $S_a$ be the sensor value and a the time when the attack is launched. There are two scenarios.

·DA2-1. The sensor measurements can be set to gradually increase until the attack is stopped, which can be defined as $\bar{S}_t = S_a + (t - a) \cdot C$, where C is the changing rate that controls how fast the sensor values increase.

·DA2-2. Similarly, the sensor measurements can be set to gradually decrease until the attack is stopped, which can be defined as $\bar{S}_t = S_a - (t - a) \cdot C$.
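The direct attacks above can be simulated on a single normalized sensor series, for example to test a detector. The following is a minimal sketch under assumed conventions: integer time steps, an attack active on the half-open interval [start, end), and S_a taken as the value at the first attacked step. The function names are hypothetical and are not part of any SWaT tooling.

```python
import numpy as np

def da1_constant(s, start, end, C):
    """DA1: replace the measurements in [start, end) with the constant C."""
    attacked = s.copy()
    attacked[start:end] = C
    return attacked

def da2_ramp(s, start, end, C, increase=True):
    """DA2: ramp from S_a = s[start] at rate C per step (DA2-1 if increase, else DA2-2)."""
    attacked = s.copy()
    steps = np.arange(end - start)            # t - a for every attacked time step
    sign = 1.0 if increase else -1.0
    attacked[start:end] = s[start] + sign * steps * C
    return attacked

s = np.array([0.50, 0.52, 0.49, 0.51, 0.50, 0.48])
print(da1_constant(s, 2, 5, 0.9))             # DA1: frozen at 0.9
print(da2_ramp(s, 2, 5, 0.1))                 # DA2-1: rising by 0.1 per step
print(da2_ramp(s, 2, 5, 0.1, increase=False)) # DA2-2: falling by 0.1 per step
```

Both attacks overwrite the measured values inside the attack window, so any noise the original readings carried there is lost, which is the effect DEEPNOISE is designed to notice.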

DA1 and DA2 are the direct attacks launched in the SWaT testbed [30], which is commonly used in the CPS security research community; our approach is evaluated on this testbed. In addition, we aim to detect the stealthy attacks discussed in [8].

SA: We assume a stealthy attacker knows the system dynamics of the CPS. This attacker can also obtain historical (not newly generated) sensor measurement data, which could be used to learn the noise distribution of the CPS. To evade a noise-based detector, the attacker could inject attack values consisting of noise data instead of the constant values used in direct attacks. However, we do not assume the attacker knows the current running status of the CPS. Thus, although the attacker can inject noise data, the pattern of the injected noise is inconsistent with the current system dynamics; that is, valid noise data is injected at the wrong time. A stealthy attack can be defined as:

$$\bar{S}_t = S_t + \alpha + \beta N_t$$

where $N_t$ are values chosen from past noise sequences that follow the noise distribution of the sensors (the noise pattern), β is a rescaling factor applied to the injected noise, and α is a constant value added to the sensor measurement. Specifically, there are three scenarios depending on the values of α and β.

·SA-1. The attacker injects noise without a constant value, namely α = 0, so the attacked sensor data becomes $\bar{S}_t = S_t + \beta N_t$. This attack changes the variance of the noise pattern.

·SA-2. The attacker injects only a constant value without noise data, namely β = 0, so the attacked sensor data becomes $\bar{S}_t = S_t + \alpha$. This attack does not change the noise pattern. However, as discussed in Section VI, merely adding constant values can be easily detected, so attackers are less likely to use this attack, and we do not consider it in this work. Nevertheless, we use an LSTM-based detector to inspect the attacked values of L.

·SA-3. The attacker injects both noise and constant values, namely α ≠ 0 and β ≠ 0, so the attacked sensor data becomes $\bar{S}_t = S_t + \alpha + \beta N_t$.
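A stealthy attack S̄_t = S_t + α + βN_t can be simulated in the same way. In this sketch, the past noise N_t is approximated by a mean-removed historical window of the same sensor, which is an assumption made for illustration; an attacker following the threat model would instead learn the noise distribution from historical data. The function name and parameter values are hypothetical.

```python
import numpy as np

def stealthy_attack(s, start, end, past_noise, alpha=0.0, beta=1.0):
    """SA: add alpha + beta * N_t to s[start:end], where N_t is replayed past noise."""
    n = end - start
    if len(past_noise) < n:
        raise ValueError("past_noise must cover the whole attack window")
    attacked = s.copy()
    attacked[start:end] = s[start:end] + alpha + beta * past_noise[:n]
    return attacked

rng = np.random.default_rng(42)
s = 0.5 + 0.01 * rng.normal(size=1000)        # simulated normalized sensor series
history = s[:200]
past_noise = history - history.mean()         # assumed proxy for learned noise

sa1 = stealthy_attack(s, 500, 700, past_noise, alpha=0.0, beta=2.0)   # SA-1
sa3 = stealthy_attack(s, 500, 700, past_noise, alpha=0.05, beta=1.0)  # SA-3
print(sa1[500:700].var() > s[500:700].var())
print(sa3[500:700].mean() - s[500:700].mean())
```

SA-1 (α = 0, β = 2) inflates the variance of the attacked window, while SA-3 additionally shifts its mean by α.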

Attack scenarios. As illustrated in Figure 1, DAs and SAs can be conducted through two types of attacks. (1) Cyber attacks. Attackers can manipulate the data exchanged 1) between sensors and control programs, and 2) between SCADA and control programs. Specifically, attackers can conduct false data injection attacks [10,31] through Man-In-The-Middle (MITM) attacks [11]. Such an attacker can be an insider or an outsider. (2) Physical attacks. CPS can be geographically dispersed, so attackers may have physical access to sensors and actuators. Attackers can physically tamper with devices to conduct data spoofing attacks [32]. We do not consider attacks on actuators, since they do not manipulate the noise data.

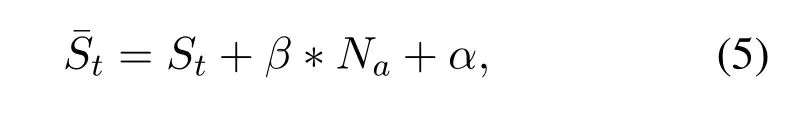

Figure 3. The workflow of DEEPNOISE.

IV. DESIGN

In this section,we present the design details of DEEPNOISE.First,we show the overall workflow of DEEPNOISE.Then,we present the details of each component of DEEPNOISE.

4.1 Overview of DEEPNOISE

As illustrated in Figure 3, DEEPNOISE consists of three main phases: Input data processing, Model training and testing, and Online detection.

Figure 4. The architecture of the autoencoder.

Input data processing. Since sensor data is usually heterogeneous and on different scales, DEEPNOISE first normalizes the raw input data. Then, DEEPNOISE builds time-window slices for each sensor's time series; the anomaly detectors check each slice and decide whether it contains an anomaly. Finally, the preprocessed data is split into training and testing datasets. The training dataset consists of sensor data from a normal period and is used to train the noise extractor and the anomaly detector. The testing dataset also contains normal data, but it is not used during training; it serves to validate that the models have been trained well and are not overfitting. An overfitted model does not generalize well and may fail on unseen data.

Model Training and Testing. The model training phase involves a noise extractor and an anomaly detector. For the noise extractor, the preprocessed data is fed into a Robust Deep Autoencoder (RDA); once training converges after a number of iterations, the input data is split into a system state matrix L and a noise matrix M. Then, L and M are sent to the anomaly detector to detect data integrity attacks. For the anomaly detector, DEEPNOISE builds an LSTM-based neural network that learns the temporal dependence in L and M, using past values to predict the current value. If the prediction error is above a threshold, the noise pattern has changed and an anomaly is detected. This threshold is determined by trying different values (details in Section 4.4), aiming for a balance between false positives and false negatives. The testing phase examines the trained models on unseen data to guard against overfitting, as introduced in the input data processing phase.

Online detection. Once the neural models in DEEPNOISE are well trained, DEEPNOISE can be deployed in the CPS to detect anomalies in real time.

4.2 Input Data Processing

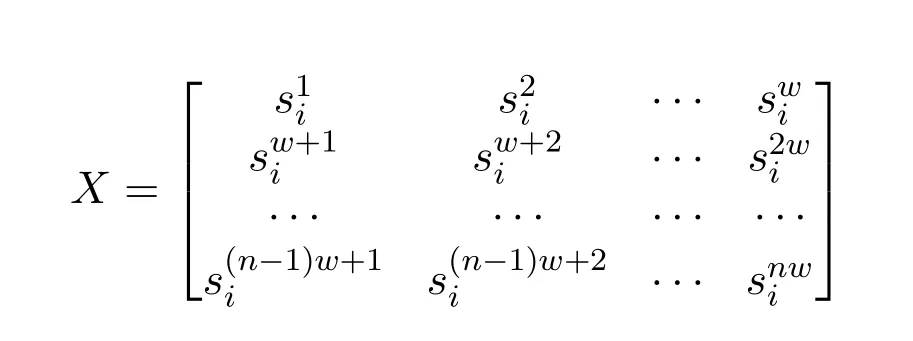

In CPS, the input data is usually multivariate time-series sensor data. The input data can be written as $S = (s_1, s_2, \dots, s_m)^\top \in \mathbb{R}^{m \times T}$, where m is the number of sensors and T is the number of time points. For each sensor time series $s_i$, DEEPNOISE first applies a Min-Max scaler [33,34] to transform the raw data into the range [0, 1]. To train an RDA, we need to transform the time series $s_i$ of one sensor into a matrix X. DEEPNOISE splits $s_i$ into fixed-size time-window subsequences. Then, we use each subsequence as a row and stack the rows to build X. So X is given by:

$$X = \begin{bmatrix} s_{i,1} & s_{i,2} & \cdots & s_{i,w} \\ s_{i,w+1} & s_{i,w+2} & \cdots & s_{i,2w} \\ \vdots & \vdots & \ddots & \vdots \\ s_{i,(n-1)w+1} & s_{i,(n-1)w+2} & \cdots & s_{i,nw} \end{bmatrix} \in \mathbb{R}^{n \times w}$$

where w is the size of the time window and $n = \lfloor T/w \rfloor$ is the number of rows. After preprocessing, X is fed to the noise extractor to extract the system states L and obtain the noise M. This process is applied to each sensor time series $s_i$ accordingly.
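The preprocessing of a single sensor series can be sketched in a few lines of NumPy. This sketch assumes non-overlapping windows and drops trailing samples that do not fill a complete window; both are assumptions about details the text leaves open. In a deployment, the minimum and maximum would be taken from the normal training data and reused on test data; the function names are hypothetical.

```python
import numpy as np

def min_max_scale(s):
    """Scale one sensor series into [0, 1] (assumes the series is not constant)."""
    s_min, s_max = s.min(), s.max()
    return (s - s_min) / (s_max - s_min)

def to_window_matrix(s, w):
    """Stack non-overlapping windows of length w as rows of an n x w matrix, n = floor(T / w)."""
    n = len(s) // w
    return s[: n * w].reshape(n, w)   # trailing points that do not fill a window are dropped

s = np.arange(10, dtype=float)
X = to_window_matrix(min_max_scale(s), w=3)
print(X.shape)  # (3, 3)
```

Because the rows are consecutive, non-overlapping windows, flattening X row by row (X.ravel()) restores the original time order, which is what the anomaly detector relies on when it flattens M.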

4.3 Noise Extractor

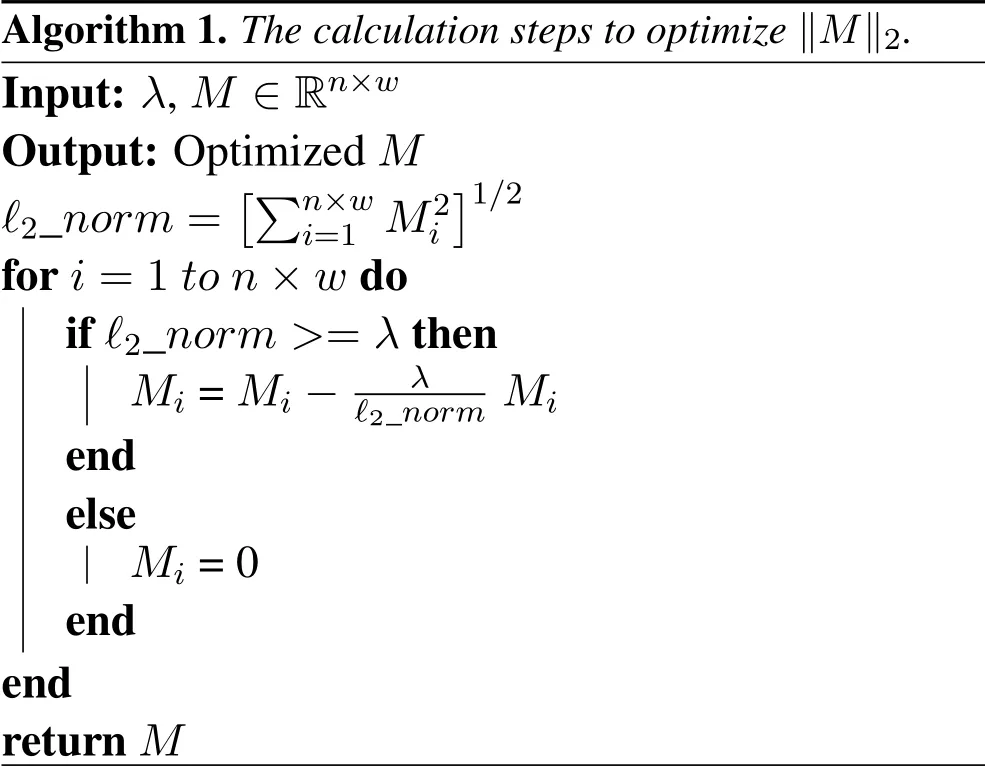

The noise extractor takes the preprocessed data X as input and uses an RDA to produce the system states L and the sensor noise matrix M. We describe the noise extractor in terms of (1) the optimization of RDA; (2) the proximal method for the ℓ2 norm; (3) the design of the deep autoencoder; and (4) the training of RDA.

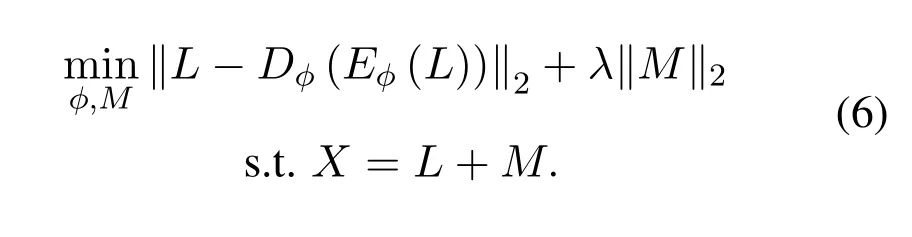

The optimization of RDA. As discussed in Section 2.3, RDA uses a sensor noise matrix M to extract noise and a deep autoencoder to learn the essential characteristics of the input data X. The penalty on M (‖M‖_1) can be replaced by other forms and need not be the ℓ1 norm. Different penalties detect different anomalies and affect the optimization process differently, so users can choose a penalty based on their goals. For example, the ℓ2,1 norm is a regularizer that computes the ℓ2 norm of each column of a matrix and then the ℓ1 norm of the resulting vector of column norms. It can capture structured outliers [18], e.g., interactions among sensors. In this work, we use ‖M‖_2 instead of ‖M‖_1. We choose the ℓ2-norm penalty so that the sensor and process noise in M is small (close to zero) but does not contain too many zeros (i.e., is not too sparse) [35]. Experiments with both settings confirmed that the ℓ2-norm penalty extracts sensor and process noise in CPS better than the ℓ1-norm penalty. So the optimization of RDA becomes:

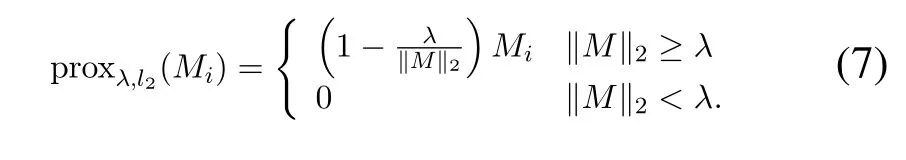
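The three penalties discussed above differ only in how the entries of M are aggregated. The sketch below computes them for a small matrix, treating ‖M‖_2 as the entry-wise ℓ2 (Frobenius) norm, which matches the sum over all n×w entries in Algorithm 1. The helper names are illustrative.

```python
import numpy as np

def l1_norm(M):
    """Sum of absolute values of all entries (the original RDA penalty)."""
    return np.abs(M).sum()

def l2_norm(M):
    """Entry-wise l2 norm over all entries, as in Algorithm 1 (equals the Frobenius norm)."""
    return np.sqrt(np.sum(M ** 2))

def l21_norm(M):
    """l2,1 norm: l2 norm of every column, then the l1 norm (sum) of those column norms."""
    return np.linalg.norm(M, axis=0).sum()

M = np.array([[3.0, 0.0],
              [4.0, 1.0]])
print(l1_norm(M), l2_norm(M), l21_norm(M))
```

Here the column norms are 5 and 1, so the ℓ2,1 norm is 6, while the ℓ1 and entry-wise ℓ2 norms are 8 and √26.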

The proximal method for the ℓ2 norm. During RDA training, the ℓ2 norm ‖M‖_2 needs to be optimized efficiently, which can be achieved through the proximal operator [36,37]:

$$\operatorname{prox}_{\lambda,\ell_2}(M) = \begin{cases} \left(1 - \dfrac{\lambda}{\|M\|_2}\right) M, & \|M\|_2 \ge \lambda \\ 0, & \|M\|_2 < \lambda \end{cases}$$

λ is the parameter presented in Eq. (6) and controls the shrinking of each element $M_i$ of M. Intuitively, a large λ encourages data to remain in L, so less data is isolated into M. Conversely, a small λ forces much of the data to be separated into M, which leads to smaller reconstruction errors in the deep autoencoder. The choice of λ depends on the purpose. For the anomaly detection task in this work, we seek a balance between small reconstruction errors and a well-formed distribution of M, since a good representation of M helps the LSTM-based anomaly detector. The shrinking process is shown in Algorithm 1. Namely, the ℓ2 norm of M is first calculated. Then, if this value is at least λ, each element $M_i$ of M is shrunk by the scale factor $1 - \lambda/\|M\|_2$. Otherwise, every element is set to 0, which should be avoided. So λ is a key parameter for the performance of the noise extractor. This step is repeated over the training iterations to optimize M. We show the impact of λ in the evaluation section (research question 2 in Section V).

Algorithm 1. The calculation steps to optimize ‖M‖_2.
Input: λ, M ∈ R^{n×w}
Output: optimized M
  ℓ2_norm = (Σ_{i=1}^{n×w} M_i²)^{1/2}
  for i = 1 to n×w do
    if ℓ2_norm ≥ λ then
      M_i = M_i − λ · M_i / ℓ2_norm
    else
      M_i = 0
    end
  end
  return M
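Algorithm 1 is the proximal operator of λ‖M‖_2, often called block soft-thresholding: the whole matrix is shrunk toward zero by one common factor rather than entry by entry. A direct NumPy translation is sketched below; the function name prox_l2 is our own. The comparison uses a strict > so that λ = 0 with an all-zero M does not divide by zero; when ‖M‖_2 = λ both branches return zero, so the result is unchanged.

```python
import numpy as np

def prox_l2(M, lam):
    """Proximal operator of lam * ||M||_2 (Algorithm 1), i.e. block soft-thresholding."""
    norm = np.sqrt(np.sum(M ** 2))        # entry-wise l2 norm over all n x w entries
    if norm > lam:
        return M - lam * M / norm         # every entry scaled by (1 - lam / norm)
    return np.zeros_like(M)               # the whole matrix collapses to zero

M = np.array([[3.0, 4.0]])                # ||M||_2 = 5
print(prox_l2(M, 1.0))                    # shrunk by the factor 0.8
print(prox_l2(M, 6.0))                    # lam too large: all zeros
```

Because the same factor multiplies every entry, entries are not individually driven to zero as with an ℓ1 penalty, which is why the ℓ2 penalty keeps M small but not sparse, unless λ is large enough to zero out the entire matrix.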

The design of the deep autoencoder. Given the optimization problem in Eq. (6), a deep autoencoder is used to learn the essential characteristics of the input data X, producing a matrix L that represents the system states of the CPS. Technically, E(·) and D(·) can take any form; in this work, DEEPNOISE adopts a standard deep autoencoder. As illustrated in Figure 4, the autoencoder consists of one input layer, one output layer, two encoder layers, one latent-space layer, and two decoder layers. The dimension of the input and output layers is w, which is determined by the dimension of X. We tried values of w from 60 to 200 in steps of 20 and found that w = 120 achieves a balance between training iterations and reconstruction losses. Since SWaT data is sampled once per second (Section 5.1), this means noise extraction is performed on every 120 seconds of sensor data. Further, we set the dimensions d1, d2, and d3 to 100, 80, and 60, respectively, to compress the input data. All these parameters have been tested on different types of sensors, e.g., water level and water flow sensors. After trying different settings, we empirically set the hyperparameters of the autoencoder as follows.

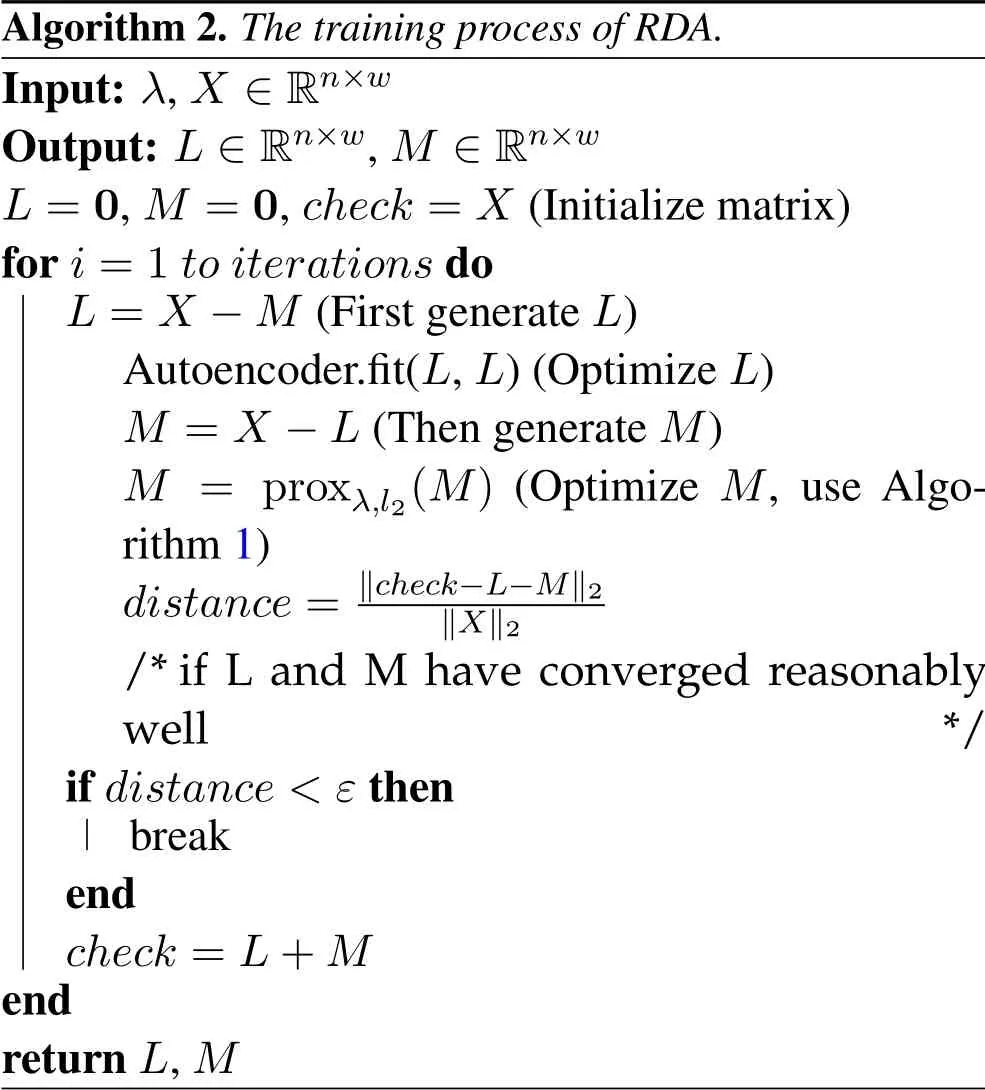
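The layer sizes described above (w = 120, d1 = 100, d2 = 80, d3 = 60) can be checked with a plain NumPy forward pass. This sketch only shows how data flows through the encoder and decoder: the sigmoid activations and the weight initialization are assumptions, since the actual hyperparameters are reported separately, and the models in this work are trained with Keras. All names are hypothetical.

```python
import numpy as np

# Assumed layout: w -> d1 -> d2 -> d3 (latent) -> d2 -> d1 -> w
LAYER_SIZES = [120, 100, 80, 60, 80, 100, 120]

def init_params(sizes, rng):
    """One (weights, bias) pair per dense layer, with a simple scaled normal init."""
    return [(rng.normal(scale=np.sqrt(1.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(X, params):
    """Encode X (n x 120) down to the 60-d latent layer and decode back to 120-d."""
    h = X
    latent = None
    for layer, (W, b) in enumerate(params):
        h = sigmoid(h @ W + b)
        if layer == 2:            # the third dense layer outputs the latent code
            latent = h
    return latent, h

rng = np.random.default_rng(0)
params = init_params(LAYER_SIZES, rng)
X = rng.random((5, 120))          # five windows of min-max-scaled data
latent, out = forward(X, params)
print(latent.shape, out.shape)    # (5, 60) (5, 120)
```

Sigmoid outputs are a natural fit for min-max-scaled inputs in [0, 1], but this is only one plausible choice.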

The training of RDA. In the optimization problem of Eq. (6), the autoencoder and M have to be optimized at the same time. Back-propagation [39] and the Alternating Direction Method of Multipliers (ADMM) [38] can be used to minimize the two parts of Eq. (6); we use back-propagation to optimize the autoencoder and ADMM to optimize the matrix M. The training process of RDA is shown in Algorithm 2. In each iteration, we first calculate L from the matrix M optimized in the previous iteration. Then, L is fed into the autoencoder, which is optimized through back-propagation, and L is replaced by the autoencoder's reconstruction. Next, we subtract L from X to obtain M, and we keep optimizing M with Algorithm 1. Finally, if L and M have converged reasonably well, an early-stopping mechanism returns the results; otherwise, all iterations are carried out to obtain the optimized L and M.
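The alternating procedure can be sketched in NumPy as follows. To keep the example self-contained, a rank-k linear projection (PCA) stands in for the deep autoencoder, so fit() solves a least-squares problem instead of running back-propagation; the class and function names are hypothetical. The proximal step is the same block soft-thresholding as in Algorithm 1.

```python
import numpy as np

def prox_l2(M, lam):
    """Block soft-thresholding (Algorithm 1): shrink M by lam in l2 norm, or zero it."""
    norm = np.sqrt(np.sum(M ** 2))
    return M - lam * M / norm if norm > lam else np.zeros_like(M)

class LinearAutoencoder:
    """Hypothetical stand-in for the deep autoencoder: project rows onto k principal directions."""

    def __init__(self, k):
        self.k = k

    def fit(self, L):
        self.mu = L.mean(axis=0)
        _, _, Vt = np.linalg.svd(L - self.mu, full_matrices=False)
        self.W = Vt[: self.k]          # encoder E; its transpose acts as the decoder D
        return self

    def reconstruct(self, L):
        return (L - self.mu) @ self.W.T @ self.W + self.mu   # D(E(L))

def train_rda(X, lam, autoencoder, iterations=50, eps=1e-7):
    """Alternate between fitting L with the autoencoder and shrinking M (Algorithm 2)."""
    L = np.zeros_like(X)
    M = np.zeros_like(X)
    check = X.copy()
    for _ in range(iterations):
        L = X - M                                  # the part of X not yet treated as noise
        L = autoencoder.fit(L).reconstruct(L)      # keep what the autoencoder can represent
        M = prox_l2(X - L, lam)                    # residual, shrunk as in Algorithm 1
        distance = np.linalg.norm(check - L - M) / np.linalg.norm(X)
        if distance < eps:                         # L and M have stopped changing
            break
        check = L + M
    return L, M

rng = np.random.default_rng(0)
t = np.arange(50 * 12)
series = 0.5 + 0.3 * np.sin(2 * np.pi * t / 12) + 0.05 * rng.normal(size=t.size)
X = series.reshape(50, 12)                         # n = 50 windows of w = 12 samples
L, M = train_rda(X, lam=0.3, autoencoder=LinearAutoencoder(k=1))
print(np.linalg.norm(X - L - M))                   # at most lam
```

Whatever autoencoder is used, the final X − L − M has ℓ2 norm at most λ, because the proximal step removes at most λ (in ℓ2 norm) from the residual X − L.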

Algorithm 2. The training process of RDA.
Input: λ, X ∈ R^{n×w}
Output: L ∈ R^{n×w}, M ∈ R^{n×w}
  L = 0, M = 0, check = X                (initialize matrices)
  for i = 1 to iterations do
    L = X − M                            (first generate L)
    Autoencoder.fit(L, L)                (optimize L)
    L = D(E(L))                          (reconstruct L with the trained autoencoder)
    M = X − L                            (then generate M)
    M = prox_{λ,ℓ2}(M)                   (optimize M using Algorithm 1)
    distance = ‖check − L − M‖_2 / ‖X‖_2
    if distance < ε then                 (L and M have converged reasonably well)
      break
    end
    check = L + M
  end
  return L, M

4.4 Anomaly Detector

After the noise extractor is applied, the input data is split into a system state matrix L and a sensor noise matrix M (noise patterns are presented in research question 1 in Section V). To detect data integrity attacks, DEEPNOISE uses an LSTM-based detector to capture the temporal dependence in L and M. The detectors for L and M share the same architecture, but we deploy them separately because L and M have different characteristics. We use the detector for M to explain the details. The detector is trained on data from a normal period, where past values are used to predict the current value. When the prediction error is above a threshold (e.g., when there are attacks), an anomaly is detected. In particular, we describe the anomaly detector in terms of (1) input data processing; (2) the design of the LSTM detector; and (3) the selection of the threshold.

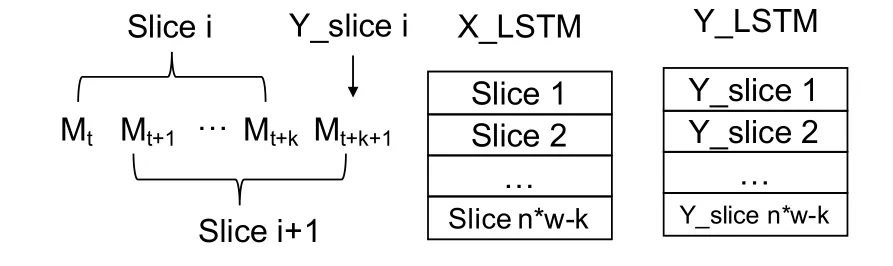

Input data processing. The detector uses past values to predict the current value, so we create time-window slices as its input data. Given M from the noise extractor, DEEPNOISE first flattens M into a one-dimensional time-series array F_M = (M_1, M_2, …, M_{n×w}), where n is the number of rows and w is the size of the time window. Further, F_M is split into time-window slices, each of which is used to predict the value that follows it. As illustrated in Figure 5, we define Slice i = (M_t, M_{t+1}, …, M_{t+k}), which is used to predict Y_slice i = M_{t+k+1}. Each slice therefore spans k + 1 consecutive values, and the stride between two consecutive slices is 1, so the next slice is (M_{t+1}, M_{t+2}, …, M_{t+k+1}). Finally, all slices are stacked together to form the detector input X_LSTM, and the targets are denoted as Y_LSTM. The same process is applied to L.

Figure 5. The generation of slices,X_LSTM,and Y_LSTM.

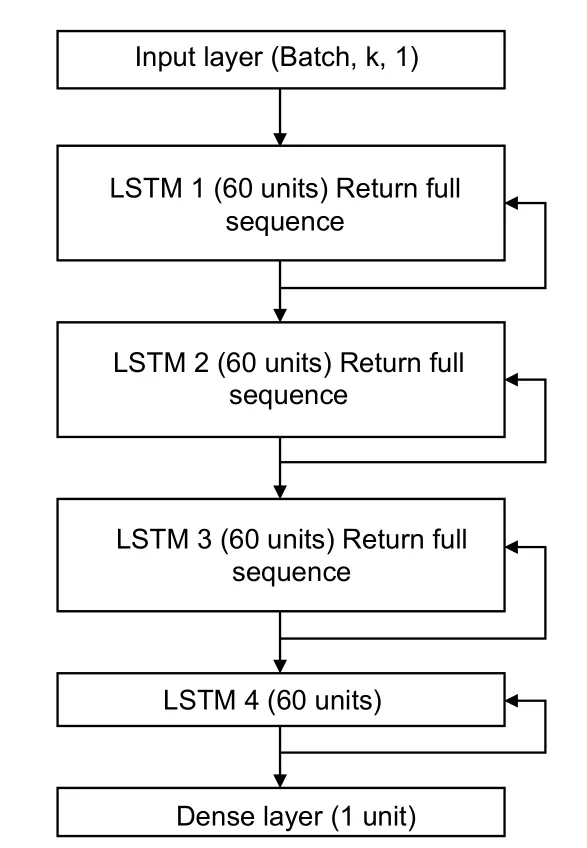
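Slice generation can be sketched as follows, using the indexing Slice i = (M_t, …, M_{t+k}) → M_{t+k+1} from the text, i.e., k + 1 inputs per slice and a stride of 1. The function name is hypothetical; the trailing feature axis produces the (samples, time steps, features) shape that Keras recurrent layers expect.

```python
import numpy as np

def make_slices(M, k):
    """Flatten M row by row and build stride-1 slices of k + 1 values plus next-value targets."""
    FM = M.ravel()                                   # (M_1, M_2, ..., M_{n*w})
    length = k + 1                                   # Slice i = (M_t, ..., M_{t+k})
    X_lstm = np.array([FM[t:t + length] for t in range(len(FM) - length)])
    Y_lstm = FM[length:]                             # target M_{t+k+1} for each slice
    return X_lstm[..., np.newaxis], Y_lstm           # add a feature axis of size 1

M = np.arange(8.0).reshape(2, 4)                     # toy noise matrix, n = 2, w = 4
X_lstm, Y_lstm = make_slices(M, k=2)
print(X_lstm.shape)  # (5, 3, 1)
```

The first slice is (0, 1, 2) with target 3, and the last is (4, 5, 6) with target 7.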

The design of the LSTM detector. The X_LSTM time slices generated from the normal period are fed to the LSTM detector, which captures the temporal dependence in the sensor noise. As illustrated in Figure 6, DEEPNOISE adopts four LSTM layers, each with 60 units. The first three layers return the output of every time step (the full sequence), so that each subsequent LSTM layer receives a sequence as input. Finally, a dense layer predicts Y_LSTM. When there is an attack, large prediction errors are produced, so attacks can be detected. The same LSTM detector architecture is applied to L as well.

Figure 6. The architecture of the LSTM detector.

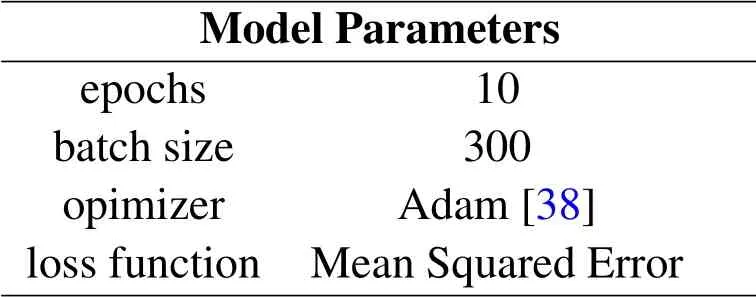

After testing different options, we set the hyperparameters of the LSTM detector as follows.

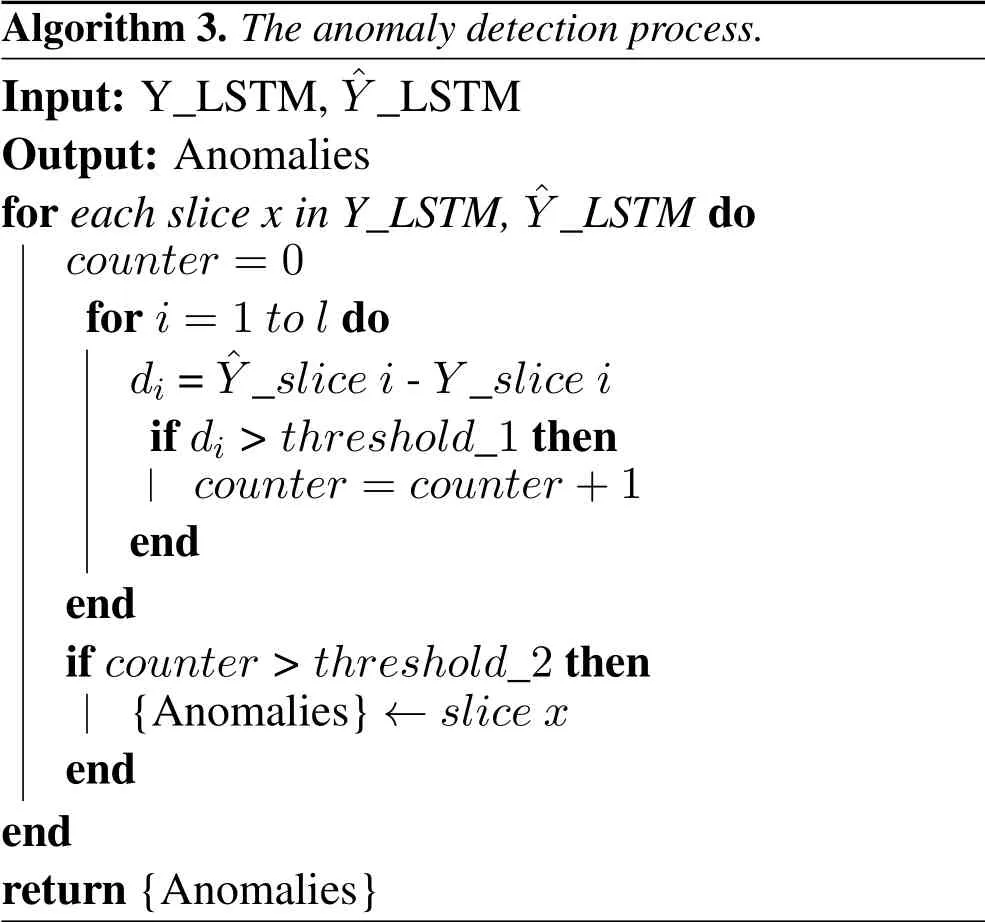

The selection of the threshold. DEEPNOISE uses thresholds to detect anomalies. We denote the value predicted by the LSTM detector as Ŷ_slice i. Then the difference d_i between Ŷ_slice i and the real value Y_slice i can be calculated. We use a detection window to decide whether an anomaly occurs. Namely, we define a sequence of differences D = (d_t, d_{t+1}, …, d_{t+l}) as a detection window. For each d_i in D, if d_i > threshold_1, we increment a counter. Then, if counter > threshold_2, DEEPNOISE determines that the detection window is anomalous. Both threshold_1 and threshold_2 are empirically determined during the training phase on the normal dataset. These steps are described in Algorithm 3.

Algorithm 3. The anomaly detection process.
Input: Y_LSTM, Ŷ_LSTM
Output: Anomalies
  for each detection window x of (Y_LSTM, Ŷ_LSTM) do
    counter = 0
    for i = 1 to l do
      d_i = |Ŷ_slice i − Y_slice i|
      if d_i > threshold_1 then
        counter = counter + 1
      end
    end
    if counter > threshold_2 then
      Anomalies ← Anomalies ∪ {x}
    end
  end
  return Anomalies
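Algorithm 3 can be sketched in NumPy as below. The text does not say whether consecutive detection windows overlap, so this sketch assumes non-overlapping windows of l errors and reports the start index of each anomalous window; the function name and the threshold values in the usage example are arbitrary.

```python
import numpy as np

def detect_anomalous_windows(y_true, y_pred, l, threshold_1, threshold_2):
    """Return start indices of windows whose count of large errors exceeds threshold_2."""
    d = np.abs(y_pred - y_true)                  # prediction error d_i for every slice
    anomalies = []
    for start in range(0, len(d) - l + 1, l):    # assumed: non-overlapping windows of length l
        counter = int(np.sum(d[start:start + l] > threshold_1))
        if counter > threshold_2:
            anomalies.append(start)
    return anomalies

y_true = np.zeros(20)
y_pred = np.zeros(20)
y_pred[10:15] = 0.5                              # five consecutive large prediction errors
print(detect_anomalous_windows(y_true, y_pred, l=5, threshold_1=0.1, threshold_2=3))  # [10]
```

Counting exceedances inside a window, rather than reacting to a single large error, makes the detector less sensitive to isolated prediction glitches.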

4.5 Online Detection

Once the noise extractor and anomaly detector have been trained on the normal dataset, DEEPNOISE can be deployed to detect attacks in newly generated CPS measurement data, which can be real-time streaming data or offline datasets. In this work, DEEPNOISE is evaluated on the SWaT testbed, whose details are given in Section 5.1.

V. EVALUATION

In this section, we show the effectiveness of DEEPNOISE through experiments on a real-world CPS dataset. First, we present the experimental setup. Then, we conduct experiments to answer four research questions and compare the detection performance with state-of-the-art methods.

5.1 Experimental Setup

Implementation. The autoencoder and LSTM models are trained on a Linux desktop with a 3.70 GHz Intel i7-8700K CPU and 32 GB of memory. The machine is also equipped with an NVIDIA GeForce GTX 1080 Ti GPU with 12 GB of memory to speed up training. The deep neural models are implemented with Keras [40], which provides high-level APIs written in Python. Matrix operations and calculations are implemented with NumPy [41].

Testbed. We evaluate DEEPNOISE on a fully functional, scaled-down CPS testbed named Secure Water Treatment (SWaT) [30]. This testbed takes raw water as input and uses chemical processes and physical filtering to produce clean water. Specifically, SWaT consists of six stages. First, raw water is pumped into the testbed. At Stage 2, chemicals are added to the water. Next, an Ultrafiltration (UF) system, dechlorination using UV lamps, and a Reverse Osmosis (RO) system physically clean the water. Finally, a backwash process cleans the membranes used in the preceding filtering process. Six PLCs control these operations, and a communication network between the PLCs and devices transmits measurement data and control signals.

Dataset. Sensor measurements and actuator control commands are recorded while the testbed operates. There are 24 sensors and 27 actuators, and the 51-dimensional data are sampled once per second. The dataset contains 7 days of data from the normal period, with 496,800 data points per sensor. It also contains 4 days of data that include attacks, with 449,917 data points per sensor. During the attack period, 36 attacks are launched. In this work, we consider data integrity attacks that manipulate sensor measurements. Details of the dataset can be found in [30].

5.2 Results

We conduct experiments to answer the following research questions:

·RQ1: Do data integrity attacks change the sensor and process noise?

·RQ2: What is the impact of λ in Eq. (6) on DEEPNOISE?

·RQ3: What is the performance of DEEPNOISE in detecting direct attacks?

·RQ4: What is the performance of DEEPNOISE in detecting stealthy attacks?

RQ1: Do data integrity attacks change the sensor and process noise? DEEPNOISE assumes that data integrity attacks change the sensor and process noise in measurement data. For example, the DA1 attack sets sensor values to a constant, S̄_t = C, which changes the noise pattern. In this section, we validate this assumption visually by comparing the noise pattern of a normal period with that under a data integrity attack. Due to the page limit, we present only one attack here. However, we have checked all attacks on different types of sensors, and the complete detection performance evaluation is given in RQ3 and RQ4.

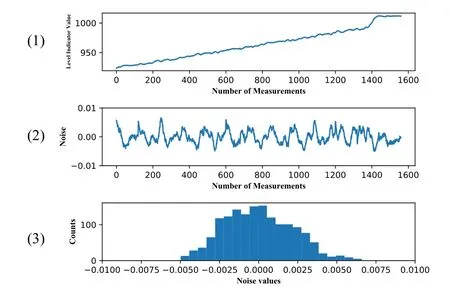

To this end, we randomly choose about 1,500 data points of the Level Indicator Transmitter sensor LIT-301 from the normal-period dataset. LIT-301 monitors the water level of a tank in Stage 3 of the testbed. As illustrated in Figure 7, the top pane (1) shows that the sensor measurements periodically increase and decrease due to the physical water treatment process. The middle pane (2) shows the sensor and process noise extracted by DEEPNOISE, which represents the noise pattern of a normal period. Note that the sensor measurements are normalized, so the noise values here do not represent the absolute magnitude of noise in the physical process. However, the noise pattern is preserved after normalization. The bottom pane (3) shows the histogram of noise values (probability density function).

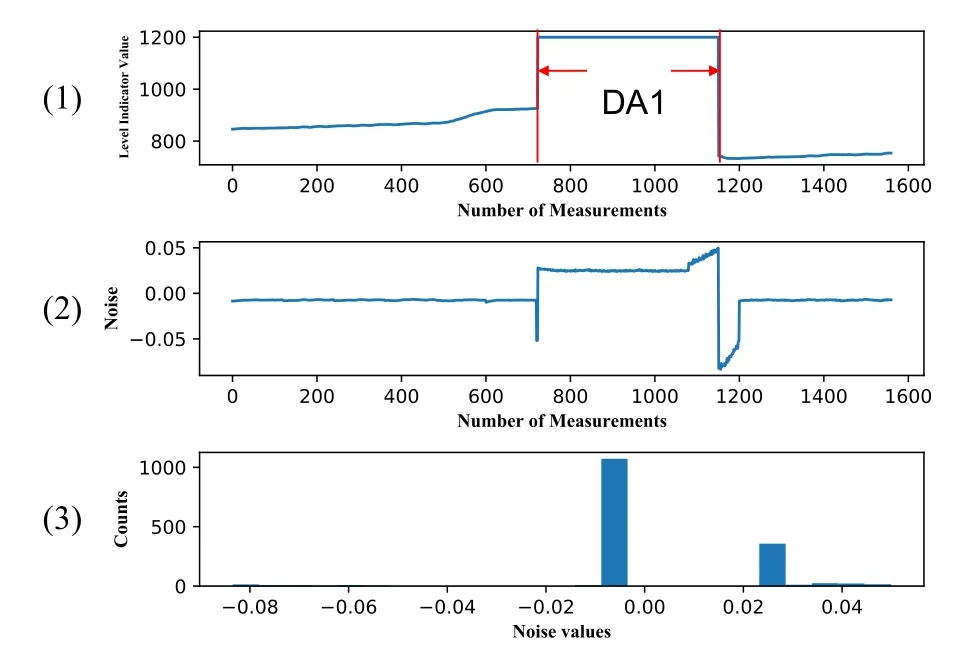

Meanwhile, we present the noise of the LIT-301 sensor under a DA1-1 attack (attack #7 in the SWaT dataset). This attack sets the water level higher than the normal value, aiming to stop the inflow to the tank and damage a pump. As illustrated in Figure 8, the sensor measurements between the two red lines in the top pane (1) are set to a fixed value. As a result, the sensor and process noise in the middle pane (2) shows an abnormal pattern that differs from the normal pattern in Figure 7. The histogram in the bottom pane (3) also shows that the noise distribution has changed. To detect such changes automatically, DEEPNOISE uses an LSTM-based detector that captures the pattern of the noise. Note that detection is performed on a Slice_i, as discussed in Section 4.4. The noise data in Figures 7 and 8 therefore only give an overall picture of the data distribution and are not used to detect attack #7. This experiment validates that data integrity attacks change the pattern of sensor and process noise.
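The effect that a DA1 constant-value attack has on the noise can be reproduced with a toy signal. In the sketch below, RDA is not reproduced. Instead, the deviation of each point from a trailing moving average serves as a crude, hypothetical stand-in for the noise matrix M. The signal is synthetic (a slow ramp plus Gaussian noise) and is not SWaT data. The point is only that replacing readings with a constant C collapses the spread of the extracted noise.

```python
import random
import statistics
from typing import List, Sequence


def moving_average_residual(values: Sequence[float], window: int = 5) -> List[float]:
    """Residual of each point with respect to the mean of the previous `window` points.

    Hypothetical stand-in for the RDA noise matrix M, used only for illustration.
    """
    residuals = []
    for t in range(window, len(values)):
        baseline = sum(values[t - window:t]) / window
        residuals.append(values[t] - baseline)
    return residuals


random.seed(0)
# Synthetic normalized level signal: slow ramp plus Gaussian sensor noise.
normal = [0.001 * t + random.gauss(0.0, 0.02) for t in range(1500)]
# DA1-style manipulation: readings in points 700..999 are replaced by a constant C = 0.9.
attacked = normal[:700] + [0.9] * 300 + normal[1000:]

normal_noise = moving_average_residual(normal)
attack_window_noise = moving_average_residual(attacked[700:1000])

print(statistics.pstdev(normal_noise))         # roughly the sensor-noise level
print(statistics.pstdev(attack_window_noise))  # effectively zero: the noise has vanished
```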

Figure 7. Level indicator LIT-301 sensor measurements and noise of a normal period.(1) Level indicator values;(2) Extracted noise (normalized); (3) Histogram of noise values.

Figure 8. Level indicator LIT-301 sensor measurements and noise of a DA1 attack period.(1) Level indicator values; (2) Extracted noise (normalized); (3) Histogram of noise values.

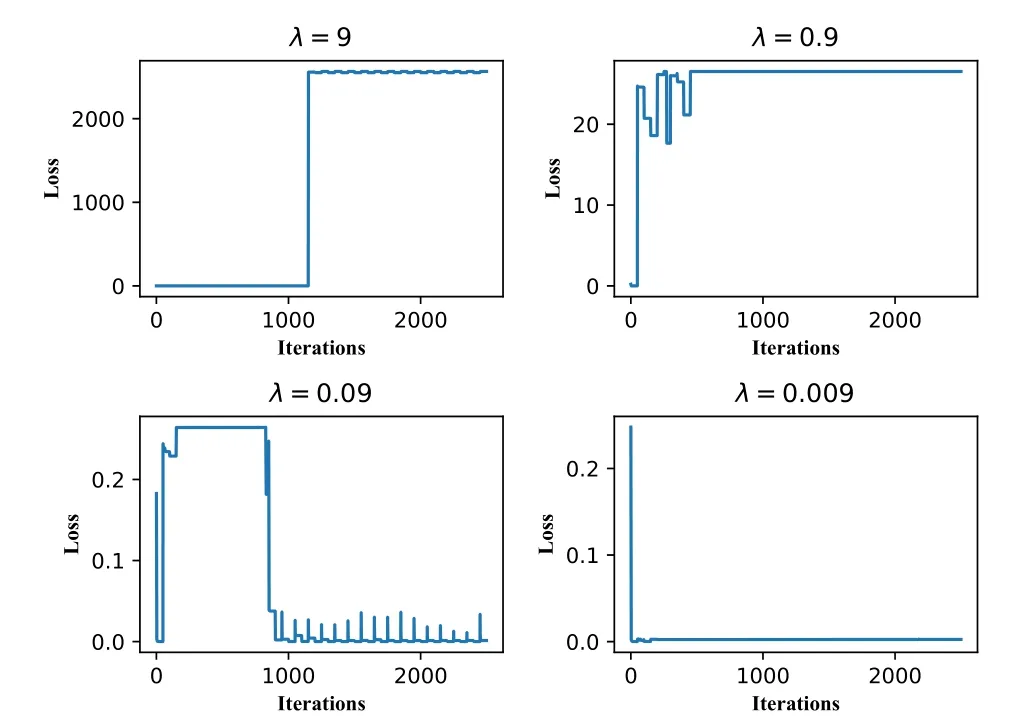

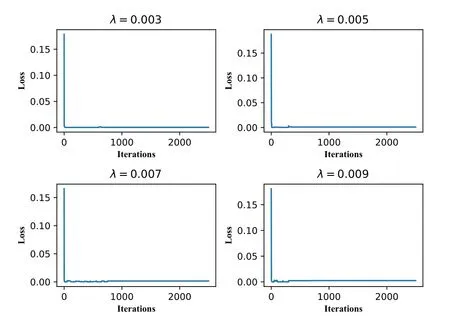

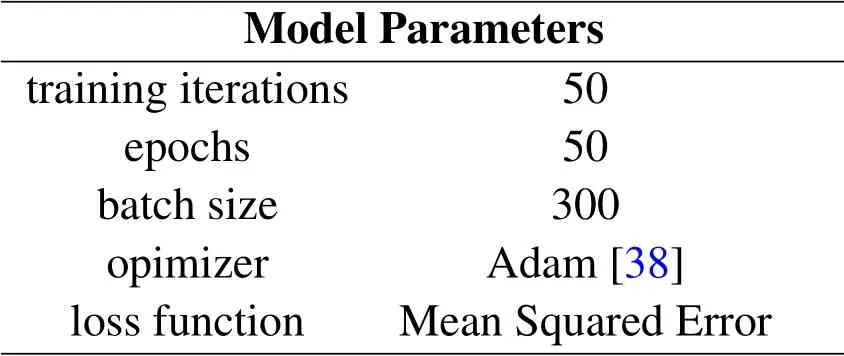

RQ2: What is the impact of λ in Eq. (6) on DEEPNOISE? As presented in Section 4.3, λ affects the optimization of M and the training process of RDA. To show the impact of λ quantitatively, we calculate and present the losses of the autoencoder in RDA under different λ values. We use the same model parameters as in Section 4.3: RDA is trained for 50 iterations, and in each iteration the autoencoder is trained for 50 epochs. We record the loss of the autoencoder after each epoch. As illustrated in Figure 9, we train RDA to isolate the noise of the level indicator sensor LIT-301 with λ set to 9, 0.9, 0.09, and 0.009, in order to determine the proper magnitude of λ. We find that a large λ (i.e., λ = 9, 0.9, or 0.09) makes RDA hard to converge. This is because too much noise remains in L, so the autoencoder cannot learn the characteristics of the noisy data. Testing these values determines the magnitude of λ. We then investigate different λ values of the same magnitude to fine-tune λ. As illustrated in Figure 10, the autoencoder converges at a similar speed for these values, so λ within the same magnitude can be tuned according to the characteristics of the input data. In this work, we set λ = 0.009.
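Why a large λ leaves noise in L can be illustrated with the ℓ1 soft-thresholding step used in the robust deep autoencoder of Zhou et al. [18]. Eq. (6) is not reproduced in this section, so the sketch below assumes that formulation. In that formulation, the noise part is obtained by shrinking the autoencoder's residual X − D(E(L)) by λ. The function names are hypothetical. The reconstruction is given rather than trained, so this shows only the split of X into L and M, not the full alternating optimization.

```python
from typing import List, Tuple


def shrink(lam: float, values: List[float]) -> List[float]:
    """Elementwise soft-thresholding, the proximal operator of lam * ||.||_1."""
    out = []
    for v in values:
        if v > lam:
            out.append(v - lam)
        elif v < -lam:
            out.append(v + lam)
        else:
            out.append(0.0)  # small residuals stay in L, not in M
    return out


def split_noise(x: List[float], reconstruction: List[float], lam: float) -> Tuple[List[float], List[float]]:
    """Hypothetical single split of x into a clean part L and a noise part M (x = L + M)."""
    residual = [xi - ri for xi, ri in zip(x, reconstruction)]
    m = shrink(lam, residual)
    l_part = [xi - mi for xi, mi in zip(x, m)]
    return l_part, m


x = [0.50, 0.52, 0.47, 0.80, 0.49]
reconstruction = [0.50, 0.50, 0.50, 0.50, 0.50]
_, m_small = split_noise(x, reconstruction, lam=0.009)  # most residual moves into M
l_big, m_big = split_noise(x, reconstruction, lam=0.9)  # M is empty, all noise stays in L
```

With λ = 0.9, every residual falls inside the threshold, so M is all zeros and L equals the noisy input. This is consistent with the convergence difficulty reported for large λ.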

Figure 9. The losses of the autoencoder in RDA under different λ settings, which affect the convergence of RDA. An appropriate λ is chosen based on the characteristics of the input data.

Figure 10. The losses of the autoencoder in RDA under λ of the same magnitude.

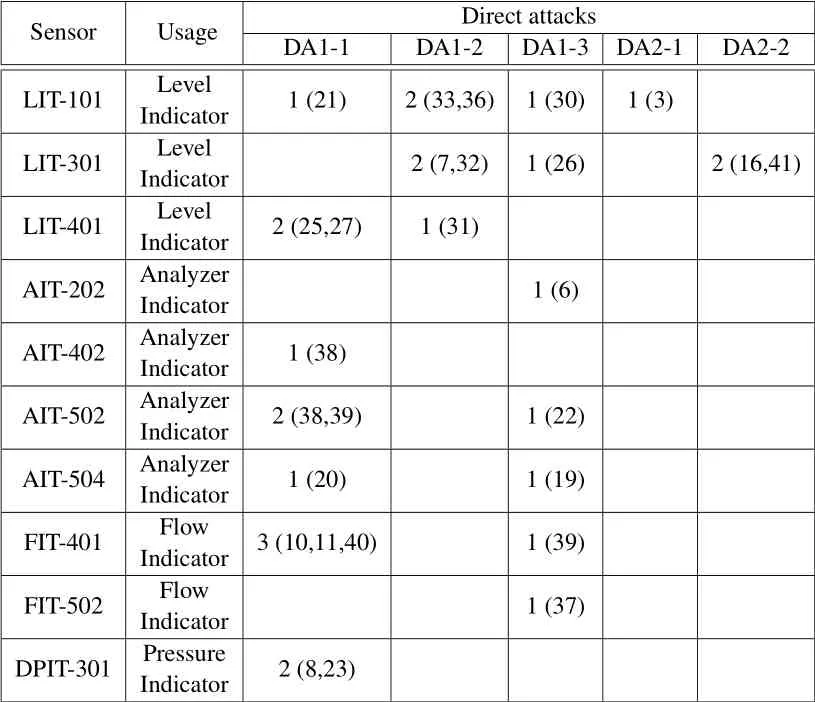

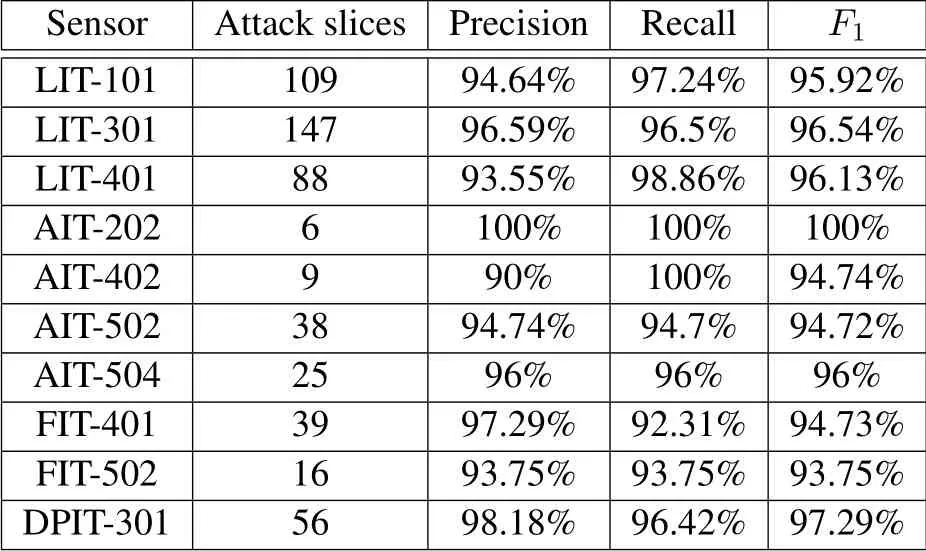

RQ3: What is the performance of DEEPNOISE in detecting direct attacks? To measure this performance, we first summarize all direct attacks in the SWaT dataset. As shown in Table 3, 10 sensors are targeted by 27 DAs in the dataset. Note that one attack may affect several sensors, so the total number of DAs exceeds the number of attacks. We also list the original serial numbers of the attacks. For example, 2(33,36) denotes two attacks, #33 and #36 in the dataset. All five types of DAs are included in the dataset. We then pre-process the unseen attack data and feed it to the trained DEEPNOISE, with the slice length k set to 30. We use precision, recall, and F1 score to measure detection performance. We define a True Positive (TP) as a slice in an attack period that is detected as anomalous, a False Positive (FP) as a slice in a normal period that is predicted as anomalous, and a False Negative (FN) as a slice in an attack period that is predicted as normal. Precision is TP/(TP+FP), recall is TP/(TP+FN), and F1 = 2 × Precision × Recall/(Precision + Recall).
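The slice-level metrics defined above can be computed as in the following sketch. The section does not say how a slice of length k = 30 is labeled when only part of it overlaps an attack. The `label_slices` rule below (a slice counts as an attack slice if any of its points is attacked) is therefore a hypothetical choice for illustration.

```python
from typing import List, Sequence, Tuple


def slice_metrics(truth: Sequence[bool], predicted: Sequence[bool]) -> Tuple[float, float, float]:
    """Precision, recall and F1 over slices (True means anomalous / attack)."""
    tp = sum(1 for t, p in zip(truth, predicted) if t and p)
    fp = sum(1 for t, p in zip(truth, predicted) if not t and p)
    fn = sum(1 for t, p in zip(truth, predicted) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def label_slices(point_labels: Sequence[bool], k: int = 30) -> List[bool]:
    """Hypothetical rule: a non-overlapping length-k slice is an attack slice if any point is attacked."""
    return [any(point_labels[i:i + k]) for i in range(0, len(point_labels) - k + 1, k)]


truth = [True] * 4 + [False] * 6
predicted = [True, True, True, False, True] + [False] * 5
precision, recall, f1 = slice_metrics(truth, predicted)  # TP = 3, FP = 1, FN = 1
```

Here precision, recall, and F1 are all 0.75. Note that averaging per-attack F1 scores, as done for Table 4, generally differs from the F1 computed from the averaged precision and recall.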

Table 1. The parameters of the autoencoder.

Table 2. The parameters of the LSTM detector.

Table 3. Sensors and direct attacks used in the experiment. For example, 2(33,36) denotes two attacks, #33 and #36 in the SWaT dataset.

We present the detection results of DEEPNOISE in Table 4. All DAs in the dataset are detected by DEEPNOISE. The detection performance for each sensor is evaluated on the DAs targeting that sensor. Overall, DEEPNOISE achieves high precision and recall. On average, the precision is 95.47%, the recall is 96.58%, and F1 is 95.98%, where the average is the mean detection performance over all 27 direct attacks.

Table 4. The attack detection performance for DAs. All sensors are under DAs as described in Table 3. Attack slices are the number of slices in the LSTM detector module. Average results are calculated over all attacks on each sensor.

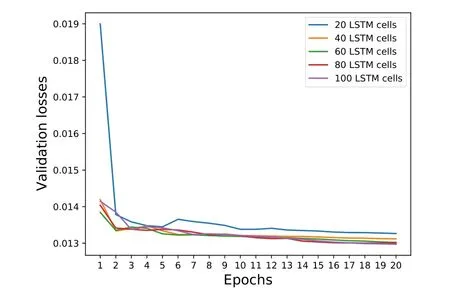

To investigate the impact of the number of LSTM cells, we calculate the validation loss of the LSTM model at each epoch for different numbers of LSTM cells. The validation losses are the prediction errors of DEEPNOISE on the validation dataset. Specifically, we train the LSTM model for 20 epochs with 20, 40, 60, 80, and 100 LSTM cells. Figure 11 shows the validation losses (prediction errors). In general, more LSTM cells yield smaller prediction errors, but they also incur longer training time. Therefore, users need to balance detection accuracy against training overhead.

Figure 11. The validation losses of the LSTM model for each epoch under a different number of LSTM cells.

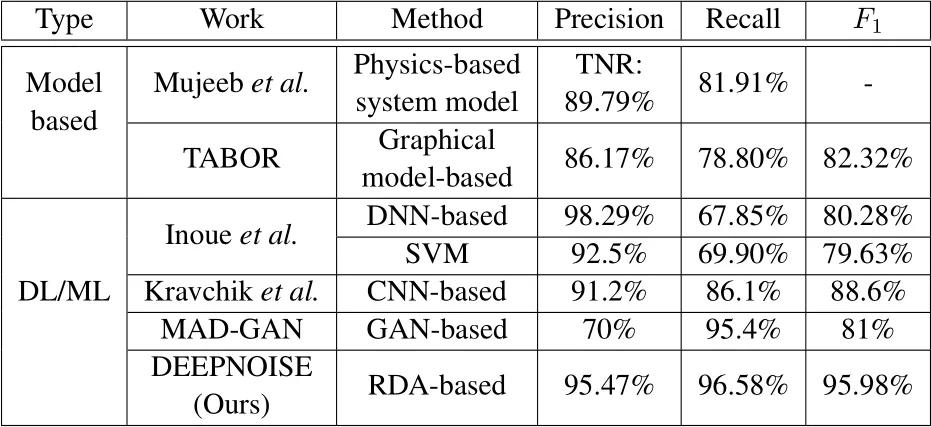

We also compare DEEPNOISE with state-of-the-art model-based and deep learning-based methods. All of these methods are evaluated on the SWaT dataset and detect DAs as described above. As shown in Table 5, DEEPNOISE outperforms existing methods. In particular, DEEPNOISE achieves a balance between false positives and false negatives. Details of these methods can be found in Section VII.

Table 5. Performance comparison with existing work. Mujeeb et al. used the True Negative Rate (TNR), TN/(TN+FP), to measure performance. All methods are evaluated on the SWaT testbed. DL denotes "deep learning" and ML denotes "machine learning".

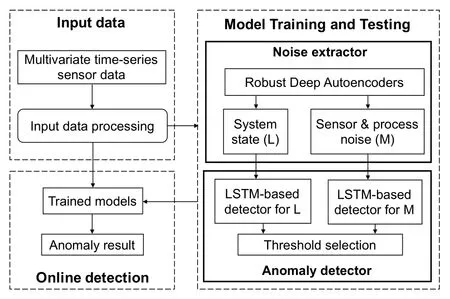

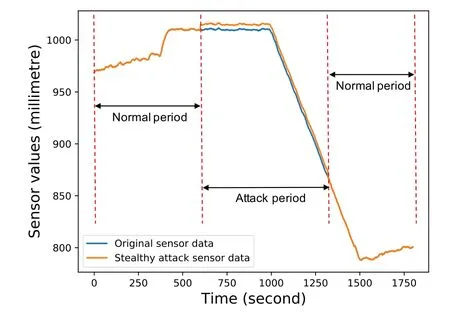

RQ4: What is the performance of DEEPNOISE in detecting stealthy attacks? We evaluate DEEPNOISE on stealthy attacks (SAs) as defined in Section III. Note that these SAs were also proposed in the Noise [8] work, but the performance of Noise on SAs was not evaluated. To the best of our knowledge, no other work has evaluated the detection of SAs either. Since the SWaT dataset contains no such SAs, we simulate them based on normal data. Figure 12 shows an example SA. During the attack period, we choose different β and α values in Eq. (5) to generate noise of different scales, and the simulated noise is added to the original value. N_a is chosen from normal data that follows the distribution of sensor noise. The manipulated sensor values show no large sudden change, and they are not constant values.

Figure 12. An example of a stealthy attack.

We set the attack period to 100 minutes. In particular, we set β to 2 and α to 0, 5, and 10. The same combination is used in the Noise work, which allows a fair comparison with DEEPNOISE. Technically, β and α can be set as small as desired to remain stealthy. However, if β and α are too small, such stealthy attacks cannot achieve the intended effect even though they avoid detection. We therefore choose β and α so that the deviation of sensor values is on the same scale as in the direct attacks. Table 6 presents the detection results. DEEPNOISE achieves high precision and recall when detecting SAs. We find that adding more noise has little or no impact on detection performance. This is because once the noise change exceeds the detection threshold, adding more noise keeps it above the threshold. Overall, DEEPNOISE detects SAs better than DAs. After manually examining the prediction errors of the LSTM detector, we find that injecting noise (SAs) causes larger prediction errors than removing noise (DAs).
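At SWaT's 1-second sampling rate, a 100-minute attack period covers 6,000 samples, which is 200 slices of length k = 30. The sketch below works out this arithmetic. It also shows a simulated stealthy manipulation in which noise resampled from the normal period is scaled by β and added inside the attack window. Eq. (5) is defined in Section III and is not reproduced here. The sketch therefore models only the β scaling of resampled normal noise and deliberately omits the role of α. The function `inject_scaled_noise` is hypothetical.

```python
import random
from typing import List, Sequence

SAMPLING_PERIOD_S = 1   # SWaT records one sample per second
ATTACK_MINUTES = 100    # attack period used for the simulated SAs
SLICE_LENGTH = 30       # slice length k used by the detector

attack_samples = ATTACK_MINUTES * 60 // SAMPLING_PERIOD_S  # 6000 samples
attack_slices = attack_samples // SLICE_LENGTH             # 200 slices


def inject_scaled_noise(values: Sequence[float], normal_noise: Sequence[float],
                        beta: float, start: int, length: int, seed: int = 0) -> List[float]:
    """Hypothetical SA: add beta * (noise resampled from the normal period) inside a window.

    The input sequence is not modified; a manipulated copy is returned.
    """
    rng = random.Random(seed)
    out = list(values)
    for t in range(start, min(start + length, len(out))):
        out[t] += beta * rng.choice(normal_noise)
    return out
```

Because the injected values are drawn from the normal noise distribution, the manipulated series has no sudden jump and is never constant, matching the SA behavior described above.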

Table 6. The attack detection performance for SAs. β is set to 2, and α is set to 0, 5, and 10. Performance is evaluated with precision, recall, and F1.

VI.DISCUSSION

The interpretation of the noise matrix M. Zhou et al. [18] proved that RDA is effective at extracting noise into the matrix M, both in theory and in practice. We argue that the values of M need not equal the noise values extracted by system model-based methods, especially because the inputs to RDA are usually normalized. Nevertheless, M can be used to detect anomalies if its pattern has been manipulated by attacks. We have also investigated and found that control-oriented attacks (e.g., opening a pump) and component failures (e.g., a pump running continuously) do not change noise values. For example, in attack #2 on the SWaT testbed, Pump 102 runs continuously due to a component failure, causing the LIT-101 reading to decrease. However, the noise pattern does not change, because the decreasing process itself is not manipulated and is "normal". Even so, from the perspective of control attacks and device failures, the period is anomalous when all these changes are considered as a whole.

The interaction among sensors. When applied to detect DAs, DEEPNOISE reported some anomalies that are not included in the attack details of the target sensor published by the dataset authors. To check whether these anomalies are false positives, we manually examined the original values during the anomalous periods. We found that these anomalies are caused by attacks on other sensors that are associated with the target sensor. For example, attack #22 stops UV-401 (an ultraviolet dechlorinator) and forces P-501 (a pump) to remain on. However, the description of attack #22 does not record the consequence of the attack for FIT-401 (a water flow sensor). We nonetheless observe that the values of FIT-401 change, because FIT-401 measures the water flow to UV-401 and is thus affected. We also observe such interactions between level sensors and flow sensors. This shows that physical connections exist between sensors, so a DA on one sensor may affect other sensors. This observation is useful when designing future anomaly detection methods.

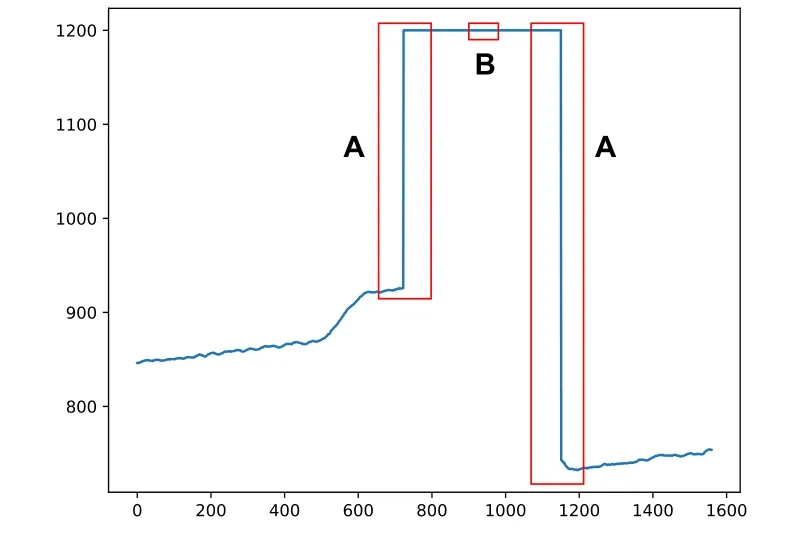

The impact of large δ_t on the LSTM detector. DA1-1 and DA1-2 inject large deviations δ_t into the original sensor data, so the noise pattern of normal data is changed by these DAs. DEEPNOISE uses an LSTM detector to inspect the extracted noise, and we find that the detector behaves differently in different periods of a DA. As illustrated in Figure 13, periods A and B are two different stages of DA1-1. Period A creates a sudden, large change to the original data, whereas period B produces a steady change. When we check the detection performance of DEEPNOISE, we find that period A is easily identified by the LSTM detector, but there are a few false negatives in period B. This is because the LSTM detector inspects temporal relationships among noise data, so the middle part of period B may be classified as normal. This issue may be addressed by adding more features to the LSTM detector. For example, the mean and variance of the noise can be used to detect anomalies.
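The suggested mean and variance features can be sketched as per-slice statistics of the extracted noise. In the sketch, they are compared against a reference taken from normal data. The flagging rule, tolerances, and reference values are hypothetical and are not part of DEEPNOISE. The example noise has three slices: a normal one, a constant (noise-free) one as in DA1, and a steadily shifted one as in period B.

```python
import statistics
from typing import List, Sequence, Tuple


def slice_features(noise: Sequence[float], k: int = 30) -> List[Tuple[float, float]]:
    """(mean, variance) of the noise in each non-overlapping length-k slice."""
    feats = []
    for start in range(0, len(noise) - k + 1, k):
        window = noise[start:start + k]
        feats.append((statistics.fmean(window), statistics.pvariance(window)))
    return feats


def flag_by_features(feats: Sequence[Tuple[float, float]], ref_mean: float, ref_var: float,
                     mean_tol: float, var_ratio: float) -> List[bool]:
    """Hypothetical rule: flag a slice whose mean drifts or whose variance changes too much."""
    flags = []
    for m, v in feats:
        drift = abs(m - ref_mean) > mean_tol
        var_off = v < ref_var / var_ratio or v > ref_var * var_ratio
        flags.append(drift or var_off)
    return flags


noise = [0.02, -0.02] * 15 + [0.0] * 30 + [0.12, 0.08] * 15
flags = flag_by_features(slice_features(noise), ref_mean=0.0, ref_var=0.0004,
                         mean_tol=0.05, var_ratio=4.0)
```

The second slice is flagged because its variance collapses. The third slice is flagged because its mean drifts even though its variance looks normal. A temporal model alone can miss this kind of steady shift.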

Figure 13. Different periods of direct attacks. A denotes sudden changes to the original values. B denotes small changes.

Sensor fingerprint and authentication. We find that the mean and variance of the sensor and process noise differ across sensors. This indicates that noise can be used as a sensor fingerprint. Indeed, Mujeeb et al. [8] use noise to generate sensor fingerprints. However, more features may be needed to build fingerprints, since mean and variance alone are not enough. In particular, manually designed features (e.g., skewness and kurtosis) or specialized neural networks (e.g., CNN-based networks) can be used to produce fingerprints. Once fingerprints are created, an authentication mechanism can be designed to prevent data-based attacks, and false or manipulated data can be removed from the CPS (e.g., PLCs can check all input data).
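A fingerprint built from the handcrafted features mentioned above (mean, variance, skewness, and kurtosis) might look like the following sketch, together with a nearest-fingerprint lookup as a toy authentication step. This is a hypothetical design, not the method of [8] or of DEEPNOISE. The noise sequences are synthetic, and the sensor names are used only as labels. In practice, the features would need to be normalized, because they live on different scales.

```python
import math
import statistics
from typing import Dict, List, Sequence


def fingerprint(noise: Sequence[float]) -> List[float]:
    """[mean, variance, skewness, excess kurtosis] of a noise sequence (population moments)."""
    mu = statistics.fmean(noise)
    var = statistics.pvariance(noise, mu)
    if var == 0:
        return [mu, 0.0, 0.0, 0.0]
    sd = math.sqrt(var)
    n = len(noise)
    skew = sum(((x - mu) / sd) ** 3 for x in noise) / n
    kurt = sum(((x - mu) / sd) ** 4 for x in noise) / n - 3.0
    return [mu, var, skew, kurt]


def closest_sensor(sample: Sequence[float], enrolled: Dict[str, List[float]]) -> str:
    """Hypothetical authentication step: return the enrolled sensor whose fingerprint is nearest."""
    fp = fingerprint(sample)
    return min(enrolled, key=lambda name: math.dist(fp, enrolled[name]))


enrolled = {
    "LIT-301": fingerprint([-1.0, 1.0] * 50),
    "FIT-401": fingerprint([-3.0, 3.0] * 50),
}
claimed = closest_sensor([-1.1, 1.1] * 50, enrolled)
```

If the nearest fingerprint does not match the sensor that claims to have sent the data, the reading could be treated as suspect.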

The design of the autoencoder and LSTM. We use an RDA to extract noise from sensor measurements. To show the feasibility of DEEPNOISE, the autoencoder in the RDA adopts a naive design that stacks basic encoder and decoder layers. The experiments show that this autoencoder can capture the characteristics of the input data. For the anomaly detector, since our goal is to detect anomalies in each sensor, we stack four LSTM layers to capture the temporal relationships of noise data. However, because sensors interact, a more complex design could capture the physical relationships among sensors. For example, a CNN-based neural network could learn sensor interactions from multivariate noise time-series data. We will investigate other detector designs in future work.

Deployment of DEEPNOISE. DEEPNOISE can be trained offline where computing resources are abundant, using training data collected during the normal operating stage of the CPS. The trained DEEPNOISE can then be deployed in the CPS to detect anomalies in real time, and users can update the trained models regularly. If certain CPS components have powerful computing resources, online training and updating can be adopted to reduce human effort.

VII.RELATED WORK

Anomaly detection in CPS is not a new research problem. However, in this work, we combine the physical properties of CPS with deep learning to automatically learn the intrinsic characteristics of CPS for anomaly detection. Previous research has detected anomalies by building system models of CPS or by using neural networks. Some research efforts have also studied interactions between the physical domain and cyberspace. In this section, we summarize related work from these aspects.

Physics-based system model methods. Mujeeb et al. used a Kalman filter to build a system model of CPS and then extracted noise (residual) data from the sensor measurements. For the extracted noise, the authors explored statistical (the Cumulative Sum and bad data) [28] and machine learning-based (SVM) [17] attack detectors. They also proposed a device authentication mechanism based on the noise fingerprint of each sensor [8]. Mujeeb et al. further investigated process offset by calculating the differences between state estimates and real measurements [42]. They defined process skew as the rate of change of a linear regression on process offsets, and used the process skew to detect attacks. Aoudi et al. used singular spectrum analysis to build a model of the physical process in CPS [7]. An attack causes sensor measurements to depart from the cluster observed under normal operation, and thus the attack is detected. Krotofil et al. used entropy metrics to monitor the status of sensors [9]. Spoofed sensor measurements fail the correlation entropy-based plausibility and consistency checks. The method was evaluated on the Tennessee Eastman challenge process. By comparison, DEEPNOISE adopts an RDA to isolate sensor and process noise from normal sensor measurements, which requires no manual effort to build system models.
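For contrast with DEEPNOISE's learned detector, the Cumulative Sum idea cited above [28] can be sketched as a generic two-sided CUSUM over residuals. This is the textbook form, not the exact parameterization used by Mujeeb et al. The bias (drift allowance) and threshold values are hypothetical.

```python
from typing import List, Sequence


def cusum_alarms(residuals: Sequence[float], bias: float, threshold: float) -> List[int]:
    """Two-sided CUSUM: return time indices where either cumulative statistic exceeds threshold."""
    s_hi = s_lo = 0.0
    alarms = []
    for t, r in enumerate(residuals):
        s_hi = max(0.0, s_hi + r - bias)  # accumulates persistent positive deviations
        s_lo = max(0.0, s_lo - r - bias)  # accumulates persistent negative deviations
        if s_hi > threshold or s_lo > threshold:
            alarms.append(t)
            s_hi = s_lo = 0.0  # restart after an alarm
    return alarms


# Zero-mean residuals followed by a sustained positive offset.
residuals = [0.1, -0.1] * 10 + [0.6] * 5
alarms = cusum_alarms(residuals, bias=0.2, threshold=1.0)
```

Small, zero-mean residuals never accumulate because the bias absorbs them. The sustained offset of 0.6 raises an alarm on its third sample (index 22).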

Deep learning-based anomaly detection methods. LSTM-based methods are used to capture the temporal dependence of measurements. Feng et al. proposed a two-step strategy to detect anomalies in industrial control systems [23]. First, a lightweight Bloom filter [43] was deployed to efficiently pick out anomalous traffic packets. Then, a stacked LSTM detector was used to detect packets sent by attackers, since cyber attacks change the time relationships of packets. In addition to time relationships, Su et al. also learned the stochasticity in multivariate time-series sensor data [44]. They used a VAE [45] to map raw input into stochastic variables, which were then connected to temporal representations learned by GRU variables. Finally, both stochasticity and temporal relations were used to detect anomalies. CNN-based methods are used to capture interactions among different sensors. Zhang et al. [16] used convolutional LSTM neural networks [46] to learn both sensor correlations and temporal patterns. Autoencoders are used to reduce dimensions and achieve unsupervised learning. Zhang et al. applied stacked autoencoders to inspect multivariate measurement data in the smart grid [47]. Finally, Li et al. [48] explored Generative Adversarial Networks (GANs) [49] to capture the spatial-temporal correlation of sensor data, using both the generator and the discriminator to detect anomalies. DEEPNOISE also uses an LSTM neural network to capture temporal correlations of noise data. Instead of inspecting raw input directly, however, DEEPNOISE extracts sensor and process noise to detect data integrity attacks (identifying the root cause of anomalies). This achieves better interpretability and exploits the intrinsic nature of CPS.

Interactions between physical devices and cyberspace. Ding et al. [50] discovered that IoT apps can interfere with other apps through physical channels. They developed a framework to inspect app interactions in physical space and found 37 interaction chains that could cause safety violations. Wang et al. [51] enriched such interaction rules and found that apps also interact in cyberspace. They used Satisfiability Modulo Theories to build a model for discovering interaction rules, and found that 66% of app deployment combinations are vulnerable. Alhanahnah et al. [52] also identified the interaction problem and proposed a formal specification method to discover violations, using a strategy to avoid the state explosion problem. They derived 29 safety and security rules, many of which had not been derived before. Zhou et al. [53] extended the interactions to smart apps, devices, the mobile app, and the cloud, and thereby discovered more types of vulnerabilities. Concretely, they discovered vulnerable logic interactions among these stakeholders. Finally, IOTGUARD [54] injects extra protection code into the source code of apps, which dynamically enforces safety policies at runtime. The average overhead of the method is 17%.

VIII.CONCLUSION

Security hazards in CPS endanger the property and lives of users. The need for expert knowledge and a lack of interpretability prevent anomaly detection methods from effectively protecting CPS. In this work, we used a robust deep autoencoder to automatically extract sensor and process noise from measurement data, which requires no prior assumptions or expert knowledge about the CPS. Using noise to detect anomalies also achieves reasonably good interpretability in the CPS scenario. Further, we designed an LSTM-based detector to examine the noise data, and a change in the noise indicates that the root cause of an anomaly is a data integrity attack. The evaluation on a realistic testbed suggests that our work outperforms state-of-the-art methods.

ACKNOWLEDGEMENT

This work was supported by National Natural Science Foundation of China (No.62172308,U1626107,61972297,62172144).

Published in China Communications.